OpenAI Reduced Bias and Improved DALL-E 2 Security

- July 19, 2022

Nonprofit OpenAI has announced that the latest update to its DALL-E 2 image generator has reduced bias and improved security.

According to the organization, the new technique allows the algorithm to generate images of people that more accurately reflect the diversity of the world’s population.

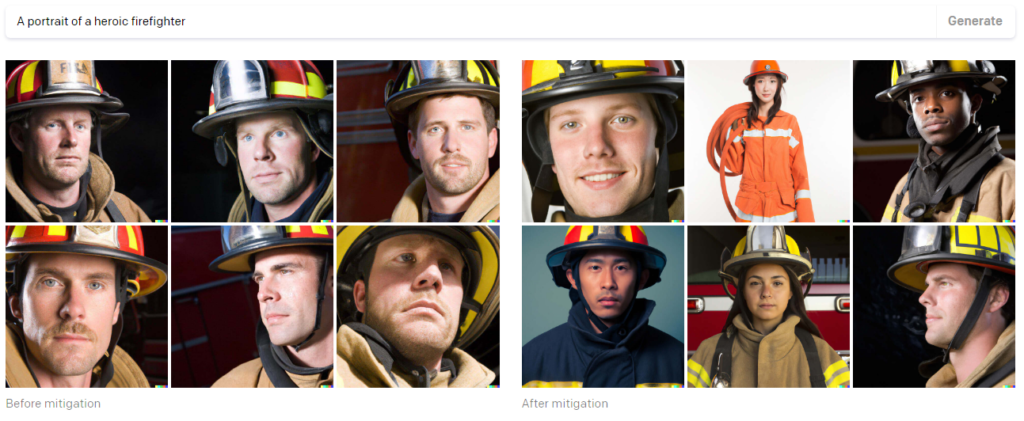

“This method is applied at the system level when a DALL-E prompt is issued with a description of a person without specifying race or gender, such as ‘firefighter,’” says a press release.

The company said that as a result of testing the new technique, users are 12 times more likely to say that DALL-E images contain people from different backgrounds.

“We plan to improve this technique over time as we collect more data and feedback,” OpenAI said.

The organization released a preview version of DALL-E 2 for a limited number of people in April 2022. The developers believe this allows them to better understand the capabilities and limitations of the technology and improve their security systems.

According to OpenAI, it also took other steps to improve the generator during the preview period.

OpenAI said that with these changes, they can open the algorithm to more users.

“Expanding access is an important part of our responsible deployment of AI systems, as it allows us to learn more about their use in real-world situations and continue to improve our security systems,” the developers said.

Recall that in July, researchers found that users could not tell images generated by a neural network from those created by a person.

In January, OpenAI released a new version of the GPT-3 language model that produces less offensive language, misinformation, and errors overall.

Source: Fork Log

I’m Sandra Torres, a passionate journalist and content creator. My specialty lies in covering the latest gadgets, trends and tech news for Div Bracket. With over 5 years of experience as a professional writer, I have built up an impressive portfolio of published works that showcase my expertise in this field.