NVIDIA ACE, the reinvention of NPCs with artificial intelligence

- May 29, 2023

Jensen Huang, founder and CEO of NVIDIA, delivered the keynote that kicked off Computex 2023, one of the most important tech trade shows of the year, which started today in Taipei, Taiwan and will continue until June 2nd. The fact that NVIDIA was the technology company chosen for the opening is already quite significant and, as expected, the company made sure the presentation lived up to expectations.

Most of the announcements were aimed, admittedly, at the professional sector, unlike what we are used to seeing at events more focused on the end consumer, such as CES. So we learned about very interesting developments that will undoubtedly affect our daily lives, although they will do so by improving the performance of the data centers and infrastructure behind the products and services we use daily, as well as those that are yet to come.

However, among the announcements there is one that will have a more direct impact on end users, especially the large majority of PC users who spend at least part of their time at the computer gaming. This is an area where NVIDIA has a lot to say, and to which it has made major contributions through artificial intelligence. AI is known to be a priority for NVIDIA, something we had new confirmation of last week, and today it is confirmed again with the announcement of NVIDIA ACE.

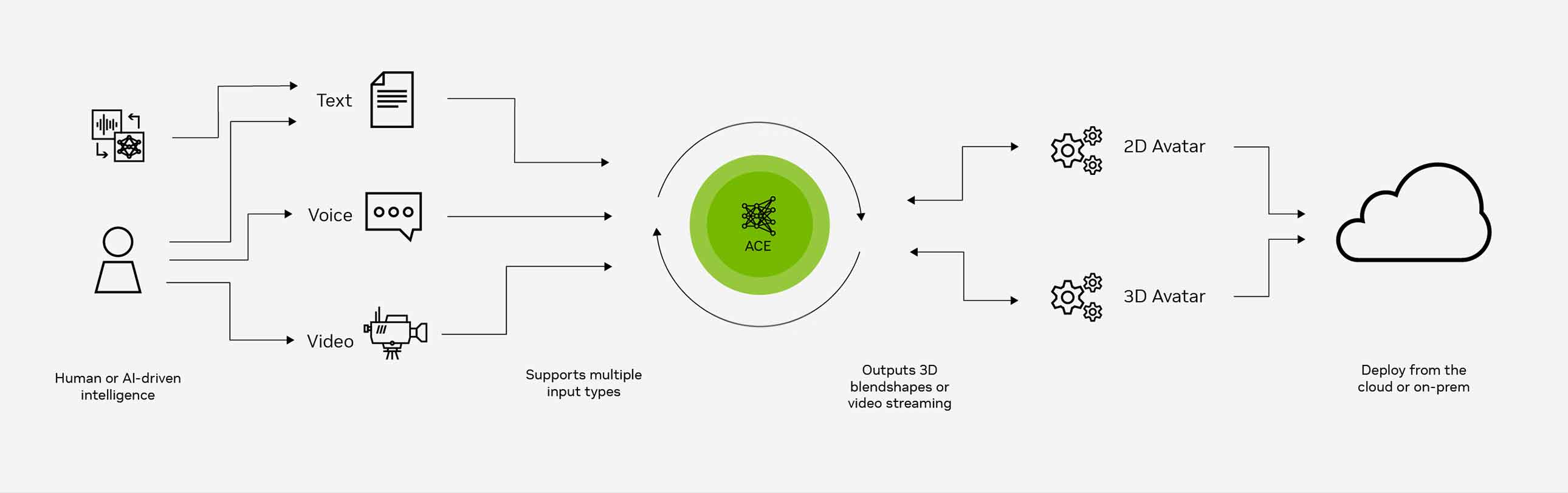

Behind the abbreviation ACE is Avatar Cloud Engine, a technology based on generative artificial intelligence models that will serve to significantly improve interactions with the NPCs (non-playable characters) of titles that integrate it, by letting them hold more natural dialogues. In the video above this paragraph, you can see a demo shown at Computex 2023 in which Kai, a playable character, enters a futuristic room where he has a conversation with an NPC (Jin). Jin’s reactions to Kai’s lines are generated in real time by an AI algorithm.

It’s true that, examined under a magnifying glass, Jin’s answers can seem a bit stiff and too formal (especially considering that many games these days compete to see which one uses the most aggressive language), but we understand that this will be adjusted over time. What’s really interesting is to think about the universe of possibilities that opens up with NVIDIA ACE: just as in the demo the NPC reacts to Kai’s phrases, nothing prevents the same technology from being used to respond to anything we want to say ourselves.

So if this AI model is deployed widely enough, it can be used to remove some very annoying elements of how NPCs currently work, such as the constant repetition of the same dialogues or their lack of response to context. A technology like NVIDIA ACE could put an end to all these problems, which in many cases completely break the immersive experience of the game and, at best, only end up provoking laughter.

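Avatar Cloud Engine has three basic components, which complement each other:

- NVIDIA NeMo, for building and customizing the language models behind the character’s dialogue.
- NVIDIA Riva, for speech recognition and text-to-speech, enabling spoken conversation.
- NVIDIA Omniverse Audio2Face, for generating the character’s facial animation from the audio.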

At the moment, no game has been announced that will integrate this technology, and we may have to wait a while for one. However, we can be sure that NVIDIA has already started working on it with developers, so it’s only a matter of time before NPCs become much more interesting than they are today.

Source: Muy Computer

Donald Salinas is an experienced automobile journalist and writer for Div Bracket. He brings his readers the latest news and developments from the world of automobiles, offering a unique and knowledgeable perspective on the latest trends and innovations in the automotive industry.