Why Nvidia is (not too) expensive

- June 27, 2023

Jensen Huang, CEO of Nvidia, admits that instances with Nvidia hardware are expensive in absolute terms, but says they are worth the price. When you start training AI models with Nvidia’s framework, the price becomes even more interesting.

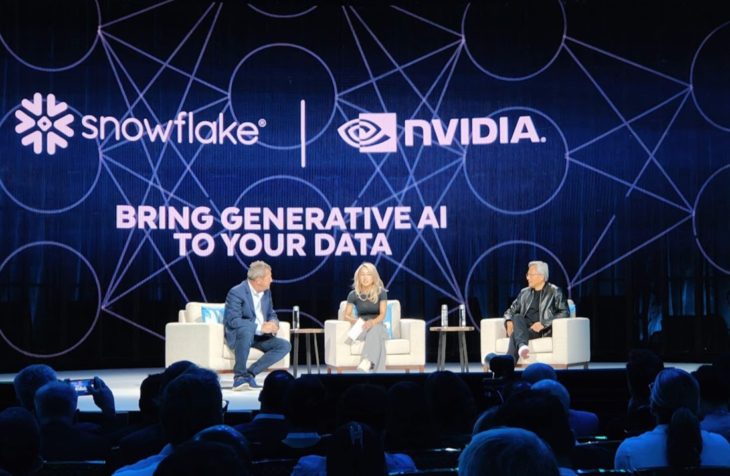

Nvidia doesn’t make CPUs; it makes GPUs and accelerators. Keep that in mind when you listen to Jensen Huang. At the Snowflake Summit in Las Vegas, Nvidia’s CEO declared the CPU dead. “Pure CPU scaling just isn’t possible anymore,” he says. “Anyone who invests more in processors alone hardly buys any additional computing power.”

According to Huang, CPU development is clearly entering its final chapter, and this shift comes at a crucial moment. “AI is the greatest computing innovation we’ve seen,” he says. “A computer can now write software for itself, which is unprecedented.”

In Huang’s view, the AI breakthrough arrives just as the CPU runs out of steam, and that is not a bad thing. After all, AI workloads behave differently from traditional workloads and require accelerators. “You have to accelerate every workload you can,” he points out.

And how do you accelerate? With Nvidia, of course. “You have to be able to run everything anywhere on Nvidia.” That was the message Huang brought to the Snowflake Summit, where he highlighted a new collaboration between the two companies. The future, in short, lies in AI powered by Nvidia GPUs.

“But it’s not free,” says Frank Slootman, CEO of Snowflake. Huang admits that Nvidia also wants money for its hardware. “Cloud instances with our accelerators are invariably the most expensive in the hyperscaler arsenal,” he confirms. “In absolute numbers, the costs are the highest.”

But, he argues, when you look at how many workloads these instances can handle, the story changes: measured by price per workload, Nvidia instances offer the best total cost of ownership (TCO) of all.
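The per-workload argument comes down to simple division: what an instance costs per hour, divided by how many workloads it completes in that hour. The sketch below uses made-up prices and throughput figures purely for illustration; they are not Nvidia, hyperscaler or benchmark numbers, and the `Instance` class is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    price_per_hour: float  # hypothetical hourly price (arbitrary currency)
    jobs_per_hour: float   # hypothetical throughput for one workload type

    def cost_per_job(self) -> float:
        # Cost per completed workload = hourly price / workloads completed per hour
        return self.price_per_hour / self.jobs_per_hour


# Illustrative numbers only: the GPU instance is far pricier per hour,
# but is assumed to finish many more jobs in that hour.
cpu = Instance("cpu-instance", price_per_hour=4.0, jobs_per_hour=2.0)
gpu = Instance("gpu-instance", price_per_hour=30.0, jobs_per_hour=40.0)

for inst in (cpu, gpu):
    print(f"{inst.name}: {inst.price_per_hour:.2f} per hour, "
          f"{inst.cost_per_job():.2f} per job")
```

With these assumed figures the GPU instance costs 7.5 times as much per hour yet comes out cheaper per job (0.75 versus 2.00). The comparison only favors the accelerator if the workload actually speeds up on it; for a traditional workload that barely parallelizes, the throughput advantage and the lower cost per job disappear.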

According to Huang, the story gets really interesting when you use Nvidia’s full stack. “With NeMo you can train foundation models with your own data. We pre-trained these foundation models on supercomputers and invested tens of millions of euros in doing so. The fine-tuning afterwards costs only a fraction of that.”

He compares foundation models to bright new graduates. “A foundation model is like a bright employee who has just graduated. No matter how smart they are, you always need to invest in onboarding to bring them into your business.”

This onboarding takes the form of further training of a foundation model on proprietary data. According to Huang, that step costs money, but only a fraction of the total, and in the end that holds true.

Huang justifies the premium for Nvidia hardware and software with clear arguments. He has a point, even if the argument lacks nuance. Competitor AMD, for example, clearly shows that the CPU is far from exhausted and that new innovations still deliver real performance gains.

Moreover, while AI workloads are booming, most enterprise workloads around the world are still very traditional. They are not easy to accelerate, but they do benefit from faster and more efficient processors.

In the context of AI, Huang makes a strong case for Nvidia, both in terms of acceleration performance and the value of a foundation model within the NeMo framework.

Source: IT Daily

As an experienced journalist and author, Mary has been reporting on the latest news and trends for over 5 years. With a passion for uncovering the stories behind the headlines, Mary has earned a reputation as a trusted voice in the world of journalism. Her writing style is insightful, engaging and thought-provoking, as she takes a deep dive into the most pressing issues of our time.