The Lumi supercomputer helps build giant LLMs that speak European languages

- July 18, 2023

The computing power of the Lumi supercomputer, in which our country has also invested, is being used by academics to quickly build new, open LLMs for research.

Microsoft had to build a supercomputer in the cloud to develop the GPT-3 and GPT-4 models behind ChatGPT. These large language models (LLMs), however, are neither open nor transparent and remain the property of OpenAI. Researchers at the University of Turku in Finland therefore want to develop their own LLMs for use in science, and ones that are not centered on English.

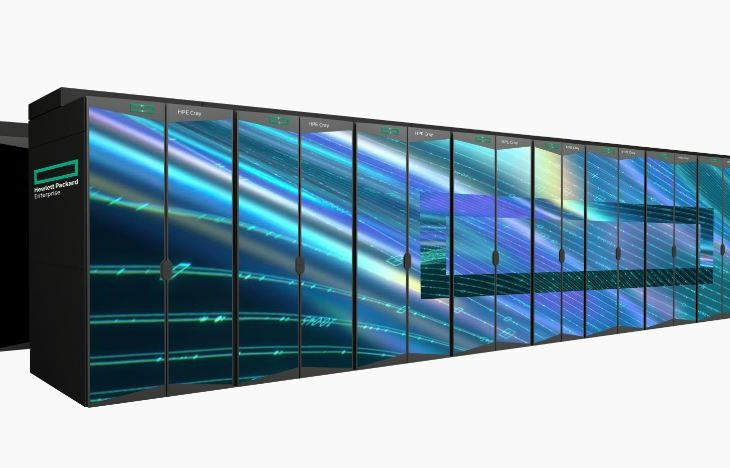

This also requires a supercomputer. Fortunately, there is a real one close at hand: Lumi. Lumi is located in Finland and has a computing power of 309 petaflops. Built by a consortium that includes our country, the system is currently the third most powerful in the world. The researchers use this computing power to train complex models themselves within a practical time frame.

On 192 nodes, training a model with 176 billion parameters takes two weeks. For comparison, GPT-4 is reportedly a mix of several models, each with 220 billion parameters.
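To put "192 nodes for two weeks" in more familiar units, the figure can be converted into node-hours and GPU-hours. The sketch below is only a back-of-the-envelope calculation: the assumption that each Lumi GPU node holds four AMD Instinct MI250X modules comes from public descriptions of the system, not from this article, and the estimate ignores restarts, downtime and idle time.

```python
# Rough compute budget for the 176-billion-parameter training run.
# ASSUMPTION (not stated in the article): each Lumi GPU node carries
# 4 AMD Instinct MI250X modules.
GPUS_PER_NODE = 4


def training_budget(nodes: int, days: float, gpus_per_node: int = GPUS_PER_NODE) -> tuple[float, float]:
    """Return (node_hours, gpu_hours) for `nodes` nodes running for `days` days."""
    node_hours = nodes * days * 24          # every node runs around the clock
    gpu_hours = node_hours * gpus_per_node  # scale by accelerators per node
    return node_hours, gpu_hours


if __name__ == "__main__":
    node_hours, gpu_hours = training_budget(nodes=192, days=14)
    print(f"{node_hours:,.0f} node-hours, {gpu_hours:,.0f} MI250X-hours")
    # prints "64,512 node-hours, 258,048 MI250X-hours"
```

Changing `gpus_per_node` makes it easy to compare against a cluster with a different node layout.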

For the new LLMs, the researchers are working with Hugging Face, which has established itself as a kind of GitHub for AI models. Lumi's involvement is notable because most AI models are trained on Nvidia hardware.

The powerful Nvidia Hopper H100, in particular, is a popular accelerator for training. Lumi, however, is built entirely on AMD components, combining Epyc processors with Instinct MI250X GPUs. AMD worked with the researchers to integrate the software with this complex hardware platform.

The collaboration is important because it shows that, even without Microsoft or AWS, the EU has the infrastructure to be competitive in AI development. At the University of Turku the priority was a Finnish LLM, but the aim is to develop so-called foundation models for all official European languages.

A foundation model is a pre-trained model that can then be adapted for concrete applications. Jensen Huang, CEO of Nvidia, compared it to a new employee who has a lot of knowledge but still needs some training for their first specific job. The university is therefore working on a kind of European AI school, where models "graduate" with training in European languages. The goal is to build the world's largest open model with full support for European languages.

Source: IT Daily

As an experienced journalist and author, Mary has been reporting on the latest news and trends for over 5 years. With a passion for uncovering the stories behind the headlines, Mary has earned a reputation as a trusted voice in the world of journalism. Her writing style is insightful, engaging and thought-provoking, as she takes a deep dive into the most pressing issues of our time.