Nvidia boosts the Grace Hopper AI superchip with blazing-fast HBM3e

- August 9, 2023


The Nvidia GH200 Grace Hopper superchip is getting faster memory, along with a new dual-chip platform that offers 3.5 times more memory capacity.

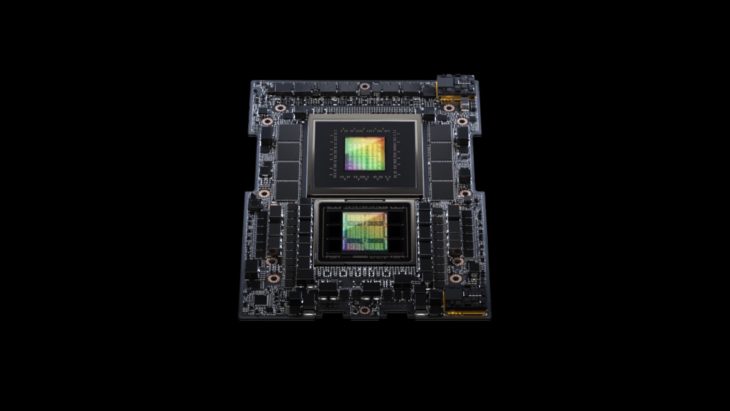

Nvidia is having a very good year with its extraordinarily powerful Grace Hopper superchip. The AI chip combines Grace, an Arm-based CPU, with Nvidia's powerful H100 GPU. It's a dream come true for generative AI workloads, and it's earning Nvidia a tidy sum as the company struggles to keep up with demand. A single Nvidia H100 GPU already costs around $40,000 on average today.

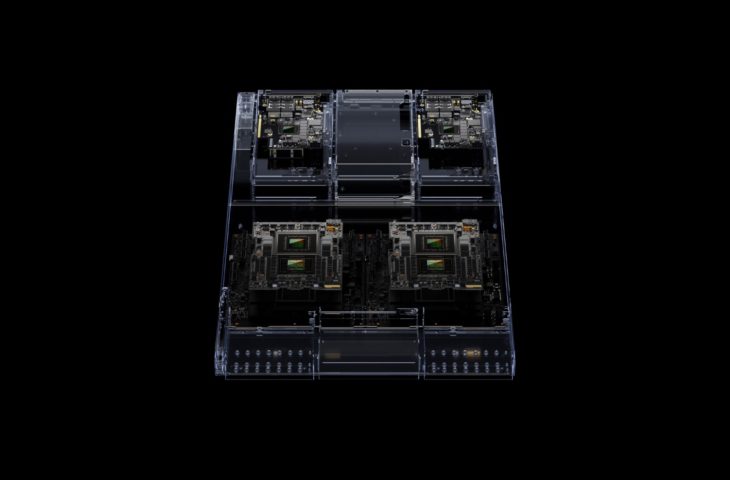

The Nvidia GH200 itself is not new, but the platform on which the manufacturer bundles two of these chips is. With it, Nvidia is targeting complex generative AI workloads such as large language models (LLMs) and vector databases. Numerous configurations are available. The top model has 144 Arm Neoverse cores, 8 petaflops of AI performance and a whopping 282 GB of HBM3e memory.

Working with SK Hynix, Nvidia is the first company to use HBM3e. Thanks in part to a smaller 10-nanometer process, the new memory is around 50 percent faster than the HBM3 used in the original GH200. That extra speed matters for LLMs and neural networks in general: generating output means streaming enormous numbers of model weights out of memory, so these workloads are often limited by memory bandwidth rather than by raw compute.

In the dual configuration on the new GH200 Grace Hopper superchip platform, Nvidia says memory capacity is up to 3.5 times higher and bandwidth three times higher than in the current generation. In total, the HBM3e delivers a combined bandwidth of 10 TB/s.
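To make the bandwidth argument concrete, here is a rough back-of-envelope sketch. It assumes that generating one token requires reading every model weight from HBM once, so the token rate is capped at bandwidth divided by model size. The 70-billion-parameter, 16-bit model is a hypothetical example, and the estimate ignores KV-cache traffic, batching, compute limits and interconnect overhead; treat it as an illustrative ceiling, not a benchmark.

```python
# Back-of-envelope ceiling for memory-bandwidth-bound LLM decoding.
# Assumption: every weight is read from HBM once per generated token.
HBM_CAPACITY_GB = 282      # dual GH200 configuration, as stated in the article
HBM_BANDWIDTH_TB_S = 10.0  # combined HBM3e bandwidth, as stated in the article


def decode_ceiling(params_billion, bytes_per_param, bandwidth_tb_s=HBM_BANDWIDTH_TB_S):
    """Upper bound on tokens per second: bytes/s of bandwidth over bytes per token."""
    model_bytes = params_billion * 1e9 * bytes_per_param
    return (bandwidth_tb_s * 1e12) / model_bytes


def fits_in_hbm(params_billion, bytes_per_param, capacity_gb=HBM_CAPACITY_GB):
    """True if the raw weights (in GB) fit in the available HBM."""
    return params_billion * bytes_per_param <= capacity_gb


# Hypothetical 70B-parameter model with 2-byte (16-bit) weights = 140 GB.
print(fits_in_hbm(70, 2))               # True
print(round(decode_ceiling(70, 2), 1))  # 71.4 tokens/s ceiling
```

Because the ceiling is simply bandwidth divided by model size, it scales linearly: under this simplified model, 50 percent more bandwidth raises the ceiling by 50 percent, which is why faster HBM matters so much for these workloads.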

The new platform also forms the basis for a data center system called the Grace Hopper Compute Tray. Each tray combines a single GH200 with Nvidia's BlueField-3 and ConnectX-7 chips, which are designed to speed up network traffic to and from the server. BlueField-3 can also offload a limited set of computational tasks.

Up to 256 Grace Hopper Compute Tray modules can be connected in a single cluster. According to Nvidia CEO Jensen Huang, such a cluster delivers 1 exaflop of AI performance.
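As a quick sanity check, the cluster figure can be compared with the dual-chip figure quoted earlier. This sketch assumes each tray holds one GH200 (as the article describes) and that both numbers are peak "AI performance" figures measured the same way; it only divides the stated totals and says nothing about sustained throughput.

```python
# Cross-check Nvidia's quoted peak "AI performance" figures per GH200.
CLUSTER_SUPERCHIPS = 256      # one GH200 per Compute Tray, up to 256 trays
CLUSTER_AI_FLOPS = 1e18       # 1 exaflop, per Jensen Huang
DUAL_CONFIG_AI_FLOPS = 8e15   # 8 petaflops for the two-chip configuration

per_chip_from_cluster = CLUSTER_AI_FLOPS / CLUSTER_SUPERCHIPS
per_chip_from_dual = DUAL_CONFIG_AI_FLOPS / 2

print(f"{per_chip_from_cluster / 1e15:.2f} PF per superchip (cluster figure)")     # 3.91
print(f"{per_chip_from_dual / 1e15:.2f} PF per superchip (dual-config figure)")    # 4.00
```

Both routes land at roughly 4 petaflops per superchip, so the two headline numbers are consistent with each other.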

Source: IT Daily

As an experienced journalist and author, Mary has been reporting on the latest news and trends for over 5 years. With a passion for uncovering the stories behind the headlines, Mary has earned a reputation as a trusted voice in the world of journalism. Her writing style is insightful, engaging and thought-provoking, as she takes a deep dive into the most pressing issues of our time.