AI’s ecological footprint: a ticking time bomb?

- November 29, 2023

New AI applications will have a major impact on our daily lives, but also on the planet. How do we ensure that technological progress is not accompanied by ecological decline? In this dossier we look at the ecological footprint of generative AI and initiatives to limit it.

If there’s one technology trend that has dominated the news in 2023, it’s generative AI. The craze began at the end of November 2022, when OpenAI launched a chatbot called ChatGPT. The rest is history. All the major tech giants, most notably Microsoft and Google, have jumped on the bandwagon, and today barely a day goes by without an announcement of a new AI application.

The debate about AI often revolves around the impact the technology will have on people. There are positive voices that expect AI to make our lives easier, and skeptical voices that see the technology primarily as a spreader of disinformation. What sometimes flies under the radar is the impact of AI on our planet. Running ChatGPT requires an arsenal of servers that consume valuable resources. As AI models are expected to keep getting bigger and better, they require increasingly powerful servers, which in turn use more of these resources. The AI boom risks becoming a ticking ecological time bomb.

Not everyone needs to be an expert, but to understand the environmental impact of AI, some basic knowledge of the technology is helpful. There is more to ChatGPT than meets the eye. The chatbot itself is just an interface that OpenAI has built on top of the large language model (LLM) GPT-4. GPT, in turn, stands for generative pre-trained transformer, where each word says something about the model. A “transformer” is a type of model that specializes in natural language understanding. “Pre-trained” means OpenAI has trained the model in advance, and “generative” refers to its content creation capabilities.

The “brain” of an LLM is the neural network. In a way, such a neural network works like the human brain. Just as our brain converts every sensory stimulus (input) into a physical action (output), the neural network ensures that your prompt (input) receives an appropriate response (output). To do this, the neural network draws on the internal knowledge it built up during the training phase and independently links the input to the most fitting output. This process is called deep learning in technical jargon. Each adjustable connection between input and output in the network is called a parameter.

The rule of thumb for gauging the intelligence of an AI model is surprisingly simple: the more parameters, the better. GPT-4 is reported to have no fewer than a trillion parameters (OpenAI has not confirmed the figure), while its predecessor GPT-3 had 175 billion. The next generation, GPT-5, could add many more.
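To give a feel for what a parameter count means, the sketch below counts the weights and biases in a small fully connected network. It is only an illustration: the layer sizes are hypothetical, and a real LLM such as GPT-3 uses a transformer architecture that is far more elaborate than this toy network.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of neurons per layer, from input to output.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        # One weight per connection between the two layers,
        # plus one bias per neuron in the receiving layer.
        total += n_in * n_out + n_out
    return total


# Hypothetical toy network: 4 inputs, two hidden layers of 8 neurons, 2 outputs.
toy_parameters = count_dense_parameters([4, 8, 8, 2])
print(toy_parameters)  # 40 + 72 + 18 = 130

# Figure quoted in the article for GPT-3.
GPT3_PARAMETERS = 175_000_000_000
print(f"GPT-3 has roughly {GPT3_PARAMETERS / toy_parameters:.1e} times as many parameters")
```

Even this tiny network already has 130 parameters, which shows how quickly the count grows as layers get wider and deeper.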

None of this happens in a vacuum. AI models “live” in the data centers of the large hyperscalers; in the case of ChatGPT, this is Microsoft. It’s no secret that data centers are big energy consumers. Large data centers contain thousands of servers that must be powered around the clock to keep Internet services online 24/7.

Training generative AI models is a very energy-intensive process. Reading and processing large amounts of data in the gigabyte range requires the most powerful chips, along with enough server capacity in the data centers to store that data. But it doesn’t end after training. Daily use of the system (inference) also requires energy. Chipmaker Nvidia says that ninety percent of the energy consumption of its AI chips comes from inference.

Exactly how much ChatGPT consumes is more of a mystery. OpenAI is very sparing with information in this regard. Scientists tried to calculate it themselves and arrived at 1,287 megawatt-hours (MWh) for training GPT-3, the model behind the original ChatGPT. This corresponds to the annual energy consumption of about 120 average households. Each query to ChatGPT would cost approximately 0.001 to 0.01 kWh, much more than a Google search (0.0003 kWh). According to estimates published on Towards Data Science, ChatGPT would be responsible for 8.4 tons of CO2 emissions annually: twice as much as an average person. These are all estimates and not exact data.
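The figures above can be put side by side with some simple arithmetic. The sketch below assumes the training estimate is expressed in megawatt-hours (MWh), which is an assumption about the unit; the function names are purely illustrative, and the inputs are the estimates quoted in the article, not measured data.

```python
def energy_per_household_mwh(total_mwh, households):
    """Annual energy per household implied by the comparison."""
    return total_mwh / households


def ratio_range(low_kwh, high_kwh, baseline_kwh):
    """How many times more energy a query costs than the baseline."""
    return low_kwh / baseline_kwh, high_kwh / baseline_kwh


# Estimates quoted in the article (training energy assumed to be in MWh).
TRAINING_MWH = 1287
HOUSEHOLDS = 120
CHATGPT_QUERY_KWH = (0.001, 0.01)
GOOGLE_SEARCH_KWH = 0.0003

per_household = energy_per_household_mwh(TRAINING_MWH, HOUSEHOLDS)
low, high = ratio_range(*CHATGPT_QUERY_KWH, GOOGLE_SEARCH_KWH)

print(f"Implied household consumption: {per_household:.1f} MWh per year")
print(f"One ChatGPT query costs about {low:.0f} to {high:.0f} times a Google search")
```

Under these assumptions, each household in the comparison uses just under 11 MWh per year, and a single ChatGPT query costs somewhere between roughly 3 and 33 Google searches.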

It’s not just the absolute amount of electricity that matters, but also how efficiently it is used. There are positive signs here, as cloud data centers are proving to be more efficient. Google claims a PUE (power usage effectiveness) of 1.10 in its data centers, Microsoft 1.12. In traditional, on-premises data centers, this value is often 2 or more. A PUE value of 1 means that all the energy entering the data center goes to the computing equipment itself, with nothing spent on overhead such as cooling. Although global computing capacity has increased by 550 percent since 2010, energy consumption in data centers has only increased by six percent, according to an article in Science magazine from 2020. Even then, three years ago, the increasing demand for AI was already seen as an ecological challenge for the future.
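PUE is commonly defined as the total energy a facility draws divided by the energy that reaches the IT equipment. The sketch below shows what the quoted values imply in practice; the 1,000 kWh IT load is a hypothetical figure chosen for illustration, and the function names are not taken from any real tool.

```python
def pue(total_facility_kwh, it_equipment_kwh):
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(it_equipment_kwh, pue_value):
    """Energy spent on cooling, power conversion, etc. for a given IT load."""
    return it_equipment_kwh * (pue_value - 1.0)


# Hypothetical IT load of 1,000 kWh, with the PUE values quoted in the article.
IT_LOAD_KWH = 1000
for name, value in [("Google", 1.10), ("Microsoft", 1.12), ("On-premises", 2.0)]:
    extra = overhead_kwh(IT_LOAD_KWH, value)
    print(f"{name}: PUE {value:.2f} -> about {extra:.0f} kWh of overhead")
```

For the same computing work, a data center with a PUE of 2 spends as much energy on overhead as on the servers themselves, while a PUE of 1.10 adds only about ten percent.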

Until now, end users have not had to worry too much about IT energy consumption, but that will soon change. European legislation on sustainability reporting also takes emissions from external parties into account. This makes companies more aware of the footprint of the external services they use and increases the pressure on OpenAI and its peers to share transparent figures.

In addition to electricity, data centers also use our planet’s most valuable resource: water. Heavy workloads cause the temperature in data centers to rise quickly. Water is then evaporated to cool the servers. Indirectly, AI also drives water consumption through electricity generation and the production of servers and chips. As with energy consumption, technology companies are reluctant to reveal absolute quantities, but at large cloud companies like Microsoft and Google, water consumption is quickly rising to several billion liters per year, much of which is drinking water.

American researchers tried to calculate how much water ChatGPT “drinks”. Based on these estimates, the chatbot appears to have an unquenchable thirst. ChatGPT’s training would have required at least 700,000 liters of water. After a conversation of just five questions, ChatGPT has already drunk a half-liter bottle. Partly as a result, Microsoft saw a 34 percent increase in water consumption in its data centers. At the current pace, and taking into account the rapid increase in user numbers, ChatGPT’s water consumption could reach six billion cubic meters by 2027. That’s four to six times as much as Denmark’s total annual water consumption.

While data centers have made great strides in recent years in using energy more efficiently, there is still much work to be done when it comes to water consumption. Microsoft reports a global WUE (water usage effectiveness) of 0.49, meaning that roughly half a liter of cooling water is used for every kWh of electricity consumed by the servers. Ideally, this value is as close to 0 as possible. The WUE value varies greatly from region to region. Google, Microsoft and AWS promise to be water neutral by 2030, but they still have a long way to go.
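WUE is usually expressed in liters of water per kWh of energy used by the IT equipment. The sketch below applies the value Microsoft reports to a hypothetical server load of 1,000 kWh; the load and the function names are assumptions made for illustration only.

```python
def wue(water_liters, it_equipment_kwh):
    """Water usage effectiveness: liters of water per kWh of IT energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return water_liters / it_equipment_kwh


def cooling_water_liters(it_equipment_kwh, wue_l_per_kwh):
    """Water needed to cool a given IT load at a given WUE."""
    return it_equipment_kwh * wue_l_per_kwh


# Hypothetical IT load, with the WUE value quoted in the article.
IT_LOAD_KWH = 1000
MICROSOFT_WUE = 0.49

liters = cooling_water_liters(IT_LOAD_KWH, MICROSOFT_WUE)
print(f"{IT_LOAD_KWH} kWh of server load uses about {liters:.0f} liters of water")
```

At a WUE of 0.49, every 1,000 kWh of server electricity comes with roughly 490 liters of cooling water, which is why a value close to 0 is the goal.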

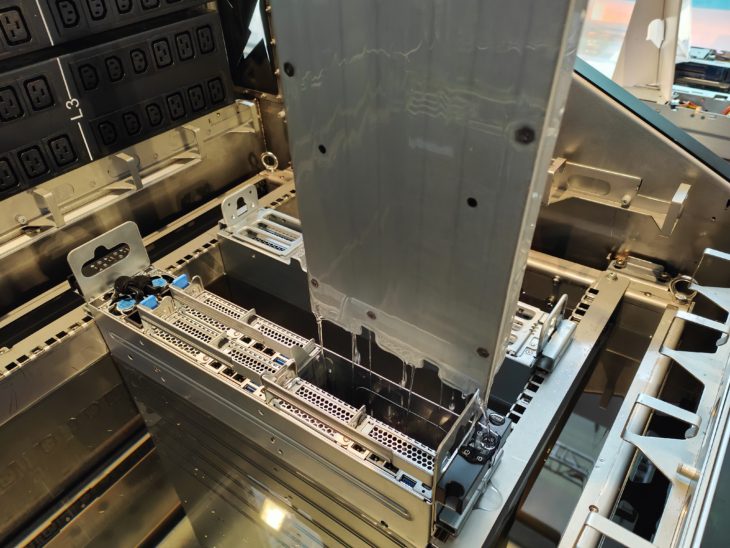

New technologies must ensure that less water is used, and that it is used more efficiently. Immersion cooling is gradually finding its way into data centers. Instead of being cooled with evaporated water, servers are submerged in a bath of liquid that keeps them cool for longer. The Taiwanese server company Gigabyte is one of the pioneers of this technology.

How to make AI models more sustainable will be just as important a technology question in 2024 as how to develop even better models. There are several things that engineers need to consider. The key to sustainable AI seems to lie more than ever in the data center. Even more than the technologies used within its walls, the location of the data center seems to be the deciding factor.

First and foremost, proximity to sustainable energy sources is a necessity. Wind turbines and solar panels will not be a luxury but a basic requirement for data centers in the coming years. The climate and proximity to sufficient water also seem to be self-evident criteria. With increasing drought around the world, there is no justification for placing a data center in the middle of the desert where there is barely enough drinking water for the population, even if there may be more solar energy there.

Google angered the Uruguayan population last summer when it announced plans for a new data center in the South American country, which was experiencing its worst drought in more than seventy years. In warm, tropical climates you will always need more water to keep your servers cool than in temperate climates. When you add up all the climatic factors, Northern Europe seems to be the promised land for data centers. Sweden and Norway are rubbing their hands at the prospect of welcoming the big tech companies.

But the technology itself will also play a role, starting with the chips installed in the data centers. New processors and AI chips are manufactured with increasingly efficient process technology, meaning that each generation uses less energy for the same performance. Optimizing the chips for AI workloads also reduces power consumption. Chip manufacturers such as Nvidia, Intel, AMD and Arm therefore bear a great responsibility.

The third link is the algorithms. It is no longer tenable for AI systems to keep getting bigger. The companies that build the models seem to be starting to realize this. OpenAI CEO Sam Altman advocates such a “growth freeze” and argues that research should focus on how to achieve the same performance gains with fewer parameters, rather than on endlessly adding more parameters.

Finally, you as a user also have a role to play. Remember that every question you ask ChatGPT, no matter how practical or banal, costs energy and water. We must learn to use this wonderful technology consciously. Sustainable AI can only become a reality if all links in the technology chain are on the same wavelength.

Source: IT Daily

As an experienced journalist and author, Mary has been reporting on the latest news and trends for over 5 years. With a passion for uncovering the stories behind the headlines, Mary has earned a reputation as a trusted voice in the world of journalism. Her writing style is insightful, engaging and thought-provoking, as she takes a deep dive into the most pressing issues of our time.