AWS and Nvidia are using re:Invent 2023 to expand their partnership. Nvidia’s latest GPUs are coming to the AWS cloud first, along with the underlying services for training LLMs.

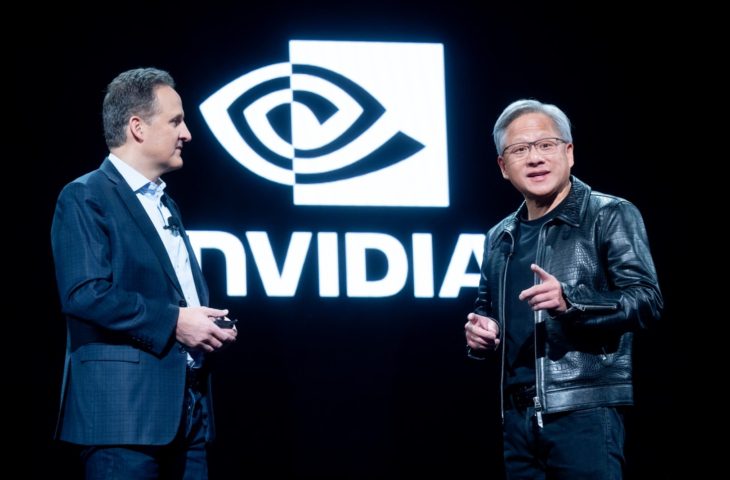

AWS underlined its close ties with Nvidia at re:Invent 2023 by inviting Jensen Huang, by now a popular guest at technology trade shows. The Nvidia CEO personally announced that AWS would be the first to offer its latest GPUs via the cloud: the Nvidia L40S and the GH200, the new eye-catcher in the Hopper GPU portfolio.

Having the latest and most powerful GPUs is nice, but it is only one piece of the AI puzzle. Without solid infrastructure around them, these GPUs cannot be put to work. AWS will therefore set up “ultraclusters” in which customers can scale to thousands of Nvidia GPUs at the same time, allowing companies to build their own AI supercomputers. AWS and Nvidia are demonstrating the scale this can reach with “Project Ceiba”, a supercomputer with more than 16,000 GH200 chips, good for 65 exaflops of computing power.

Microservices for AI

Now that your supercomputer is up and running, it’s time to start training your AI models. Nvidia and AWS are joining forces here as well. AWS is the first major cloud provider to add Nvidia’s DGX Cloud service for training AI models to its ecosystem. DGX Cloud offers enough capacity to support models with up to a trillion parameters. In addition, there will be new EC2 instances tailored to Nvidia GPUs.

We now have a supercomputer and the necessary capacity in-house, but the most important ingredient is still missing: data. For AI models, the quality of that data matters just as much as the quantity. As you can imagine, Nvidia and AWS have found a solution here too. Nvidia is expanding its NeMo platform with NeMo Retriever, an “AI microservice” within the AWS cloud.

This microservice adds a special touch to your newly designed LLM: Retrieval Augmented Generation (RAG). This technical term means that the model retrieves relevant data from reliable external sources and uses it to ground its answers, rather than relying only on what it learned during training. According to Huang, combining generative AI with RAG is the perfect recipe for developing “killer apps.” NeMo Retriever is offered through AWS Marketplace.
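
To make the idea behind RAG a little more concrete, here is a minimal Python sketch of the retrieval step. It is a toy illustration and not NeMo Retriever's actual API: the `DOCUMENTS` list and the functions `tokenize`, `cosine`, `retrieve`, and `build_prompt` are all hypothetical names made up for this example, and simple word-count similarity stands in for the learned embeddings a real retriever would likely use.

```python
import math
import re
from collections import Counter

# Hypothetical mini knowledge base; a real system would index company documents.
DOCUMENTS = [
    "Project Ceiba is a supercomputer with more than 16,000 GH200 chips.",
    "NeMo Retriever is an AI microservice offered within the AWS cloud.",
    "AWS plans to offer the Nvidia L40S and GH200 GPUs in its cloud.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counter objects)."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda doc: cosine(query, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Put the retrieved text in front of the question before it goes to an LLM."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("What is Project Ceiba?", DOCUMENTS))
```

The prompt that comes out would then be sent to the language model, so the answer is grounded in the retrieved passage rather than only in what the model memorized during training.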