US researchers have trained a giant LLM on the Frontier supercomputer. In doing so, they used only about eight percent of the available accelerator power.

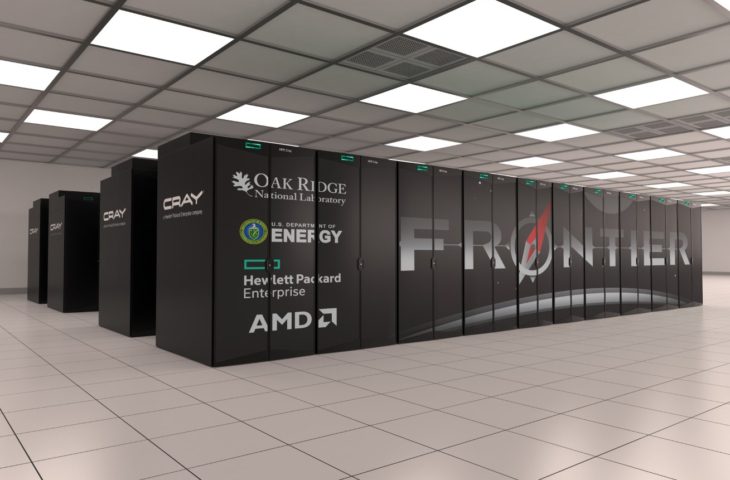

Frontier, the world’s most powerful supercomputer and the first exascale system, is capable of training large language models (LLMs). That is the finding of a study by Oak Ridge National Laboratory in the USA, where the supercomputer is located. The researchers trained two LLMs, one with a trillion parameters and one with 175 billion parameters, using only a fraction of Frontier’s available computing power.

Memory

Frontier features 7,472 AMD Epyc 7A53 processors supported by 37,888 AMD Instinct MI250X accelerators. Memory is the biggest bottleneck when training an LLM: the researchers needed about 14 TB, while each MI250X offers 64 GB. Spreading the work across many GPUs is the solution, but parallelizing a training workload on this scale brings complications of its own, because the GPUs must exchange data with one another quickly and in precise coordination.
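A quick back-of-the-envelope calculation shows why a single accelerator cannot do the job. The minimal sketch below divides the roughly 14 TB requirement by the 64 GB per accelerator quoted above. It assumes decimal units (1 TB = 1,000 GB), treats the 14 TB as the entire memory footprint, and assumes that footprint could be split perfectly evenly, so the result is only a lower bound and not the configuration the researchers actually used. The function name `min_gpus` is purely illustrative.

```python
import math

# Figures quoted in the article; decimal units (1 TB = 1,000 GB) are an assumption.
MEMORY_NEEDED_GB = 14_000   # ~14 TB for training the trillion-parameter model
MEMORY_PER_GPU_GB = 64      # 64 GB per MI250X, as stated in the article


def min_gpus(total_gb: float, per_gpu_gb: float) -> int:
    """Hypothetical helper: lower bound on accelerators needed just to hold
    the training state, assuming it splits perfectly evenly across them."""
    return math.ceil(total_gb / per_gpu_gb)


print(min_gpus(MEMORY_NEEDED_GB, MEMORY_PER_GPU_GB))  # → 219
```

In practice, real parallelization schemes add overhead (duplicated data, communication buffers), which is one reason the number of GPUs actually used is far higher than this bound.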

In the end, the researchers used 3,072 of the 37,888 GPUs to train the models. Scaling was a challenge because this kind of work is usually done on Nvidia hardware within the CUDA ecosystem, and the researchers found AMD’s competing ROCm platform somewhat more spartan by comparison. With their work, the scientists are giving the AMD ecosystem a welcome boost.
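The “eight percent” figure follows directly from these GPU counts. A trivial sketch of the calculation, using only the numbers given in the article (the helper name `gpu_share` is hypothetical):

```python
# GPU counts as given in the article.
GPUS_USED = 3_072
GPUS_TOTAL = 37_888


def gpu_share(used: int, total: int) -> float:
    """Hypothetical helper: fraction of the machine's GPUs used for training."""
    return used / total


print(f"{gpu_share(GPUS_USED, GPUS_TOTAL):.1%}")  # → 8.1%
```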