Scientists look at the world from animals’ perspective

- January 24, 2024

A group of scientists has developed a camera system that mimics the way animals see objects in their natural environment. The system records images in the RGB and ultraviolet ranges of the spectrum. Experiments have shown that data obtained from the device correlates with what animals actually perceive. The new technique could help ecologists and filmmakers better understand how other species see the world in motion rather than in static scenes.

The human eye detects light only within a relatively narrow range, between infrared and ultraviolet radiation. Science has long been interested in how animals see, because each species perceives the world in its own way.

For example, goldfish detect ultraviolet light, which helps them communicate and attract mates. Mantis shrimp are known to distinguish circular polarization of light, and bees linear polarization. But earlier experiments demonstrating this were limited to measuring photoreceptor responses, whereas what an animal actually sees depends heavily on lighting, changes in the environment, and the movement of objects.

A team of American and British researchers has created a tool that captures colors in motion, expanding the possibilities of sensory ecology. The study was published in the journal PLoS Biology.

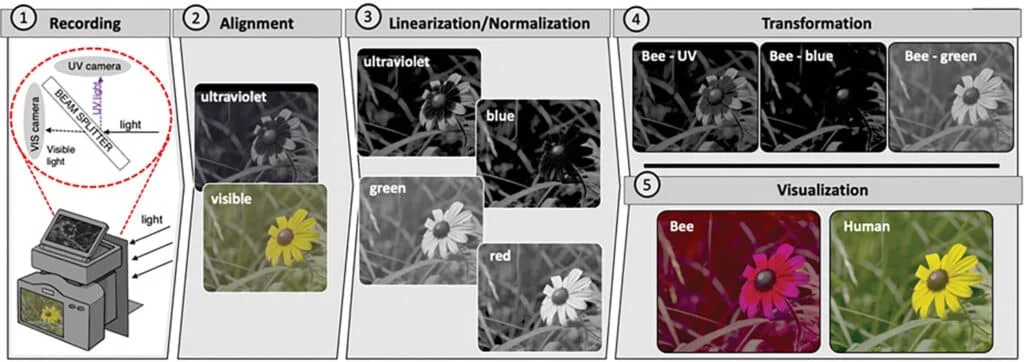

The biologists developed a camera system that records images in four bands: ultraviolet, blue, green, and red. The body of the device was 3D-printed. The scientists noted that the system is flexible and that, if desired, the camera can be reconfigured for the infrared range or for polarized light. The recorded data is processed by open-source software that anyone who wants to repeat the experiments can download.
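To make the processing step more concrete, here is a minimal Python sketch of one way a four-band pixel could be turned into estimated photoreceptor responses: a weighted sum of the camera bands for each receptor. This is an assumption made for illustration, not a description of the published software. The `HYPOTHETICAL_WEIGHTS` table, the receptor names, and the function `estimate_receptor_responses` are all invented here.

```python
# The four camera bands named in the article, in a fixed order.
BANDS = ("uv", "blue", "green", "red")

# Hypothetical weights: how strongly each camera band contributes to each
# receptor of an imagined animal. Real weights would have to be fitted
# against measured sensitivities; these numbers are placeholders.
HYPOTHETICAL_WEIGHTS = {
    "uv_receptor":    {"uv": 0.9, "blue": 0.1, "green": 0.0, "red": 0.0},
    "blue_receptor":  {"uv": 0.1, "blue": 0.8, "green": 0.1, "red": 0.0},
    "green_receptor": {"uv": 0.0, "blue": 0.1, "green": 0.8, "red": 0.1},
}


def estimate_receptor_responses(pixel, weights=HYPOTHETICAL_WEIGHTS):
    """Return one estimated response per receptor as a weighted sum of bands."""
    missing = [band for band in BANDS if band not in pixel]
    if missing:
        raise ValueError(f"pixel is missing bands: {missing}")
    return {
        receptor: sum(band_weights[band] * pixel[band] for band in BANDS)
        for receptor, band_weights in weights.items()
    }


# A pixel that reflects strongly in ultraviolet but would look dark to a
# camera that records only red, green, and blue.
uv_bright_pixel = {"uv": 0.8, "blue": 0.1, "green": 0.1, "red": 0.1}
responses = estimate_receptor_responses(uv_bright_pixel)
```

Because all four bands are available for the same pixel at the same moment, the same conversion could in principle be applied frame by frame to a moving scene.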

To test the tool, the researchers compared the quantum efficiency of animal photoreceptors with that of the camera system. Quantum efficiency indicates what fraction of the photons in a given part of the spectrum is captured by the photoreceptors in the eye. Tests were carried out in natural light, both direct and indirect sunlight, as well as under a metal halide lamp. The model animals were a honey bee and a generic bird sensitive to ultraviolet light.
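The idea of a captured fraction of photons can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the bell-shaped sensitivity curves, their peak wavelengths and widths, the flat light source, and the names `gaussian` and `captured_fraction` are all hypothetical and are not taken from the study.

```python
import math

# Sampled wavelengths in nanometres, from ultraviolet to red.
WAVELENGTHS_NM = range(300, 701, 5)


def gaussian(wavelength_nm, peak_nm, width_nm):
    """Hypothetical bell-shaped sensitivity curve that peaks at 1.0."""
    return math.exp(-0.5 * ((wavelength_nm - peak_nm) / width_nm) ** 2)


def captured_fraction(sensitivity, photon_flux, wavelengths_nm=WAVELENGTHS_NM):
    """Fraction of incoming photons captured, summed over sampled wavelengths."""
    captured = sum(sensitivity(w) * photon_flux(w) for w in wavelengths_nm)
    total = sum(photon_flux(w) for w in wavelengths_nm)
    return captured / total


def flat_light(wavelength_nm):
    """Assumed light source emitting equally at every sampled wavelength."""
    return 1.0


def animal_uv_receptor(wavelength_nm):
    """Illustrative ultraviolet receptor; the peak and width are placeholders."""
    return gaussian(wavelength_nm, peak_nm=350, width_nm=30)


def camera_uv_channel(wavelength_nm):
    """Illustrative camera ultraviolet channel; also a placeholder."""
    return gaussian(wavelength_nm, peak_nm=365, width_nm=30)


animal_fraction = captured_fraction(animal_uv_receptor, flat_light)
camera_fraction = captured_fraction(camera_uv_channel, flat_light)
# The closer these two numbers are across many light sources, the better
# the camera channel stands in for the animal's receptor.
difference = abs(animal_fraction - camera_fraction)
```

Repeating the comparison under different light sources, as the researchers did with sunlight and a lamp, would mean swapping `flat_light` for a function describing each source.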

The scientists found that the resulting data agreed well with reference colors but was less accurate when filming feathers, eggs, petals, and foliage. The biologists attributed this discrepancy to the fact that perceived color depends on many factors, including an object's shape, texture, and physical color.

“Modern methods of sensory ecology allow us to understand how animals may see static scenes, but animals often make important decisions about moving objects (e.g., detecting food items, assessing the display of a potential mate, etc.). Here, we provide hardware and software tools for ecologists and cinematographers that can capture and display the colors that animals perceive,” said study co-author Daniel Hanley.

The main advantage of the new device is that all of the camera's color channels are recorded simultaneously, which makes it possible to simulate what animals see. In addition, this method of shooting lets scientists understand how an animal's view of its natural environment is altered by changing lighting, glare, and the refraction of light from objects (for example, from the iridescent surface of a feather or from a flower swaying in the wind).

Source: Port Altele

As an experienced journalist and author, Mary has been reporting on the latest news and trends for over 5 years. With a passion for uncovering the stories behind the headlines, Mary has earned a reputation as a trusted voice in the world of journalism. Her writing style is insightful, engaging and thought-provoking, as she takes a deep dive into the most pressing issues of our time.