Artificial intelligence has stopped being the future and has become the present, a reality that makes our lives much easier and that we can now use locally thanks to Chat with RTX. Yes, you read that right: this solution runs locally, which translates into complete security and privacy. In addition, it is so well optimized that it works without problems on GeForce RTX 30 and 40 series GPUs, both desktop and laptop.

What is Chat with RTX?

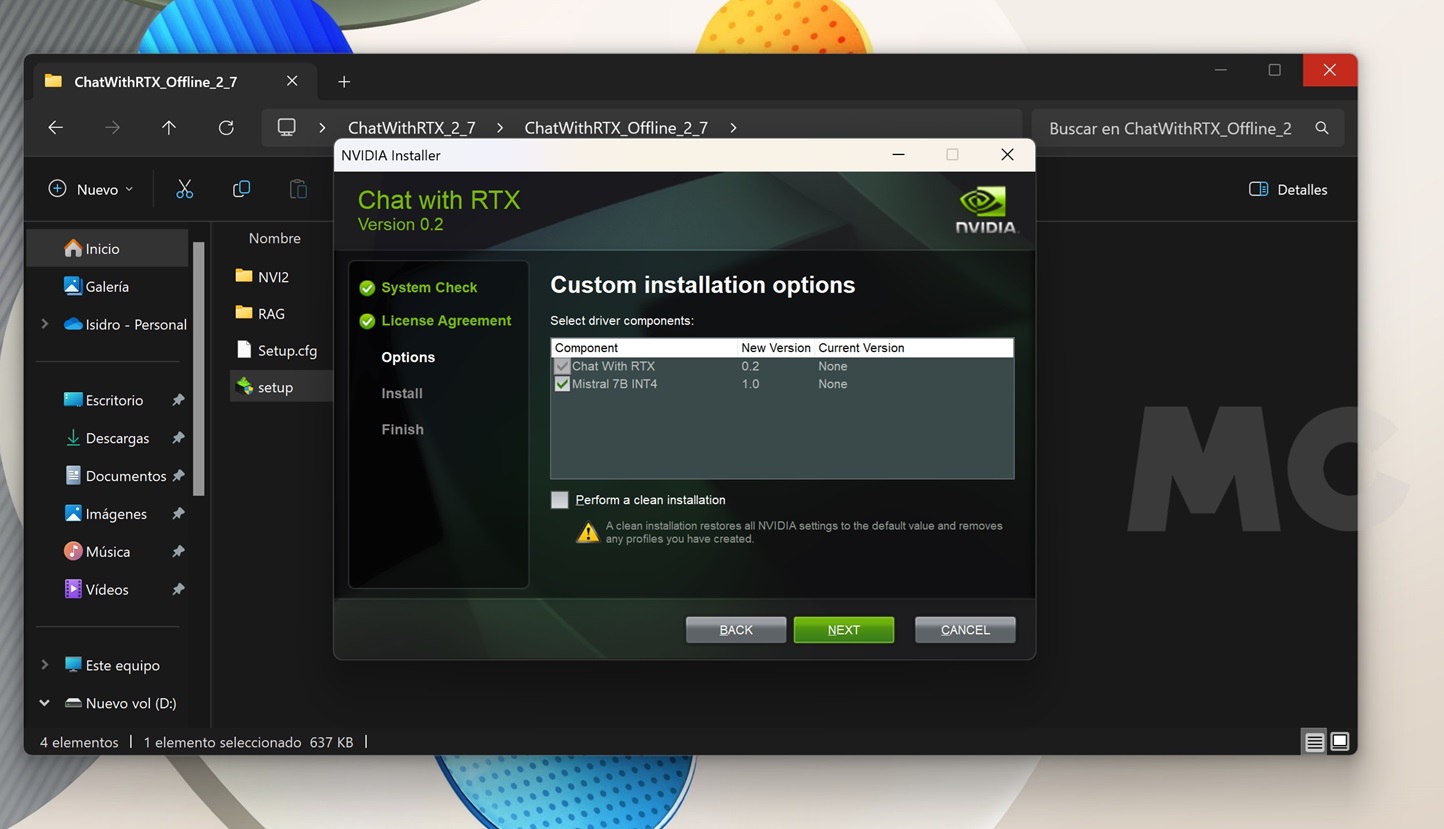

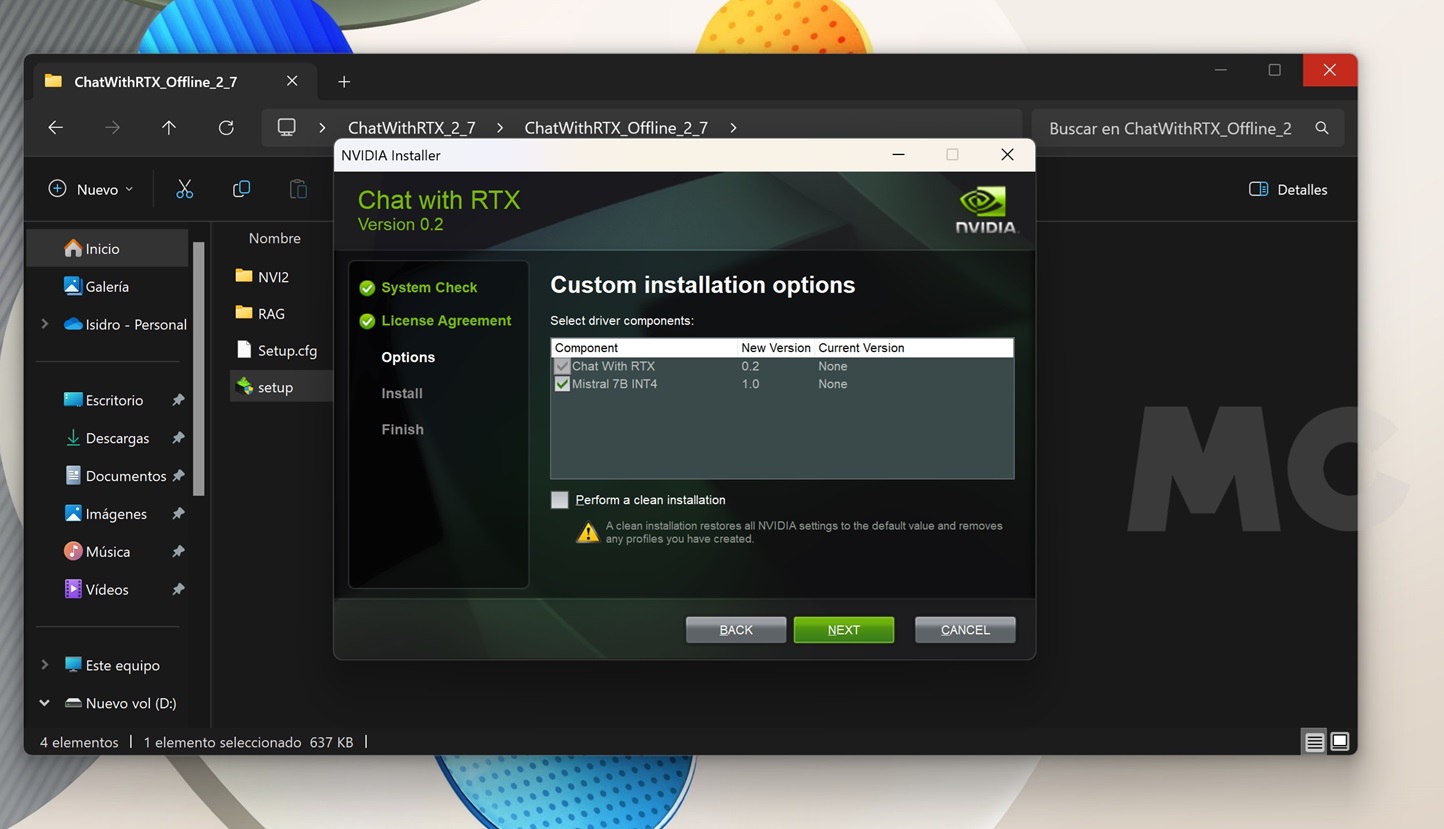

It’s a completely free tech demo, now available for download, that integrates a customizable chatbot. It can work with Llama2 13B INT4 and with Mistral 7B INT4, two of the most popular AI language models today. The former has 13 billion parameters and the latter 7 billion (remember that one Anglo-Saxon billion equals 1,000 million in the European convention).
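To put those parameter counts in perspective, a rough back-of-the-envelope estimate shows why INT4 matters for consumer GPUs. The sketch below assumes each INT4 weight takes 4 bits (half a byte) and counts only the weights; the context cache and runtime overhead are ignored, so real memory use will be higher. The function name is purely illustrative.

```python
def int4_weight_size_gb(num_parameters: int) -> float:
    """Approximate size of the model weights alone, in GB (1 GB = 10**9 bytes)."""
    # Assumption: 4 bits (0.5 bytes) per weight, with no extra quantization metadata.
    bytes_per_parameter = 0.5
    return num_parameters * bytes_per_parameter / 1e9


llama2_13b = int4_weight_size_gb(13_000_000_000)
mistral_7b = int4_weight_size_gb(7_000_000_000)

print(f"Llama2 13B INT4: ~{llama2_13b:.1f} GB of weights")
print(f"Mistral 7B INT4: ~{mistral_7b:.1f} GB of weights")
```

Under these assumptions the weights alone come to roughly 6.5 GB and 3.5 GB respectively.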

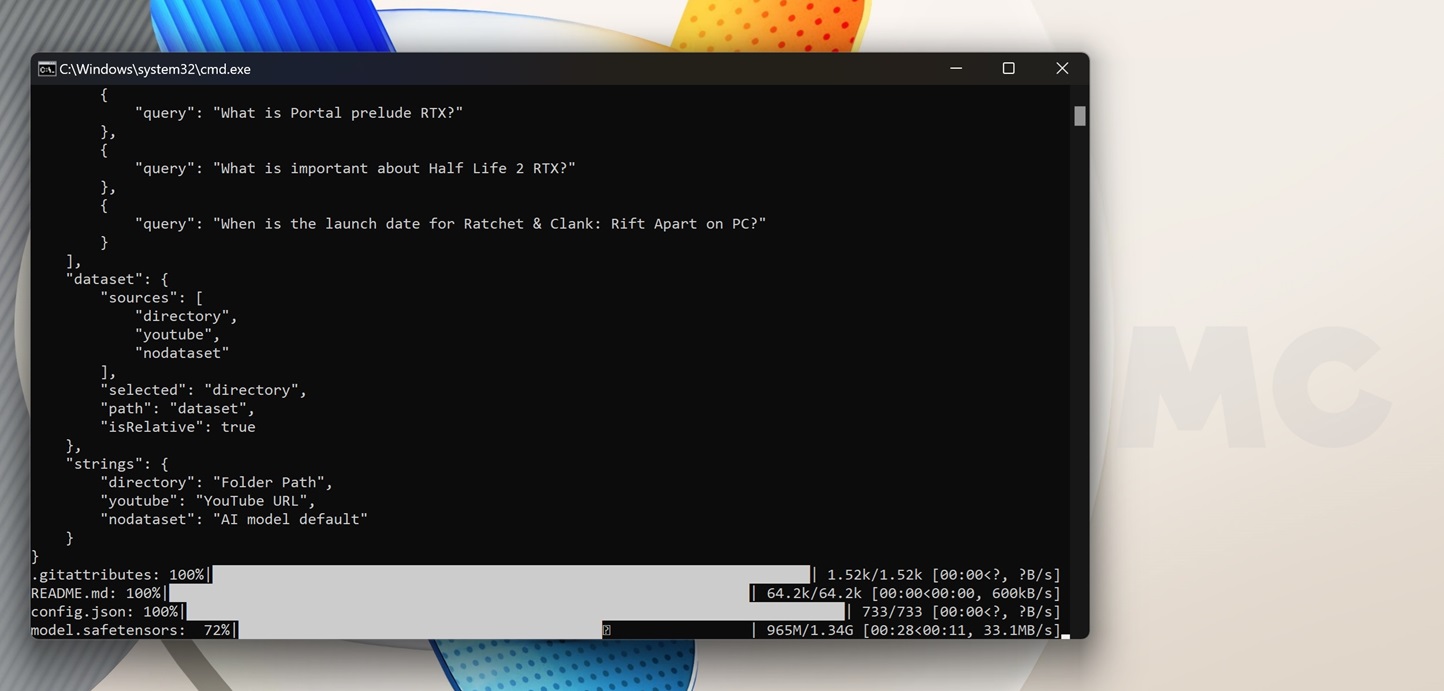

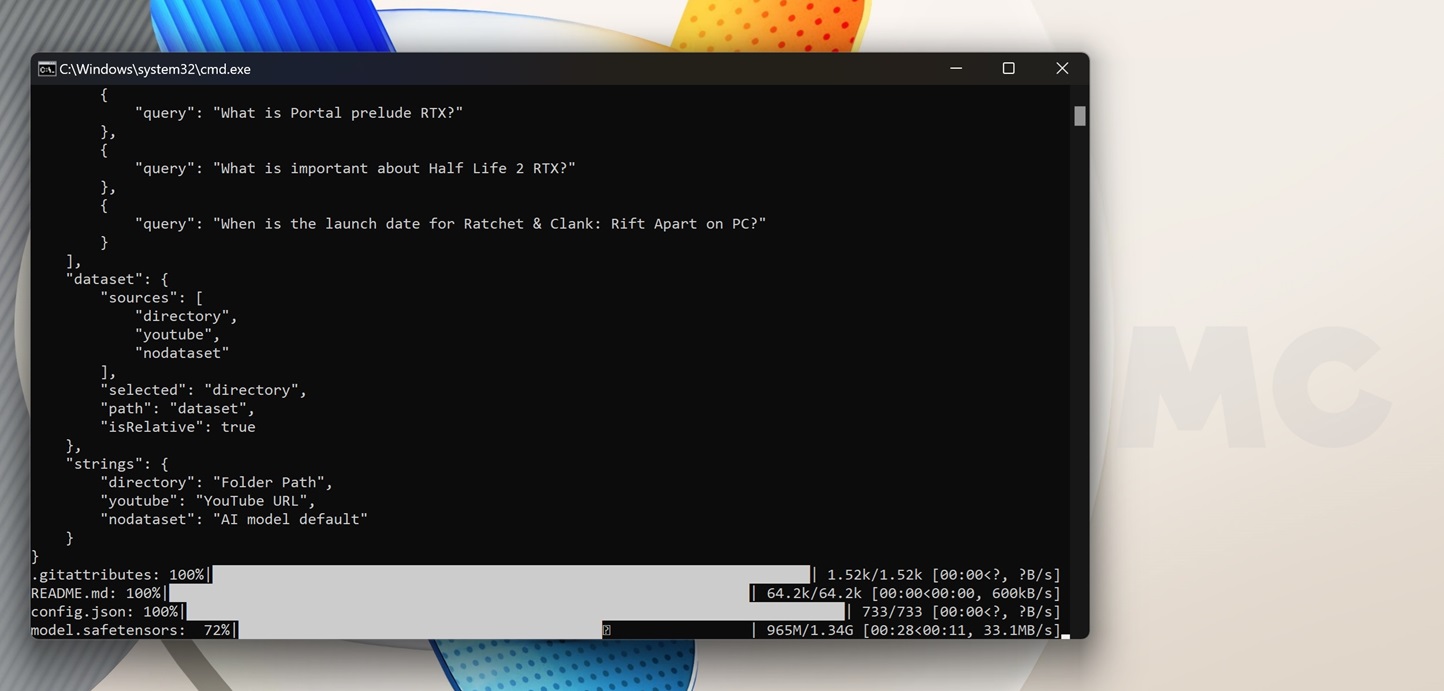

Chat with RTX uses Retrieval-Augmented Generation (RAG) to improve the accuracy and reliability of generative AI models with data obtained from external sources. The software also uses NVIDIA TensorRT-LLM, an easy-to-integrate library that makes optimal use, in AI-related tasks, of the Tensor cores included in GeForce RTX graphics cards, and that can multiply performance by four.
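The general idea behind RAG can be illustrated with a toy example: pick the passage that best matches the question, then hand it to the model as context. This is only a conceptual sketch and not how Chat with RTX is implemented; a real system would use embeddings and a vector index, whereas here the retrieval step is a simple word-overlap count, and all function names are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str]) -> str:
    """Return the document that shares the most words with the question."""
    question_words = tokenize(question)
    return max(documents, key=lambda doc: len(question_words & tokenize(doc)))


def build_prompt(question: str, documents: list[str]) -> str:
    """Prepend the retrieved passage so the model can ground its answer in it."""
    context = retrieve(question, documents)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


documents = [
    "Chat with RTX runs on GeForce RTX 30 and 40 series GPUs.",
    "Mistral 7B is a language model with 7 billion parameters.",
]
question = "How many parameters does Mistral 7B have?"
prompt = build_prompt(question, documents)
print(prompt)
```

The resulting prompt is what would be sent to the language model; the generation step itself is left out of the sketch.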

What do I need to use it?

- A GeForce RTX 30 or 40 series graphics card with at least 8 GB of graphics memory.

- 100 GB of free space. This may vary depending on the language models we install.

- Windows 10 or Windows 11 as the operating system.

- Graphics drivers updated to the latest version available.
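As a quick illustration, the checklist above can be expressed as a small function. This is a sketch under assumptions: the `System` fields are filled in by hand rather than detected from the hardware, the names are hypothetical, and the driver version is not checked because the list does not give a specific version number.

```python
from dataclasses import dataclass


@dataclass
class System:
    gpu_series: int    # 30 or 40 for GeForce RTX 30/40 series cards
    vram_gb: int       # graphics memory in GB
    free_disk_gb: int  # free storage in GB
    os_name: str       # e.g. "Windows 11"


def unmet_requirements(system: System) -> list[str]:
    """Return a description of every requirement the system fails to meet."""
    problems = []
    if system.gpu_series not in (30, 40):
        problems.append("GeForce RTX 30 or 40 series GPU required")
    if system.vram_gb < 8:
        problems.append("at least 8 GB of graphics memory required")
    if system.free_disk_gb < 100:
        problems.append("about 100 GB of free space required")
    if system.os_name not in ("Windows 10", "Windows 11"):
        problems.append("Windows 10 or Windows 11 required")
    return problems


laptop = System(gpu_series=40, vram_gb=8, free_disk_gb=200, os_name="Windows 11")
print(unmet_requirements(laptop) or "All listed requirements are met")
```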

How Chat with RTX works

The process is really easy, and I believe that is precisely one of the great strengths of this tech demo: it brings chatbot-style artificial intelligence even to less experienced users, thanks to an automated installation and configuration process and a simple, intuitive interface.

We need to download it using this link and start the installation process which, as I said, is automated. Once it finishes, a shortcut icon will appear on the desktop; double-clicking it opens a command console, which automatically takes us to a tab in our default browser. There we can start enjoying it.

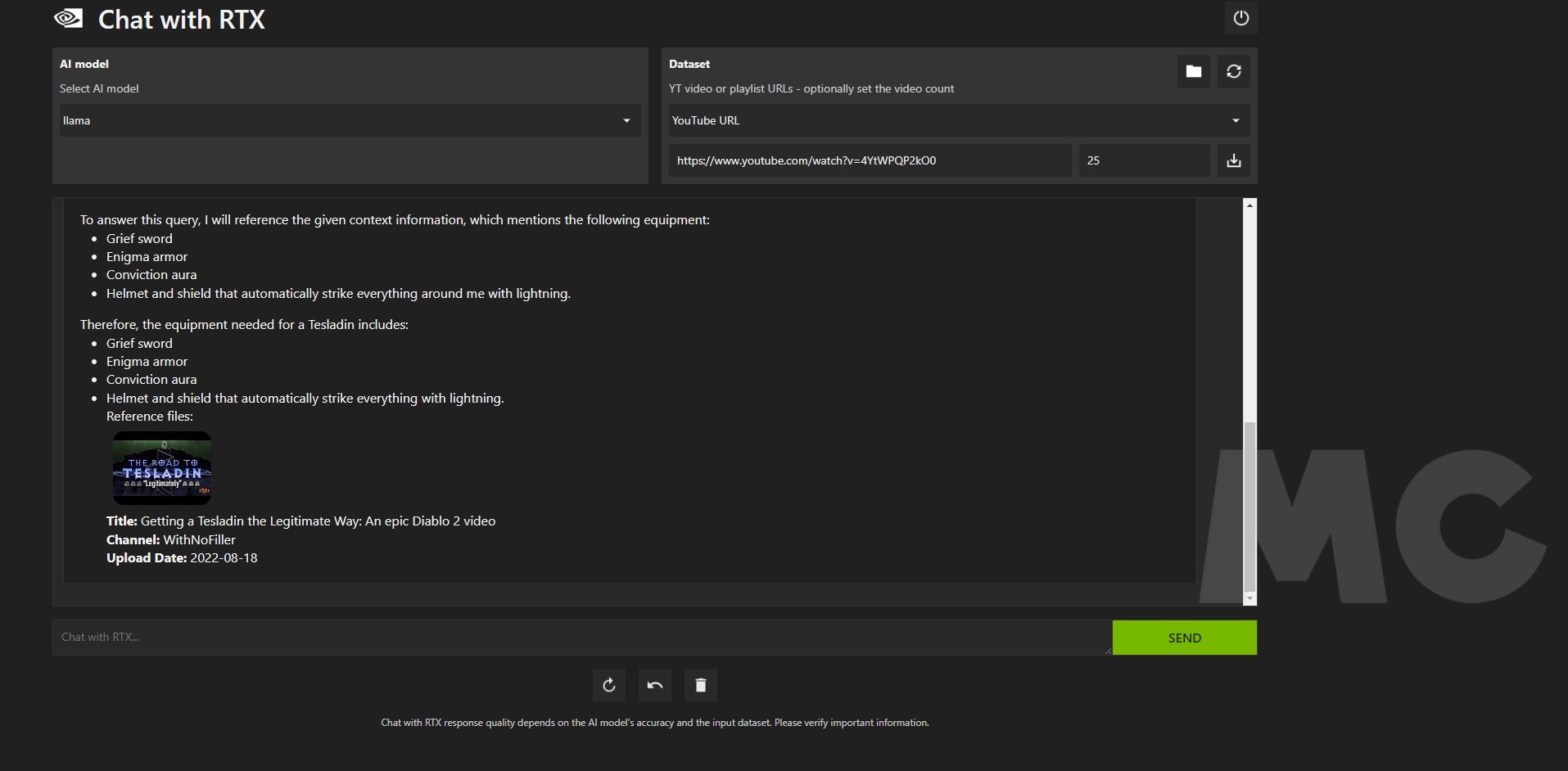

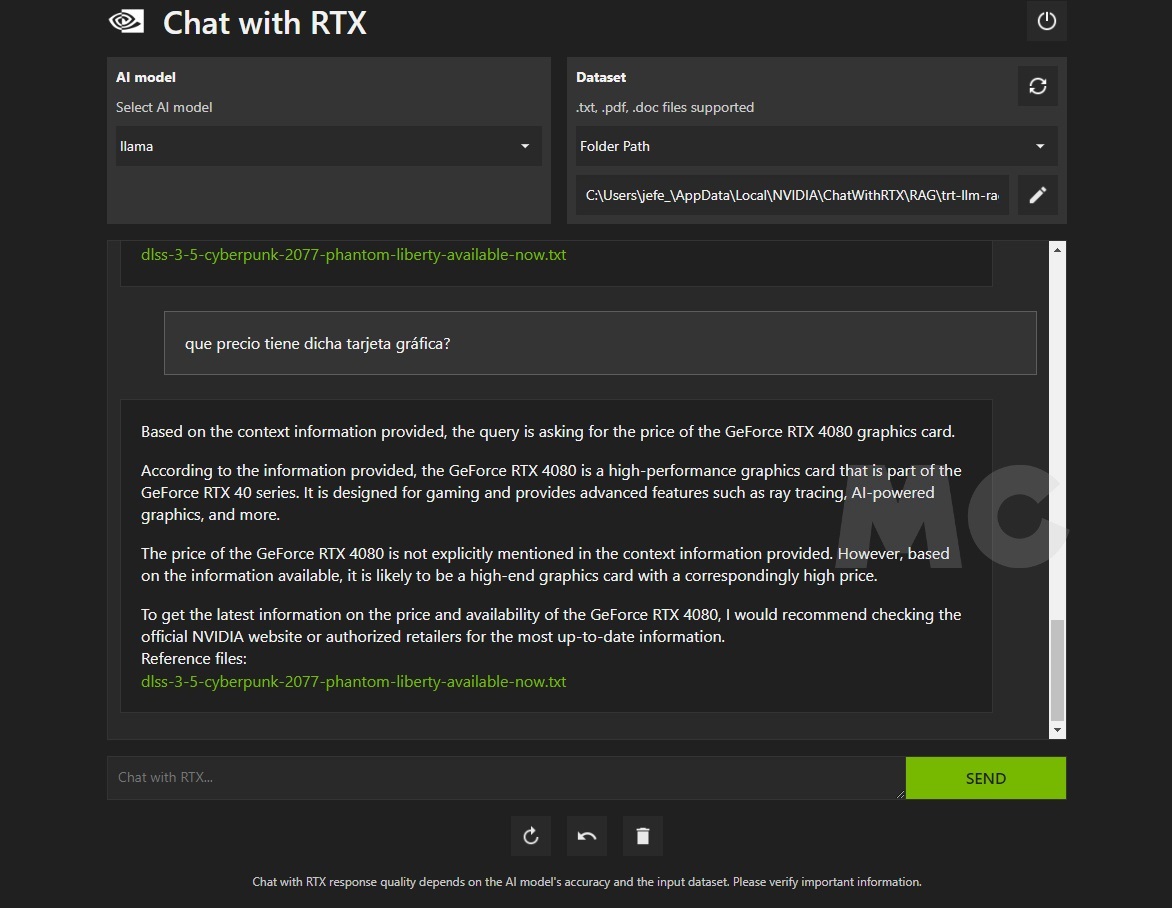

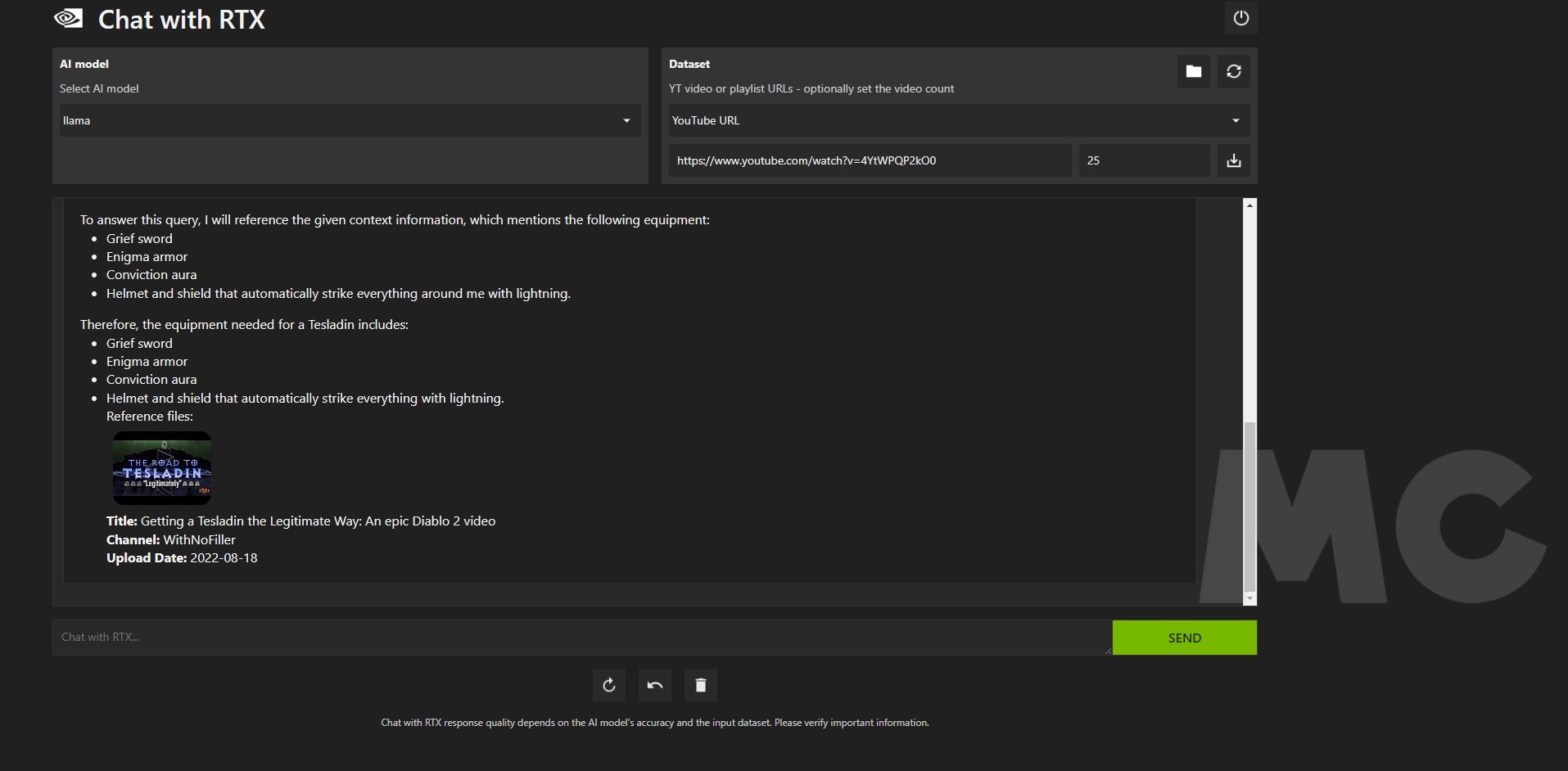

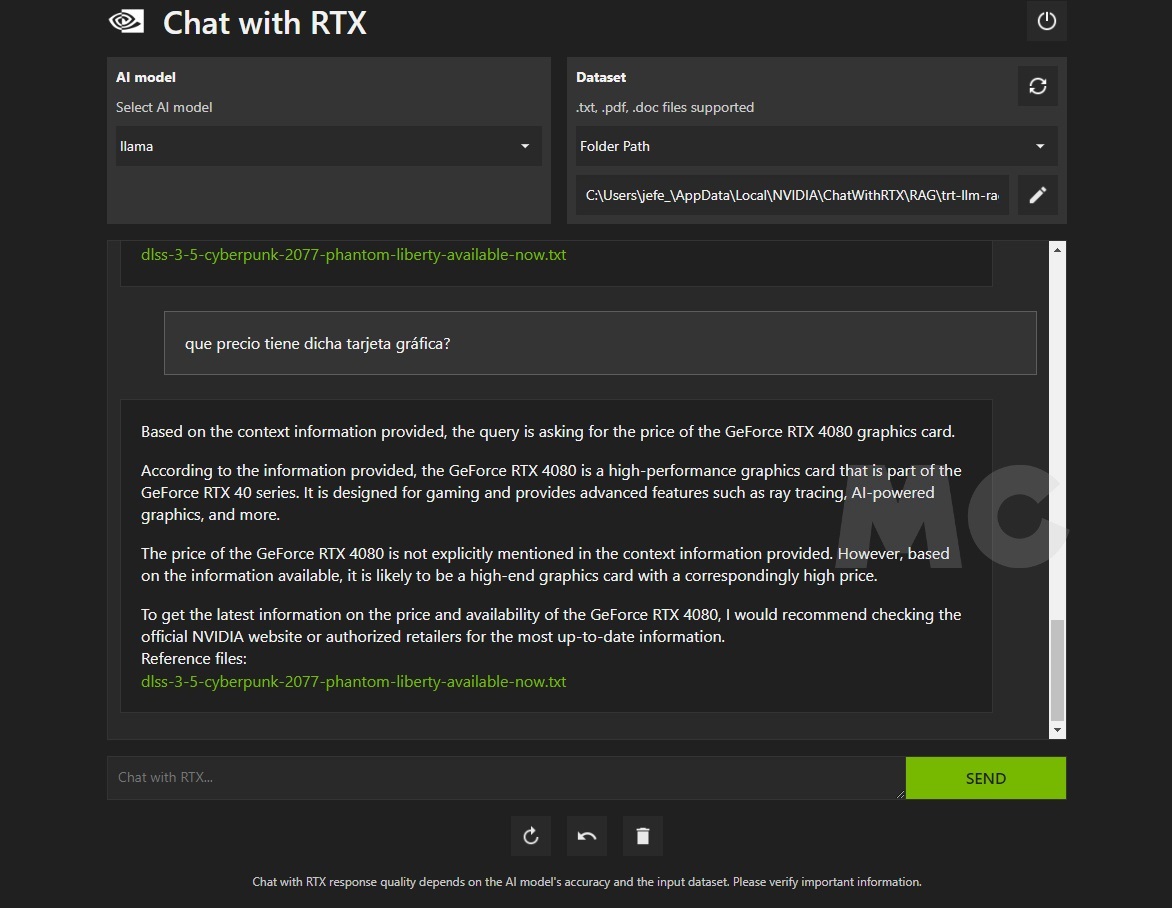

The interface is very simple: at the bottom there is a section where we can type any question or choose from suggestions tailored to the model and the pre-installed dataset. We can also switch between the available AI models with a single click: Llama2 if we use a very powerful PC, or Mistral if we have a more modest configuration.

In the upper right corner we can select the data source that will “feed” the AI model. This source can be the dataset pre-installed with Chat with RTX, but we can also switch to our own set of .txt, .pdf and .doc files, or even use links to YouTube videos and playlists.
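Before pointing the tool at a folder, it can be handy to preview which files match the formats mentioned above. The following is a minimal sketch, not part of Chat with RTX: the helper name is hypothetical, the extension list is taken from this article, and the demo creates a temporary folder just so the example runs on its own.

```python
import tempfile
from pathlib import Path

# File types mentioned in the article; matched case-insensitively.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc"}


def find_supported_files(folder: Path) -> list[Path]:
    """Recursively list the files in `folder` whose extension is supported."""
    return sorted(
        path
        for path in folder.rglob("*")
        if path.is_file() and path.suffix.lower() in SUPPORTED_EXTENSIONS
    )


# Demo with a throwaway folder containing two supported files and one image.
with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    for name in ("notes.txt", "manual.PDF", "photo.png"):
        (folder / name).write_text("sample")
    found_names = [path.name for path in find_supported_files(folder)]

print(found_names)
```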

My first contact with Chat with RTX

I wanted to spend a few hours trying this tech demo on two very different machines to offer you a realistic view of what you can expect from it, both with a very powerful hardware configuration and with a more modest one that is affordable for the average consumer. On my personal PC, equipped with a Ryzen 7 7800X3D, 64 GB of RAM and a GeForce RTX 4090 with 24 GB of graphics memory, I was able to use Llama2 13B without any problems, and the experience was fantastic.

Startup time on this machine is just a few seconds. We can write questions in Spanish or English in a completely natural way, make them as simple or as complicated as we like, and the response time is practically immediate. Response accuracy is excellent with all the available data sources, and here is one example to prove it.

When I used a link to a Diablo 2 Resurrected video showing how to build the “Tesladin”, a Paladin variant, Chat with RTX was able to explain what equipment I should use to create the build according to the video. Yes, it’s impressive, and transcribing the video beforehand only took a few seconds. Its potential is beyond any doubt, the performance is excellent, and under Llama2 13B it is even able to follow the conversation by context and link back to previous questions.

The second computer I used to test Chat with RTX was an ASUS Vivobook Pro with an Intel Core Ultra 9 185H, 16 GB of RAM and a GeForce RTX 4060 Mobile graphics card with 8 GB of graphics memory. In this case I was limited to Mistral 7B, but performance was also excellent and the user experience remained very good. NVIDIA has done a great job with this solution, which offers very interesting value to both individuals and professionals.