Meta uses two new supercomputers to train generative AI

- March 13, 2024

Training generative AI requires a great deal of computing power, and Meta reports that it has added substantial capacity. The company hopes this will let it build competitive LLMs.

To train models like GPT-4, the model behind ChatGPT, companies need an enormous amount of computing power. With Llama 3, Meta wants to develop a large language model that rivals the best of OpenAI and Google. That’s why the company has brought out the big guns.

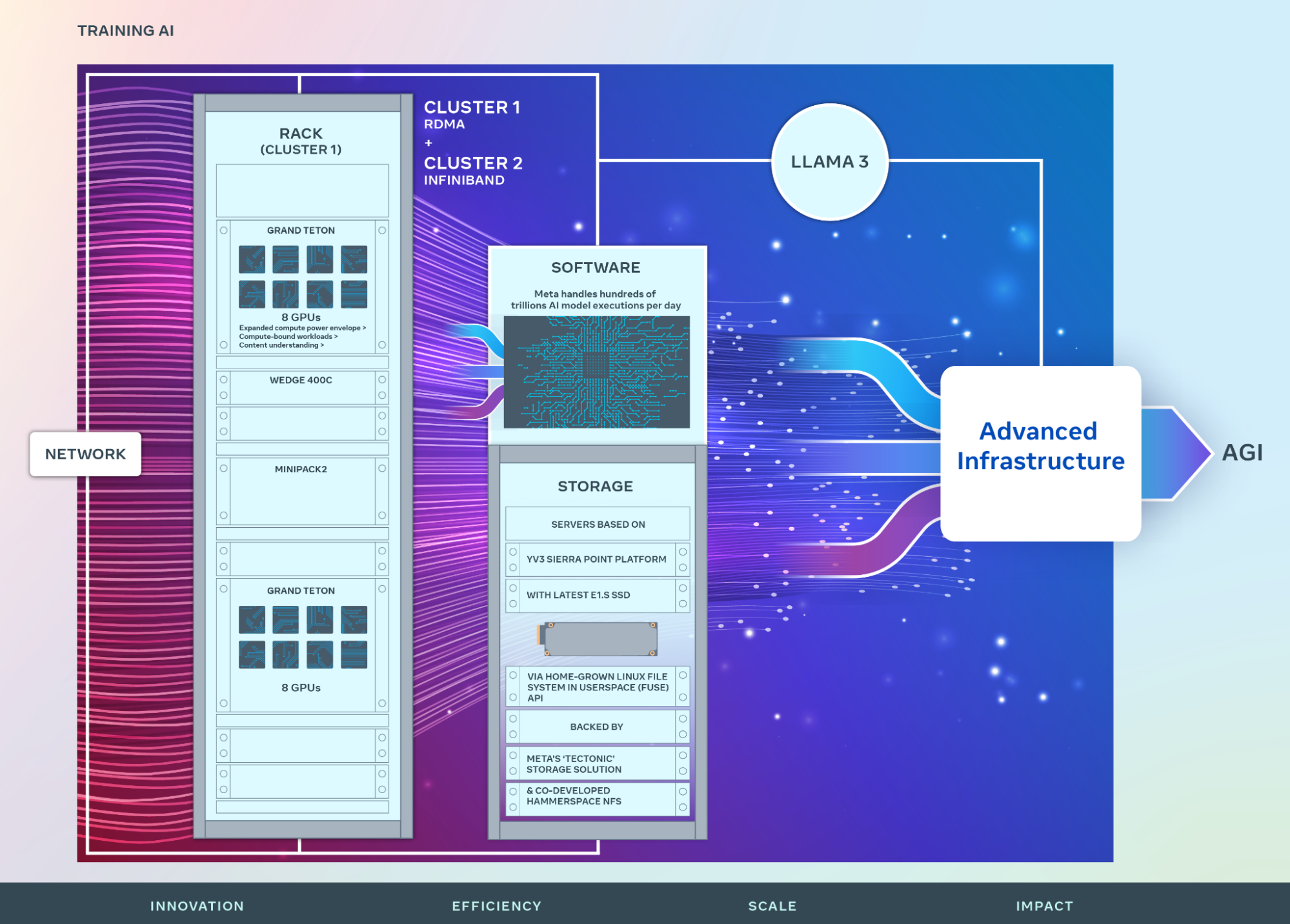

Meta says it has built two huge GPU clusters in its own data center, each with 24,576 GPUs. For these, Meta bought Nvidia's most powerful chips: the AI supercomputers run on H100 accelerators. The clusters are a real step forward compared to an earlier system with around 16,000 older Nvidia A100 accelerators.

Meta has its own open GPU hardware platform, called Grand Teton, which it uses to support large AI workloads. Meta combines it with its own Open Rack architecture, which offers more flexibility when installing the hardware.

The two new clusters are not an endpoint. Meta wants to acquire as many Nvidia H100 chips as possible and hopes to have 350,000 accelerators operational by the end of the year.

It’s unclear when Meta plans to have Llama 3 ready, but the clusters will be kept busy in the near future. Once the model is finished, Meta also wants to improve its existing models. In the long term, these de facto supercomputers should support the development of more human-like AI.

Source: IT Daily

As an experienced journalist and author, Mary has been reporting on the latest news and trends for over 5 years. With a passion for uncovering the stories behind the headlines, Mary has earned a reputation as a trusted voice in the world of journalism. Her writing style is insightful, engaging and thought-provoking, as she takes a deep dive into the most pressing issues of our time.