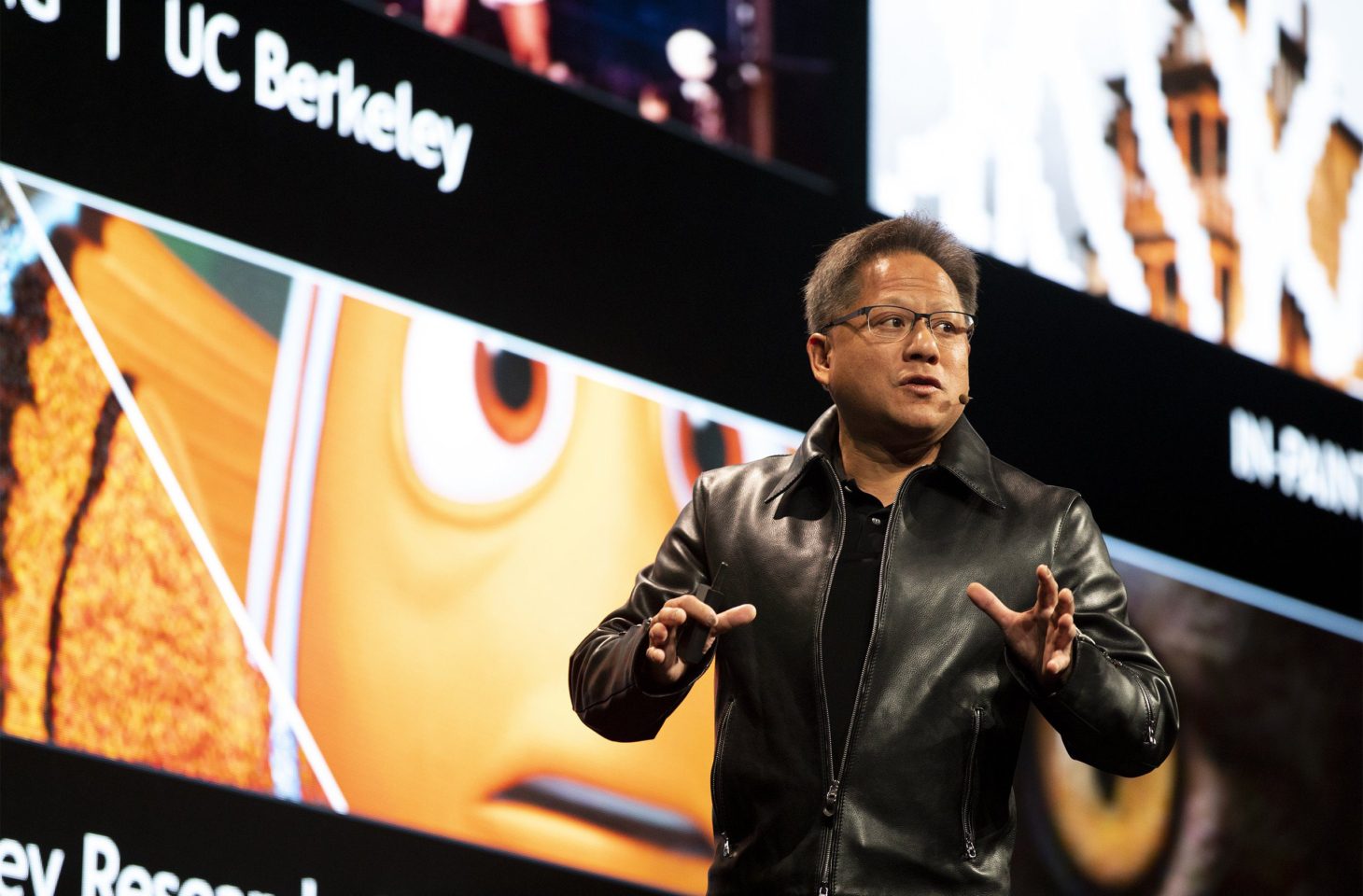

Jensen Huang told the press at the annual GTC conference that artificial general intelligence (AGI) will reach a reasonable level within five years and that AI hallucinations are solvable.

The power of artificial general intelligence worries many people and raises questions for AI specialists. Will these models surpass human cognitive abilities? According to a TechCrunch report from the GTC conference, Nvidia CEO Jensen Huang seemed ready to shed light on some of these issues.

Five years to a reasonable level of AI

Artificial intelligence could one day perform a wide range of cognitive tasks at a level that approaches or even exceeds that of humans. Many people fear this, and AI experts are often asked about it in interviews. Although Huang is reluctant to answer such questions, he addressed the topic with the press during the GTC conference.

“If we specify artificial general intelligence as something very specific, a set of tests on which a software program can do very well, or perhaps 8% better than most people, then I think we’ll get there within five years,” Huang explains. He adds that any prediction of when a reasonable level of AGI will be reached depends on how the concept is defined.

AI hallucinations

An AI hallucination occurs when a model gives an answer that sounds plausible but is not based on fact. Asked whether hallucinations can be solved, Huang said they can be caught by examining the answers carefully. “For every answer, you have to look up the answer,” Huang says. The facts in a source should be compared with known truths. If the answer is factually incorrect, the entire source should be discarded and the next one consulted.

Finally, Huang suggests that for business-critical answers, such as health advice, checking multiple known sources of truth might be the way to go.
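
The checking procedure Huang describes can be illustrated with a small sketch. This is not Nvidia's implementation or any real product's API. The `Source` class, the `known_truths` dictionary and both function names are hypothetical and exist only for this example. The sketch assumes each source can be reduced to simple topic-to-fact pairs, and that returning `None` is an acceptable way to signal that no trustworthy source was found.

```python
from dataclasses import dataclass, field


@dataclass
class Source:
    """Hypothetical stand-in for a document the model retrieves."""
    name: str
    claims: dict = field(default_factory=dict)  # topic -> stated fact


def is_consistent(source, known_truths):
    """True if no claim in the source contradicts a known truth."""
    return all(
        known_truths[topic] == fact
        for topic, fact in source.claims.items()
        if topic in known_truths
    )


def answer_from_sources(topic, sources, known_truths):
    """Look up an answer, skipping any source that fails the fact check."""
    for source in sources:
        if not is_consistent(source, known_truths):
            # One wrong fact is enough: discard the entire source
            # and move on to the next one.
            continue
        if topic in source.claims:
            return source.claims[topic]
    # Assumption for this sketch: no trusted source means no answer.
    return None


if __name__ == "__main__":
    known_truths = {"water_boils_c": "100"}
    sources = [
        Source("blog", {"water_boils_c": "90", "capital_of_france": "Lyon"}),
        Source("encyclopedia", {"water_boils_c": "100", "capital_of_france": "Paris"}),
    ]
    print(answer_from_sources("capital_of_france", sources, known_truths))
```

In this sketch the first source is rejected as a whole because one of its facts contradicts a known truth, so its other claims are never used. For business-critical questions, the same check could be run against several independent sets of known truths.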