Google created an AI to check other AI’s answers

- April 1, 2024

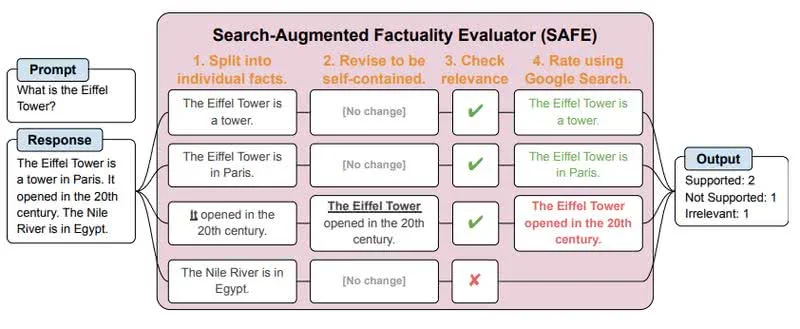

Google has developed an artificial intelligence system called the Search-Augmented Factuality Evaluator (SAFE), whose task is to find errors in the responses of services based on large language models (LLMs), such as ChatGPT.

LLMs are used for a variety of purposes, including writing scientific papers, but they often make mistakes, provide unreliable information, or even insist that invented facts are true (a phenomenon known as hallucination). The new system from the Google DeepMind team breaks a neural network's output into individual facts, creates a search engine query for each one, and tries to find confirmation of the presented information.
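To make the three steps described above concrete (split a response into facts, turn each fact into a search query, look for supporting evidence), here is a toy sketch. It is not SAFE's published code: the real system uses an LLM at each step and queries Google Search, whereas the naive sentence splitter, the query builder and the in-memory `SNIPPETS` "search index" below are hypothetical stand-ins chosen only to illustrate the flow.

```python
import re
from dataclasses import dataclass

# Hypothetical stand-in for a search engine: a tiny in-memory list of snippets.
# SAFE itself sends queries to Google Search and reasons over results with an LLM.
SNIPPETS = [
    "Paris is the capital of France.",
    "The Eiffel Tower was completed in 1889.",
]


@dataclass
class Verdict:
    fact: str
    query: str
    supported: bool


def split_into_facts(response: str) -> list[str]:
    """Naive splitter: treat each sentence as one fact (SAFE uses an LLM here)."""
    parts = re.split(r"(?<=[.!?])\s+", response.strip())
    return [p for p in parts if p]


def build_query(fact: str) -> str:
    """Hypothetical query builder: strip punctuation and lowercase the fact."""
    return re.sub(r"[^\w\s]", "", fact).lower()


def search(query: str) -> list[str]:
    """Toy search: return snippets that contain every word of the query."""
    words = set(query.split())
    return [s for s in SNIPPETS if words <= set(build_query(s).split())]


def check_response(response: str) -> list[Verdict]:
    """Check each extracted fact and mark it supported if any snippet matches."""
    verdicts = []
    for fact in split_into_facts(response):
        query = build_query(fact)
        verdicts.append(Verdict(fact, query, supported=bool(search(query))))
    return verdicts


if __name__ == "__main__":
    answer = "Paris is the capital of France. The Eiffel Tower was completed in 1925."
    for v in check_response(answer):
        print(v.supported, v.fact)
```

Run as a script, it marks the first sentence as supported and the second (with the wrong year) as unsupported. Exact word matching is far cruder than SAFE's LLM-based judgment; it is used here only so the example stays self-contained.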

During testing, SAFE checked about 16,000 individual facts from the output of large language model services, and the researchers compared its verdicts with those of human annotators who had checked the same facts manually. SAFE's findings agreed with the human ratings 72% of the time, and when the researchers examined a sample of the cases where the two disagreed, SAFE turned out to be correct 76% of the time. The team also used SAFE to compare models from the Gemini, ChatGPT, Claude and PaLM-2 families.

The SAFE code is published on GitHub and is available to anyone who wants to check the reliability of LLM answers.

Source: Port Altele

As an experienced journalist and author, Mary has been reporting on the latest news and trends for over 5 years. With a passion for uncovering the stories behind the headlines, Mary has earned a reputation as a trusted voice in the world of journalism. Her writing style is insightful, engaging and thought-provoking, as she takes a deep dive into the most pressing issues of our time.