Opera now supports running AI locally

- April 4, 2024

Opera's interest in artificial intelligence was amply demonstrated over the past year. It was not the first browser to make this leap, which Edge did with the presentation of the new Bing, but it was the first to respond to that move from Redmond, announcing the integration of ChatGPT in its sidebar less than a week after Microsoft's announcement.

About a month later, Opera started deploying the first AI integration in the browser, and by the middle of last year the long-awaited integration had debuted in the browser's sidebar. It doesn't stop there, because Opera announced at the end of January that it is working on a special version of its browser for iOS in which the integration of artificial intelligence will be much deeper, to the extent that it will be part of the application itself.

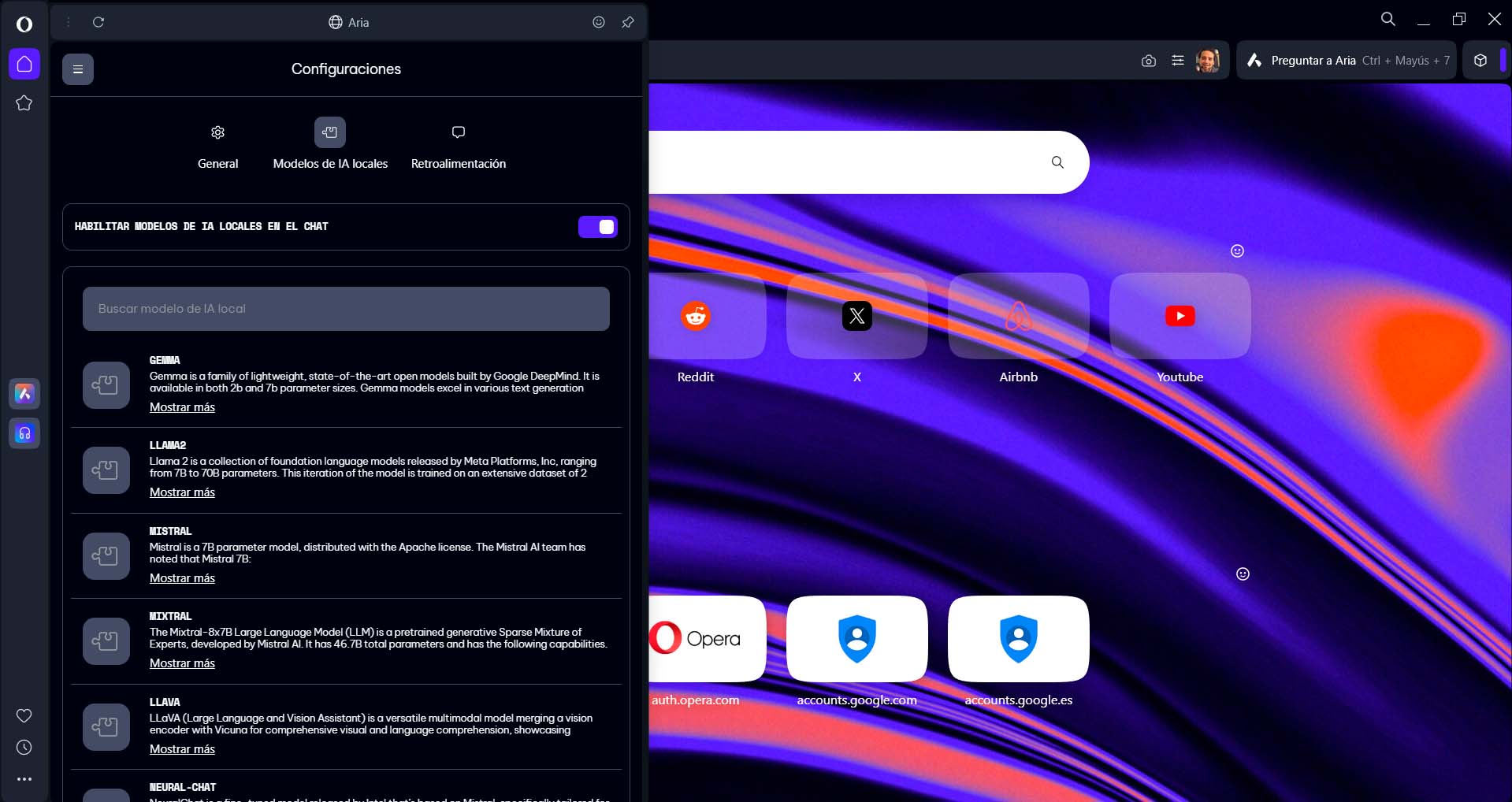

Now we find ourselves with one more step in that direction, and it is no small one, because Opera is already testing the use of LLMs locally. Yes, you read that right: the intention of those responsible for the browser is to complement Aria, which runs in the cloud and has been available for some time, with the ability to download and run a wide range of AI models locally, which brings the advantages of not depending on the cloud.

This feature is currently available in the developer version of Opera One, which is available for download. After installing it and opening Aria (using the button marked with an A in the left sidebar), you will need to log in with your Opera account (this step is mandatory). You will then see a button at the top of the sidebar labeled “Select Local AI Model”, which takes you to the settings where you can download the model (or models) you want to use from a very wide selection.

Once you’ve downloaded and installed a model in Opera, you can start chatting with it, and if you’ve installed more than one, a selector will appear in the same section where you can choose the one you want to use at any time. You do have to keep two things in mind, though. The first is that, as you may have already guessed from the version of Opera you had to download, this feature is still in the testing phase, so its operation may be erratic. The second is that, as we already told you in the tutorial on LM Studio, running an LLM locally is quite a demanding task, so not all systems can handle it.

Source: Muy Computer

Donald Salinas is an experienced automobile journalist and writer for Div Bracket. He brings his readers the latest news and developments from the world of automobiles, offering a unique and knowledgeable perspective on the latest trends and innovations in the automotive industry.