AI has influenced the development of AMD APUs and Intel processors

- April 9, 2024

Its impact will only grow as AI’s performance demands increase, pushing NPUs toward increasingly complex designs that take up more silicon area in next-generation AMD APUs and Intel processors. Intel made this very clear when it confirmed that running Microsoft Copilot locally will require an NPU capable of 40 TOPS.

Today, the most powerful NPU paired with an x86 processor is the one AMD uses in its Ryzen 8040 APUs, which delivers 16 TOPS. That figure is far from the 40 TOPS minimum, but according to AMD itself it will triple in its next-generation APUs, known as Strix Point. This means their NPUs will be capable of 48 TOPS.
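The arithmetic behind those figures can be sketched in a few lines of Python. This is only an illustration using the numbers quoted above. The constant and function names are hypothetical, and the threshold check is a simple comparison, not an official Microsoft or Intel validation method.

```python
# Figures quoted in the article, in TOPS (trillions of operations per second).
COPILOT_MIN_TOPS = 40        # minimum cited for running Copilot locally
RYZEN_8040_NPU_TOPS = 16     # NPU in the current Ryzen 8040 APUs
STRIX_POINT_MULTIPLIER = 3   # "multiplied by three", according to AMD


def meets_copilot_requirement(npu_tops, minimum=COPILOT_MIN_TOPS):
    """Return True if an NPU reaches the quoted minimum (hypothetical helper)."""
    return npu_tops >= minimum


# Projected Strix Point figure: 16 TOPS tripled.
strix_point_npu_tops = RYZEN_8040_NPU_TOPS * STRIX_POINT_MULTIPLIER

for name, tops in [
    ("Ryzen 8040", RYZEN_8040_NPU_TOPS),
    ("Strix Point", strix_point_npu_tops),
]:
    status = "meets" if meets_copilot_requirement(tops) else "falls short of"
    print(f"{name}: {tops} TOPS {status} the {COPILOT_MIN_TOPS} TOPS minimum")
```

On these numbers, the Ryzen 8040 NPU sits 24 TOPS below the threshold, while the projected Strix Point NPU clears it by 8 TOPS.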

The NPU has become necessary because, according to Microsoft itself, it is the most efficient way to run Copilot locally, thanks to its good performance and low power consumption. However, adding this component comes at a cost in silicon area that will only grow over time, and it has obviously forced AMD and Intel to change and adjust how they develop their products.

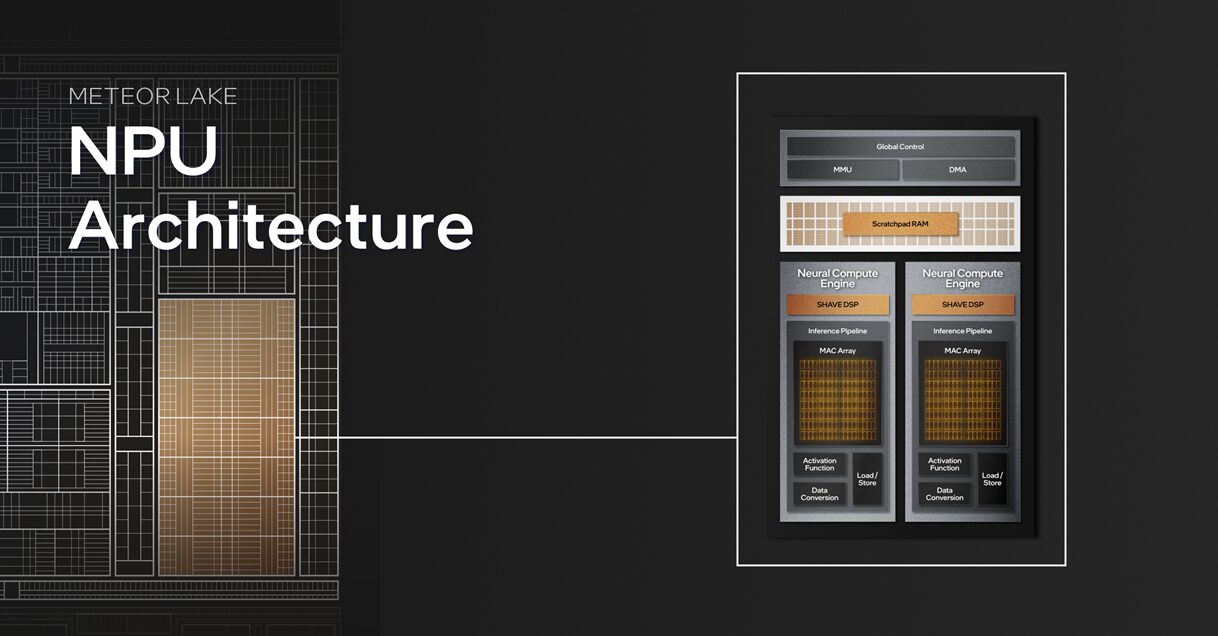

In Intel’s case, we already know that the company has adopted a fully disaggregated multi-tile design with four tiles in total: CPU, GPU, IO and SoC. The NPU sits in the SoC tile and occupies a considerable amount of silicon area. The same is true of AMD’s Ryzen 7040 and 8040 APUs, although these use a monolithic design and integrate the NPU into a single silicon die.

To incorporate NPUs, both Intel and AMD have had to sacrifice considerable silicon area that they could have used for other things, such as more CPU cores, a more powerful GPU or more cache.

AMD’s Strix Point APUs are said to have had a completely different design in their earliest stages, as they were not originally supposed to include a high-performance NPU. Under that original plan, their CPU and GPU were going to be more powerful, and they should have had a larger amount of cache.

Those of you who read us daily already know how important silicon area is. How it is used is key to building increasingly powerful solutions, and prioritizing certain components makes it possible to create processors and APUs specialized for particular tasks. A good example is the Ryzen 7000 with 3D stacked cache, which works around the lack of silicon area in the CPU chiplet by stacking an extra block of L3 cache vertically, making these chips gaming-focused solutions.

By introducing NPUs, we are shaping AI-specialized solutions, but at the same time we are giving up improvements elsewhere in the APU or processor. This explains why Intel’s Meteor Lake processors arrived with a lower maximum core and thread count than Raptor Lake. The trend clearly will not change, which means we will see less notable advances in CPUs and GPUs in favor of AI advances delivered by the larger, more powerful NPUs that will soon be present in AMD APUs and Intel processors.

AI-generated cover image.

Source: Muy Computer

Donald Salinas is an experienced automobile journalist and writer for Div Bracket. He brings his readers the latest news and developments from the world of automobiles, offering a unique and knowledgeable perspective on the latest trends and innovations in the automotive industry.