Meta presents Llama 3, the next generation of its LLM

- April 20, 2024

The LLM war enters a new phase with the introduction of Llama 3, the second major evolution of Meta's language model. It will also play a key role in some of the company's services, since it will be the model used to bring artificial intelligence features to them. On that front, however, we will have to wait and see how quickly they are rolled out, especially in the European Union.

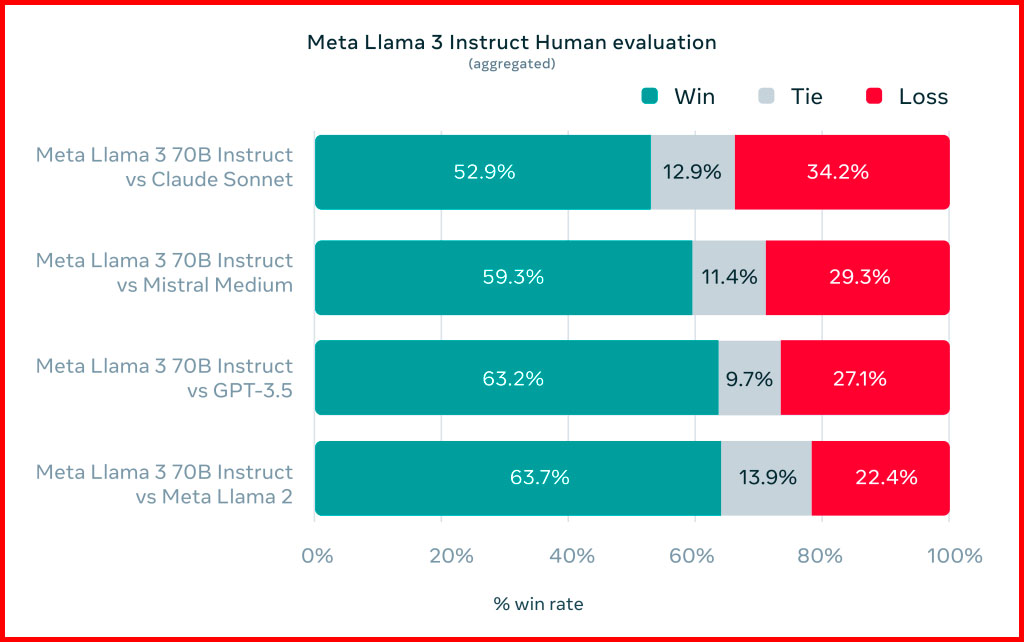

For the launch, Meta announced two versions of Llama 3: 8B (8 billion parameters) and 70B (70 billion parameters). The company has also announced the future arrival of a much more powerful version, the 400B, which, as you will have deduced, has around 400 billion parameters. As we said in our LM Studio tutorial, remember that a parameter is roughly equivalent to a connection in a neural network. That is why we are talking about an incredibly high density for the future 400B model.
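To make the idea of a "parameter" a little more concrete, here is a minimal sketch that counts the connections (weights) and biases of a tiny fully connected network. It is only an illustration: Llama 3 is a transformer, not a plain dense network, and the function name `dense_parameter_count` and the toy layer sizes are assumptions made up for this example.

```python
def dense_parameter_count(layer_sizes):
    """Count the weights and biases of a toy fully connected network.

    Hypothetical helper for illustration only; it does not describe
    Llama 3's actual architecture.
    """
    # One weight per connection between consecutive layers.
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    # One bias per neuron in every layer after the input layer.
    biases = sum(layer_sizes[1:])
    return weights + biases


# Toy network: 4 inputs, 8 hidden neurons, 2 outputs.
toy_total = dense_parameter_count([4, 8, 2])
print(toy_total)

# Sizes quoted in the article, written out as plain integers.
llama3_sizes = {"8B": 8 * 10**9, "70B": 70 * 10**9, "400B": 400 * 10**9}
ratio_400b_to_8b = llama3_sizes["400B"] // llama3_sizes["8B"]
print(ratio_400b_to_8b)
```

The toy network has only a few dozen parameters, which gives a sense of scale when set against the billions quoted for each Llama 3 version.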

These are not Meta's only future plans for Llama 3. According to the official statement, the company also intends to make further improvements, such as new capabilities, a larger context window, better performance and more. I find the context window particularly interesting: remember that with Gemini 1.5 Google managed to expand it to one million tokens, and the company also said it had reached ten million tokens in its tests.

To train Llama 3, Meta claims to have used a whopping 15 trillion+ tokens (15T), all from public sources according to the company, of which just over 5% were not in English. We have read that this is quality data covering just over 30 languages, so the model's answers are expected to be correct in those languages, though probably not as good as in English. To give a little context, recall that the LLM announced by the Government of Spain will be trained with 20% to 25% of its data in Spanish and the other co-official languages of the state.
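The proportions above are easier to judge as absolute numbers. The following back-of-the-envelope sketch uses the figures quoted in the article as lower bounds (15T tokens, 5% non-English, 30 languages). The even split across languages is purely an assumption for illustration; the real distribution has not been given here.

```python
# Figures quoted in the article, treated as lower bounds.
TOTAL_TOKENS = 15 * 10**12      # "15T+"
NON_ENGLISH_PERCENT = 5         # "just over 5%"
LANGUAGES = 30                  # "just over 30 languages"

# Integer arithmetic avoids floating-point rounding on large counts.
non_english_tokens = TOTAL_TOKENS * NON_ENGLISH_PERCENT // 100

# Assumption: tokens are spread evenly across languages (unlikely in practice).
tokens_per_language = non_english_tokens // LANGUAGES

print(f"Non-English tokens: {non_english_tokens:,}")
print(f"Per language (even split): {tokens_per_language:,}")
```

Even a 5% share therefore amounts to hundreds of billions of tokens, although spread over 30 languages it leaves each one with a small fraction of what English receives.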

Regarding availability, Meta said that Llama 3 will soon (no specific date was given) reach multiple environments, from professional cloud platforms to Hugging Face repositories, and that it will also have a web version at meta.ai. With it, users of the company's services in certain countries (which, for now, do not include ours) will be able to use the model in much the same way that Copilot users use GPT-4.

Source: Muy Computer

Donald Salinas is an experienced automobile journalist and writer for Div Bracket. He brings his readers the latest news and developments from the world of automobiles, offering a unique and knowledgeable perspective on the latest trends and innovations in the automotive industry.