Snowflake expands AI offering with additional Nvidia functionality

- June 4, 2024


Snowflake integrates solutions from the Nvidia AI Enterprise Suite into its own Cortex AI solution.

No data without AI, and no AI without Nvidia. For more than a year, “Nvidia” has been the must-have name for every technology company hosting an event, and Snowflake is no exception. At the Snowflake Summit in San Francisco, the company will further integrate Nvidia software and hardware with its own Cortex solution and Arctic LLM.

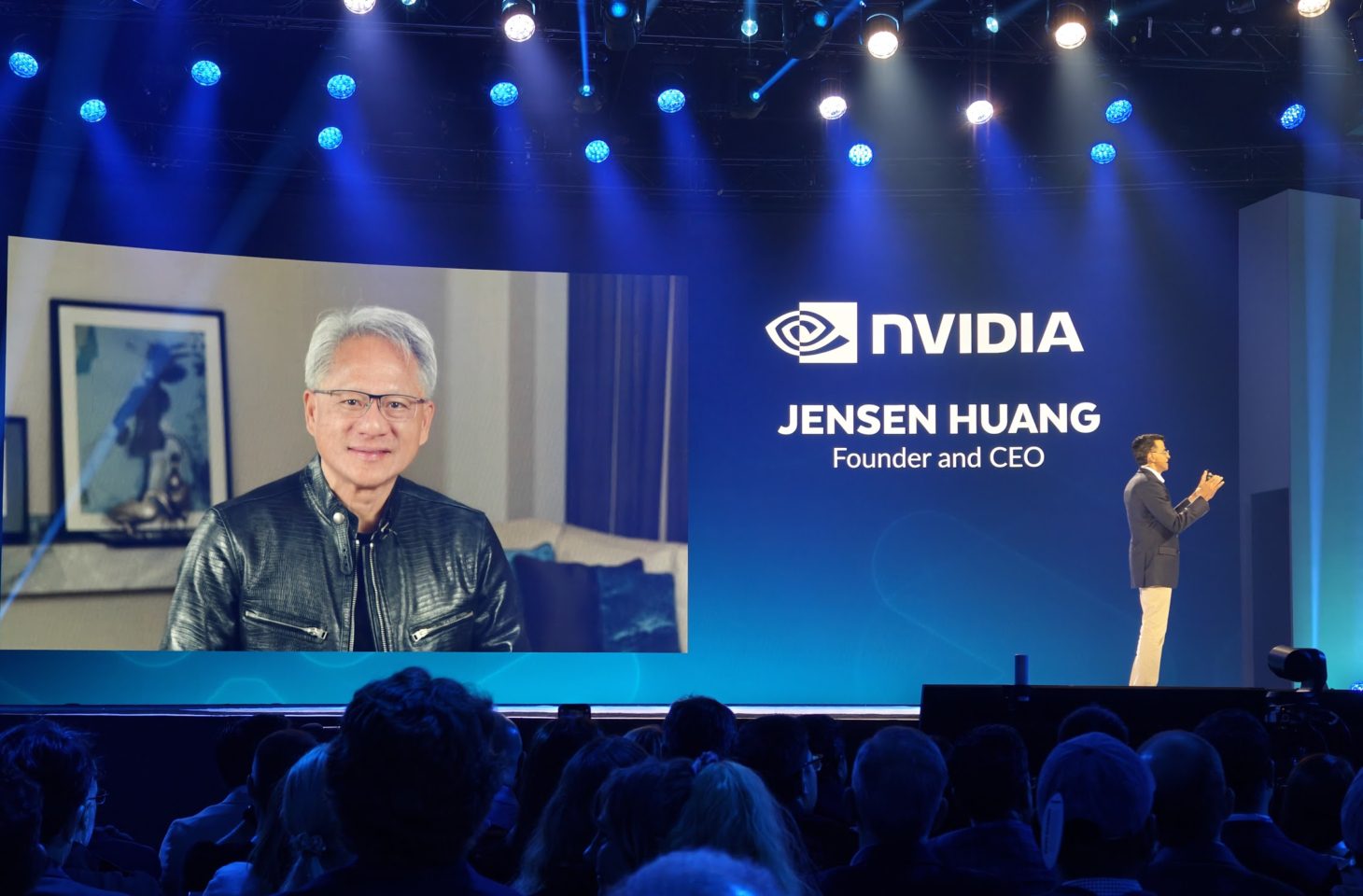

The integration of Nvidia into the Snowflake ecosystem was big news at last year’s summit. Nvidia CEO Jensen Huang attended in person and said that the combination of Snowflake’s data cloud with Nvidia’s AI capabilities has a lot of potential. At this year’s summit, both parties are expanding this collaboration further.

Snowflake, for example, integrates Nvidia AI Enterprise features into Cortex AI. Cortex AI is a layer between AI functionality and data that is designed to make it easier for users to use both their own AI solutions and ready-made AI functionality for their data. Two integrations are in focus.

Cortex AI works with Nvidia NeMo Retriever. This ensures fast and efficient access to the right data for Retrieval-Augmented Generation (RAG), where prompts to an LLM are enriched with proprietary data for more accurate results. “This is a major announcement,” said Nvidia CEO Jensen Huang on the keynote’s jumbotron, calling in directly from Taipei. “We now have the ability to chat with your corporate data. NeMo Retriever is a computing engine that we’ve been working on for years.”
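To make the idea behind Retrieval-Augmented Generation concrete, here is a minimal sketch in Python. It is not NeMo Retriever or Cortex AI code: the function names, the sample documents, and the simple word-overlap scoring are all assumptions chosen for illustration, and production retrievers typically rely on embeddings and vector search instead.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, doc):
    """Toy relevance score: number of query tokens that also occur in the doc."""
    q = Counter(tokenize(query))
    d = Counter(tokenize(doc))
    return sum(min(q[t], d[t]) for t in q)


def retrieve(query, docs, k=2):
    """Return the k documents with the highest overlap score."""
    ranked = sorted(docs, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query, docs, k=2):
    """Enrich the user's question with the retrieved proprietary context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical stand-ins for a company's internal data.
DOCS = [
    "Q1 revenue for the EMEA region was 12 million euros.",
    "The office cafeteria is closed on Fridays.",
    "EMEA revenue grew 8 percent compared with last year.",
]

QUESTION = "What was EMEA revenue in Q1?"
prompt = build_prompt(QUESTION, DOCS, k=2)
print(prompt)
```

The resulting prompt, rather than the bare question, is what would be sent to the LLM. The retrieval step decides which internal records the model gets to see, which is why a fast and accurate retriever matters for answer quality.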

If you want something a little faster, you can now run your AI inference apps in Snowflake on an Nvidia Triton inference server. “How quickly and efficiently you can generate is the factor that drives down the cost,” says Huang.

Cortex AI is a serverless AI implementation for Snowflake customers, which in part means that you as a customer don’t have to worry about the underlying hardware. On the other hand, specialized servers like a Triton server are more efficient (but also more expensive). Snowflake now gives users a choice in what powers their AI workloads.

Snowflake will also integrate Nvidia NIM into its own platform. Nvidia introduced NIM at GTC 2024, describing the solution as a set of optimized cloud-native microservices designed to accelerate time to market and simplify deployment of generative AI models anywhere, across cloud, data center, and GPU-accelerated workstations. In short, NIM inference microservices give you a secure way to invoke models.

Snowflake users can now get started with NIM in Snowflake as native apps within Snowpark Container Services. These containers run within the customer’s Snowflake account, so data remains protected as always. After all, robust data control in a zero-copy environment remains core to all Snowflake development.

“We are bringing GenAI to the data,” Huang adds. “We used to transfer the data to the computer over the network. The amount of data has become so large that it has become easier to bring the compute to the data.”

Finally, there is the Arctic LLM. Snowflake launched Arctic earlier this year. Arctic competes with GPT-4 and other LLMs and is designed for enterprises focused on data control. Inference with Arctic is available through Nvidia NIM (which in turn runs within Snowpark Container Services).

For years, Snowflake has called itself “the data cloud company,” a description that refers to the fact that the company doesn’t simply offer data warehouse or data lake functionality. Snowflake’s power lies in the simplicity with which you can leverage internal data in a secure way, but also in the way you can make that data available to third parties or combine it with relevant external data sources. This year, that’s no longer enough, and Snowflake is calling itself “the AI data cloud company.” No AI without data and no data without AI: the addition of those two letters speaks volumes.

Source: IT Daily

As an experienced journalist and author, Mary has been reporting on the latest news and trends for over 5 years. With a passion for uncovering the stories behind the headlines, Mary has earned a reputation as a trusted voice in the world of journalism. Her writing style is insightful, engaging and thought-provoking, as she takes a deep dive into the most pressing issues of our time.