Nvidia CEO: “AI doesn’t care where it goes to school”

- June 6, 2024

Jensen Huang, CEO of Nvidia, believes we should build more data centers in locations that are not currently used to train AI models.

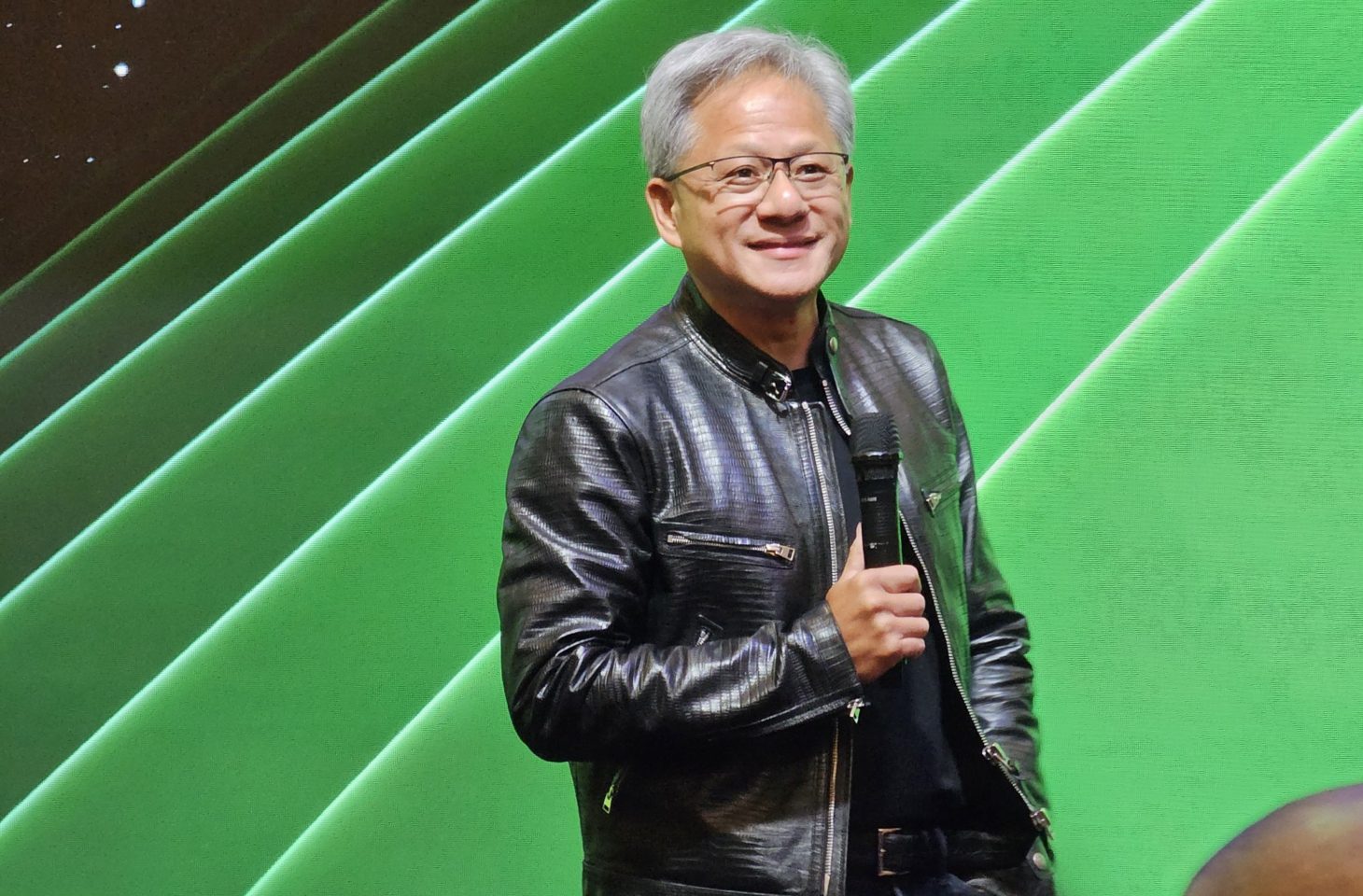

During Computex, Nvidia is the only speaker, and Nvidia CEO Jensen Huang is received like a rock star. During a question and answer session, one of the journalists even calls out to him, “We love you,” which is met with laughter.

Whenever sustainability comes up, Huang is clearly keen to offer clarity. He knows full well that each new generation of his chips draws more and more power: the latest Blackwell B200 chip requires a whopping 1,200 watts. Nevertheless, he has a clear answer.

“AI doesn’t care where it goes to school. The world doesn’t have enough energy available close to where people live.” He points to the shrinking energy surpluses in big cities such as Amsterdam or Taipei, where new companies can no longer get a grid connection.

“At the same time, there is a lot of excess energy in the world. The sun can provide enormous amounts of energy. The only drawback is that this energy is available in places where nobody lives. We need to build data centers and power plants there to train AI.”

Training an AI model through deep learning is the most energy-intensive step. While training is running, you hardly need any external connectivity, and latency is not an issue. Once the data is available, training can begin, and the process is repeated until the model performs well enough.

The end result is an LLM (Large Language Model) such as GPT-4, LLaMA 2, Claude or Gemini.

“Today, this training is accelerated with GPUs, which are much more energy efficient than traditional CPUs. Accelerated computing on a GPU can be up to 99 percent more efficient than on a CPU. That alone is a huge saving in energy costs. That’s why we have tools like ChatGPT today. A CPU cannot train these models in a timely and efficient manner.”

In addition to new locations for data centers, especially for training AI models, the Nvidia CEO sees plenty of room for further development on the inference side. This is where the model is put into practice after training is complete. For example, every question you ask ChatGPT requires an inference step.

“Generative AI is not about training, but about inference. Many things are not sufficiently optimized today. Take the weather simulation tool we developed ourselves in Taiwan. With our hardware on the inference side, it runs 3,000 times more efficiently than before. Accelerated computing is the key here.”

“Don’t think about training, think about inference. This is where the big energy gains can be made, and that makes the whole thing more sustainable.”

What he doesn’t mention is that the additional computing power also invites even more acceleration, which pushes the boundaries further still and ultimately results in higher energy consumption.

Source: IT Daily

As an experienced journalist and author, Mary has been reporting on the latest news and trends for over 5 years. With a passion for uncovering the stories behind the headlines, Mary has earned a reputation as a trusted voice in the world of journalism. Her writing style is insightful, engaging and thought-provoking, as she takes a deep dive into the most pressing issues of our time.