GenAI: On-Prem or in the Cloud?

- July 18, 2024

Generative AI was born in the cloud, but whether it will mature there is far from certain. On-prem providers see their opportunity to get in on the action. Where does GenAI work best for your business?

The GenAI hype is now entering its second year, and there is no end in sight. In 2024, generative AI is developing from an experimental toy into a technology that offers companies real added value; almost all players in the tech industry agree on this. According to a global McKinsey survey, 65 percent of companies are now actively working with GenAI.

Companies are already preparing to go one step further by integrating GenAI into their daily processes, but obstacles remain. One question many companies still cannot answer clearly is how they will implement the technology. This “how” question is also a “where” question: Where will GenAI run, on-premises or in the cloud?

GenAI is the next step in an evolution of artificial intelligence that has been going on for several decades. So-called “classical AI” is built to be good at one specific task, such as processing large amounts of data. Generative AI stands out for its creativity: it produces new content, from text to images, in response to a request phrased in natural language.

GenAI’s versatility comes from the complexity of its underlying models. LLMs like GPT-4 have sifted through terabytes of data to learn to find patterns and structures in unstructured data. A model’s capacity to do this is often expressed in its number of parameters, where more is often better. GPT-3, for example, has 175 billion parameters. OpenAI has not published parameter counts for its successors GPT-3.5 and GPT-4.

LLMs are getting bigger and bigger to achieve better performance. This comes at a high price, literally: training and running LLMs requires powerful and expensive hardware. Nvidia’s most powerful GPUs cost tens of thousands of dollars each, and data centers must continually expand to support the growth of AI models, not to mention the energy costs.
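To give a rough sense of why model size translates into hardware cost, the following minimal sketch estimates how much memory is needed just to hold a model's weights. The model sizes and the 80 GB of memory per GPU are illustrative assumptions, and the estimate ignores activations, caches and other overhead, so real requirements will be higher.

```python
import math

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory needed to hold the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def gpus_needed(num_params: float, gpu_memory_gb: float = 80.0,
                precision: str = "fp16") -> int:
    """Lower bound on GPUs needed to fit the weights.

    gpu_memory_gb is an assumed per-GPU capacity, not a vendor recommendation.
    """
    return math.ceil(weight_memory_gb(num_params, precision) / gpu_memory_gb)


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only to show how the numbers scale.
    for label, params in [("7 billion", 7e9), ("70 billion", 70e9)]:
        gb = weight_memory_gb(params)
        print(f"{label} parameters: {gb:.0f} GB of weights at fp16, "
              f"at least {gpus_needed(params)} GPU(s) with 80 GB each")
```

Even this lower bound shows that a tenfold increase in parameters pushes a model from a single accelerator to a multi-GPU server.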

The cost of building and operating large GenAI models can easily run into billions of dollars. Few companies can or want to bear those costs. It is no coincidence that the most powerful GenAI applications are directly or indirectly in the hands of the large hyperscalers.

The cradle of GenAI therefore lies in the public cloud. These large, complex models can easily be made accessible to a large audience via the cloud. To use ChatGPT, all you need is a laptop or smartphone with an internet connection.

Despite easy access to GenAI tools, many business leaders are putting the brakes on, the Boston Consulting Group notes. Their hesitation stems mainly from misunderstandings about how the technology works and where AI gets its knowledge from. The quality of the results that AI systems produce is also being questioned.

These concerns are not unfounded: GenAI makes mistakes, no matter how many parameters a model has. So-called “hallucinations” are often the result of errors or biases in the training data, which lead the LLM to come up with inaccurate or even completely nonsensical answers. It becomes problematic when users accept everything GenAI tells or shows them as true.

The versatility of GenAI systems is a strength, but some applications require specialist knowledge. A general-purpose LLM is not always the best fit, which is why many models can be fine-tuned to focus their output on specific use cases.

There’s another problem: fine-tuning models requires sharing data with them. Business leaders aren’t always willing to send internal company data to the cloud, especially if there is a risk that a model will reproduce that data for other users. You can (largely) solve that with a paid subscription, but wouldn’t it be more convenient if the AI model came to your company and stayed there?

The data dilemma surrounding GenAI presents opportunities for companies that want to give on-prem IT some love. Companies like Dell, Nutanix and HPE have developed solutions to run GenAI models in a “cloud-like” way on local infrastructure, with Nvidia having a (big) hand in all of them. Such solutions often require the purchase of servers with one or more Nvidia GPUs.

Speaking at HPE Discover, Han Tan, COO Hybrid Cloud at HPE, explains why GenAI would also feel at home in an on-prem environment. “It’s a misconception that AI has to be in the public cloud. Once you’ve run the models, you need to refine them with your own data, which many companies have on-premises. At some point, the AI has to come to the data.”

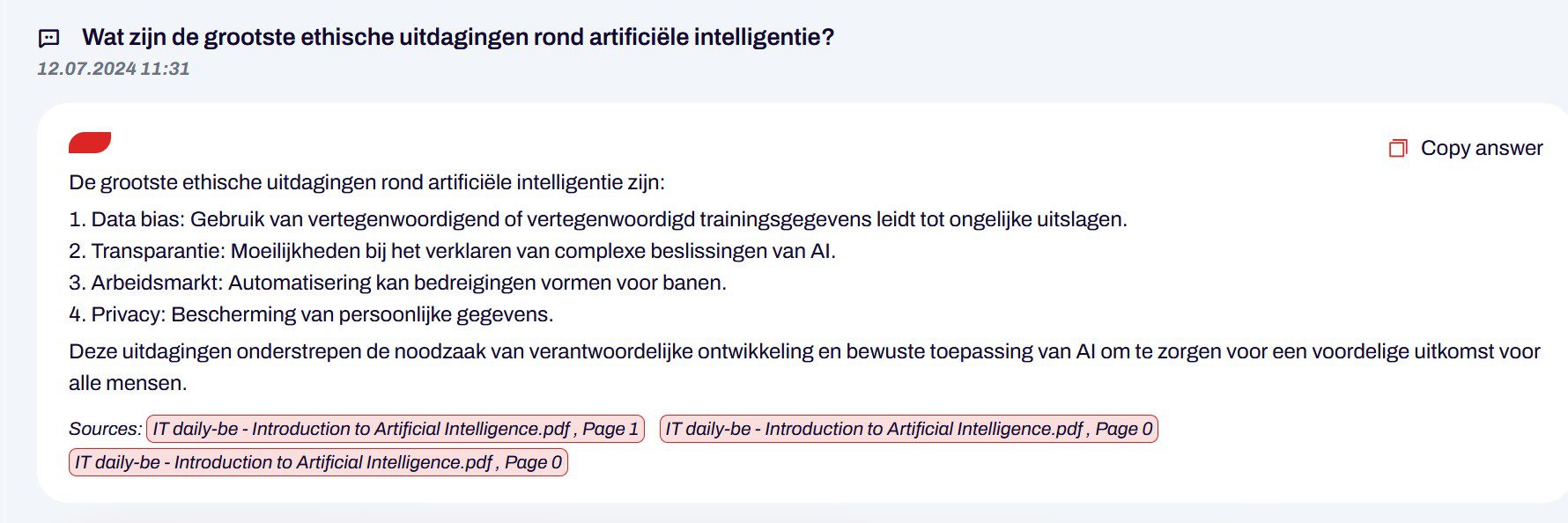

Fujitsu recently launched Private GPT, a GenAI tool for local use. During a live demo, Chief Data Officer Udo Würtz also cites the data argument as the main reason for keeping GenAI on-site as much as possible. “AI offers a great opportunity to share knowledge within the organization, but it must stay within the organization. Private GPT protects your intellectual property because the data does not leave the data center.”

Companies can request a test drive with Private GPT from Fujitsu. Würtz takes us on such a test drive. With the Private GPT solution, Fujitsu provides the software and application layer, which is then installed on the customer’s infrastructure.

Of course, a model is also needed. Fujitsu chose the open source model Mixtral 8x7B, developed and trained by Mistral AI. “The special thing about this model is that it consists of eight ‘experts’. For your specific question, the model selects two ‘experts’ and combines their results to get the best answer,” says Würtz, explaining the choice.
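The idea of selecting two out of eight experts and blending their results can be sketched in a few lines. This is a toy illustration of top-2 routing, not Mixtral's actual code: the experts here are simple scaling functions and the router scores are supplied by hand, whereas in a real mixture-of-experts model both are learned neural network layers and the selection happens inside the network rather than once per question.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[Sequence[float]], List[float]]


def make_toy_expert(scale: float) -> Expert:
    """Hypothetical stand-in for an expert network: multiplies the input."""
    return lambda x: [scale * v for v in x]


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top2_mixture(x: Sequence[float], router_scores: Sequence[float],
                 experts: Sequence[Expert]) -> Tuple[List[int], List[float]]:
    """Run only the two best-scoring experts and blend their outputs."""
    ranked = sorted(range(len(experts)),
                    key=lambda i: router_scores[i], reverse=True)
    chosen = ranked[:2]
    # Weights are normalized over the two chosen experts only.
    weights = softmax([router_scores[i] for i in chosen])
    outputs = [experts[i](x) for i in chosen]
    combined = [sum(w * out[d] for w, out in zip(weights, outputs))
                for d in range(len(outputs[0]))]
    return chosen, combined


if __name__ == "__main__":
    experts = [make_toy_expert(float(i + 1)) for i in range(8)]
    scores = [0.0, 0.0, 5.0, 0.0, 0.0, 5.0, 0.0, 0.0]  # hand-picked scores
    chosen, result = top2_mixture([1.0, 2.0], scores, experts)
    print("experts used:", chosen, "combined output:", result)
```

Because only two of the eight experts run for any given input, such a model does far less computation per answer than its total parameter count suggests.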

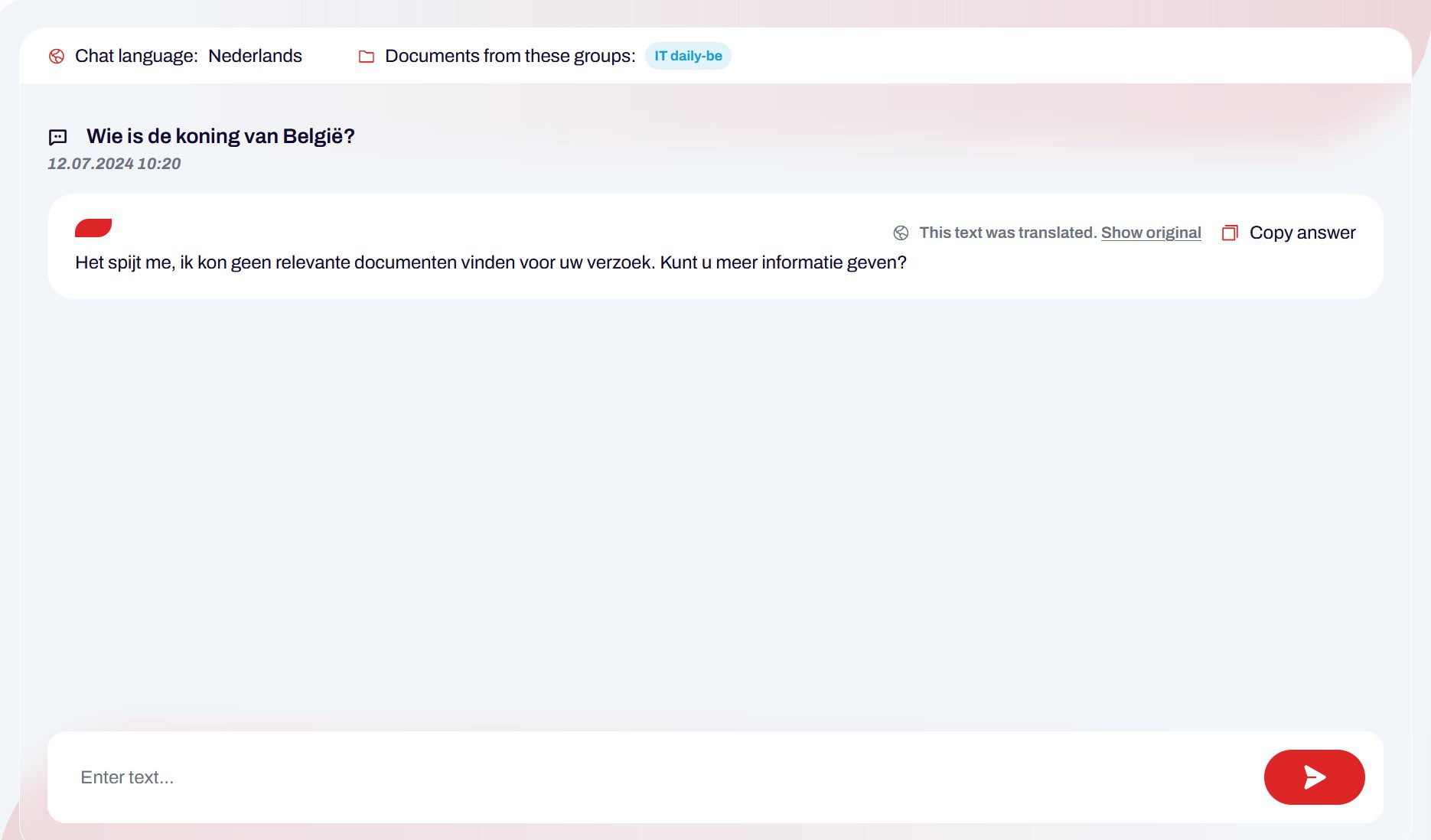

Private GPT’s user interface is not very different from that of public GenAI chatbots like ChatGPT. Private GPT can only retrieve information from documents you share with the model. Each document can be assigned to a specific user group if you want to keep files within a team. Chatting is possible in various languages, including Dutch, and the model switches between languages effortlessly.

If you are looking for answers to general knowledge questions, Private GPT is not the right place for you. The model is not directly connected to the Internet and therefore cannot retrieve additional information for you. Private GPT knows its limitations: to avoid hallucinations, it honestly admits when it cannot answer a question. The application is designed to analyze internal documents in a controlled environment.
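The behavior described here (answering only from shared documents, respecting user groups, and declining when nothing relevant is found) can be illustrated with a deliberately simple sketch. It is not Fujitsu's implementation: the `Document` class, the keyword-overlap scoring and the `min_overlap` threshold are all hypothetical, and a real system would pass the retrieved text to an LLM instead of returning it directly.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Set

REFUSAL = "I cannot answer that from the documents shared with me."


@dataclass(frozen=True)
class Document:
    title: str
    text: str
    allowed_groups: FrozenSet[str]  # user groups allowed to see this file


def tokenize(text: str) -> Set[str]:
    """Lowercase words with surrounding punctuation stripped."""
    return {w.strip(".,?!:;").lower() for w in text.split()} - {""}


def retrieve(question: str, user_groups: Set[str], docs: List[Document],
             min_overlap: int = 2) -> Optional[Document]:
    """Return the best-matching document the user may see, or None."""
    question_words = tokenize(question)
    best, best_score = None, 0
    for doc in docs:
        if not (doc.allowed_groups & user_groups):
            continue  # document belongs to another team
        score = len(question_words & tokenize(doc.text))
        if score > best_score:
            best, best_score = doc, score
    return best if best_score >= min_overlap else None


def answer(question: str, user_groups: Set[str], docs: List[Document]) -> str:
    """Answer from a shared document, or admit that no answer is available."""
    doc = retrieve(question, user_groups, docs)
    if doc is None:
        return REFUSAL
    return f"Based on '{doc.title}': {doc.text}"


if __name__ == "__main__":
    library = [
        Document("Leave policy",
                 "Employees receive 25 vacation days per year.",
                 frozenset({"hr"})),
        Document("Roadmap",
                 "The product roadmap targets a release in the third quarter.",
                 frozenset({"engineering"})),
    ]
    question = "How many vacation days do employees receive?"
    print(answer(question, {"hr"}, library))
    print(answer(question, {"engineering"}, library))
    print(answer("What is the capital of France?", {"hr"}, library))
```

In this sketch the group check runs before any matching, so a document outside the user's groups can never influence the answer.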

Both on-premises and cloud-based AI have their advantages and limitations. For sensitive data, on-premises seems to be the preferred option. The cloud suits companies that don’t have the computing power to accommodate complex AI models. Which option is best for your company depends on the resources available and what you want to use the technology for.

Source: IT Daily

As an experienced journalist and author, Mary has been reporting on the latest news and trends for over 5 years. With a passion for uncovering the stories behind the headlines, Mary has earned a reputation as a trusted voice in the world of journalism. Her writing style is insightful, engaging and thought-provoking, as she takes a deep dive into the most pressing issues of our time.