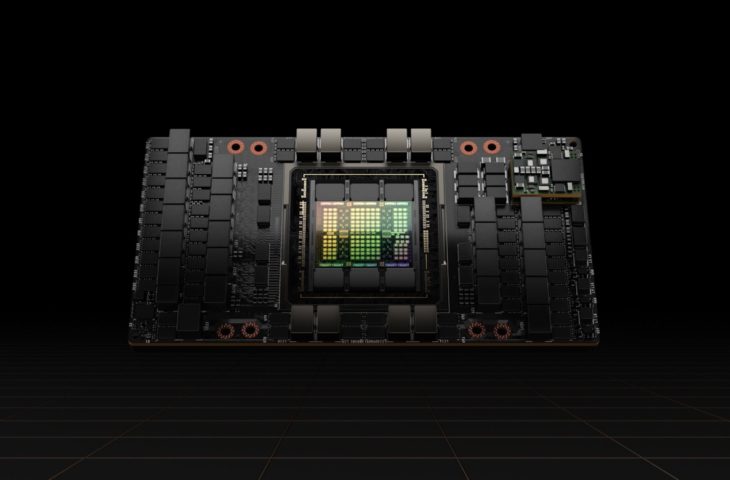

During Llama 3 training, the Nvidia H100 GPUs proved to be the most failure-prone components. Their HBM3 memory in particular failed frequently.

Meta trained Llama 3 405B on a GPU supercomputer with 16,384 Nvidia H100 80 GB GPUs. During the 54-day training period, the cluster experienced 419 component failures. On average, something went wrong about every three hours.
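The "every three hours" figure follows directly from the two numbers above. A minimal sketch of the arithmetic, using only the 54 days and 419 failures reported here (the function and variable names are illustrative, not Meta's):

```python
# Figures as reported in the article.
TRAINING_DAYS = 54
FAILURES = 419


def mean_hours_between_failures(days: float, failures: int) -> float:
    """Average time between failures, assuming they are spread evenly."""
    return days * 24 / failures


mtbf_hours = mean_hours_between_failures(TRAINING_DAYS, FAILURES)
print(f"One failure every {mtbf_hours:.2f} hours on average")
```

This is only an average over the whole run; it says nothing about how the failures were actually distributed over the 54 days.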

Fragile component

In about half of the cases, the H100 GPUs were involved in some way. Faults in the HBM3 memory accounted for 17.2 percent of the failures. Another thirty percent were caused by other problems with the GPU (or NVLink).

Only two CPUs failed during training. The remaining failures were due to a variety of hardware and software errors. The GPUs proved to be by far the most fragile components of the cluster, although the impact of the defects on training remained limited. Meta claims it was training effectively 90 percent of the time.

The company used several techniques to limit the impact of component failures. Given the size of the training cluster, failures were inevitable. To train efficiently, a large parallel system must be able to handle local problems. Meta used software to detect faulty GPUs quickly and fix the resulting problems, often automatically. Only three incidents had to be resolved entirely through manual intervention.

Economies of scale

The numbers illustrate the challenges of training large AI models. Training clusters are now so large that hardware problems are a routine part of the training process. It is up to researchers and specialists to keep training running even when hardware fails.

On average, Meta encountered an issue every three hours, but much larger AI clusters are in the pipeline. Elon Musk’s xAI wants to build a cluster with 100,000 Nvidia H100 GPUs. If components fail at the same rate per GPU, xAI engineers will have to deal with a failure roughly every half hour.
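To get a feel for what the larger cluster would mean, the failure rate can be scaled by GPU count. The sketch below is a back-of-the-envelope estimate only: it assumes failures grow linearly with the number of GPUs, which the article does not confirm, and all names in it are illustrative.

```python
# Figures as reported in the article.
META_GPUS = 16_384
META_DAYS = 54
META_FAILURES = 419
XAI_GPUS = 100_000


def failure_interval_minutes(gpus: int) -> float:
    """Estimated minutes between failures for a cluster of `gpus` GPUs.

    Assumption: the failure rate per GPU-hour matches Meta's run and
    scales linearly with cluster size.
    """
    failures_per_gpu_hour = META_FAILURES / (META_GPUS * META_DAYS * 24)
    failures_per_hour = failures_per_gpu_hour * gpus
    return 60 / failures_per_hour


meta_interval = failure_interval_minutes(META_GPUS)
xai_interval = failure_interval_minutes(XAI_GPUS)
print(f"Meta cluster: one failure every {meta_interval:.0f} minutes")
print(f"100,000-GPU cluster: one failure every {xai_interval:.0f} minutes")
```

Under that linear assumption, the interval shrinks from about three hours to about half an hour. Real clusters may differ, since other components (network, hosts, software) do not necessarily scale the same way.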