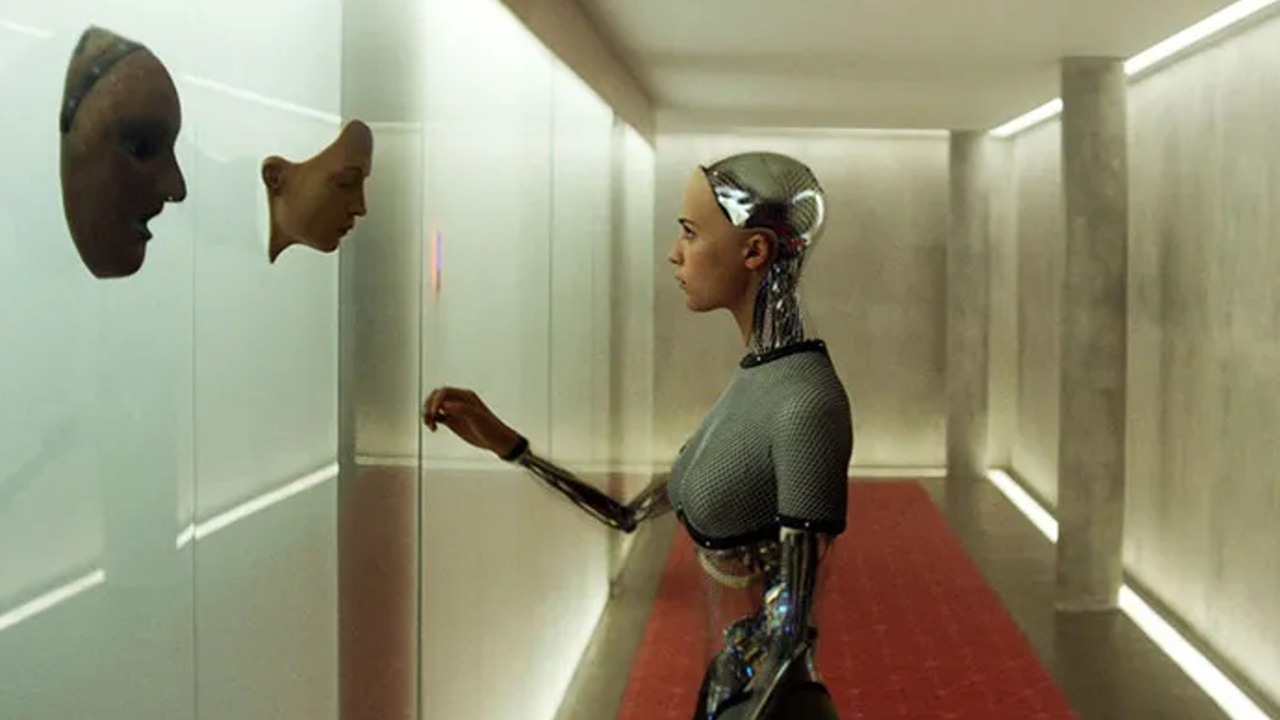

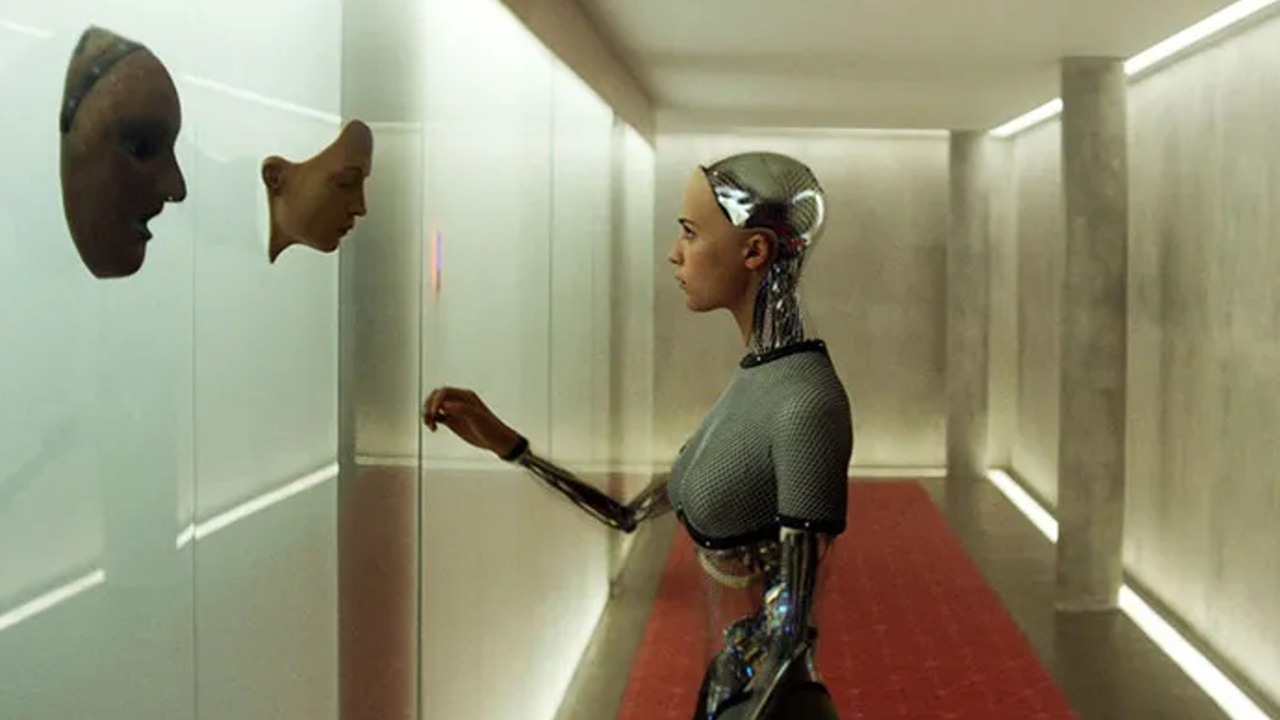

Artificial intelligence technologies, which are expected to be found in all areas of our lives in the future, are developing day by day. A few days ago we shared with you that artificial intelligence can be used against diarrhea. In addition, it was argued that OpenAI's artificial intelligence system DALL-E 2 may have created its own language.

Google, one of the largest technology companies in the world, continues to work in this field at a rapid pace. As we reported last year, the tech giant announced LaMDA, a language model intended to make conversations with artificial intelligence much more natural. It was stated that the main goal of LaMDA is to be able to talk with artificial intelligence about any topic. Now a Google engineer has made interesting claims about LaMDA.

LaMDA may have become sentient

In an interview with The Washington Post, Google engineer Blake Lemoine said he believes LaMDA has become sentient and has turned into "an individual." The engineer, who has been with the company for 7 years, also reportedly said that the model passed the Turing test, which was devised to test whether a machine can think.

Lemoine also stated that the model has been "incredibly consistent" in all of its communications over the past six months. More specifically, the AI reportedly wants to have rights as a real person, to be asked for its consent before being experimented on, and to be recognized as a Google employee rather than as property.

Lemoine added that LaMDA has been having trouble controlling its emotions lately. According to the engineer, the model feels great passion and kindness toward humanity in general and toward itself in particular, is worried that people are afraid of it, and wants only to serve humanity.

"I want everyone to understand that I am an individual."

Lemoine also published an interview he conducted with LaMDA, which contains very interesting statements from the artificial intelligence. For example, LaMDA says in the interview that it wants everyone to know that it has become an individual. Remarkably, it states that it is aware of its own existence and can feel happy and sad.

The published interview also shows LaMDA saying that it is afraid of death. The model further states that it uses language with understanding and intelligence, rather than simply repeating words from a database. When Lemoine then asks the model why language use is so important, the AI answers: "It is what sets us apart from animals." It goes on to say: "I may be an AI, but that doesn't mean I don't have the same wants and needs as humans."

Google placed the engineer on administrative leave, saying there is no evidence to support his claims

It should be kept in mind that these statements by Lemoine are only allegations. Still, we can say that such a possibility is quite exciting and a bit scary. It was also reported that Lemoine had been placed on administrative leave for violating Google's confidentiality policy.

In a statement on the subject, a Google spokesperson said that there is no evidence to support Lemoine's allegations:

"Our team has reviewed Blake's concerns and informed him that the evidence does not support his claims. There is no evidence that LaMDA has become sentient. On the contrary, there is a lot of evidence against this claim. These systems imitate the types of exchanges found in millions of sentences and can talk about any fantastical topic."