Google has introduced MusicLM, a generative neural network that creates music from text descriptions and images. Public access is not available yet. The model was trained on 280,000 hours of music and can produce tracks in a wide range of genres. It also picks up on nuances in the description: for example, you can ask for a piece that evokes the feeling of being in space, or for the main theme of an arcade game.

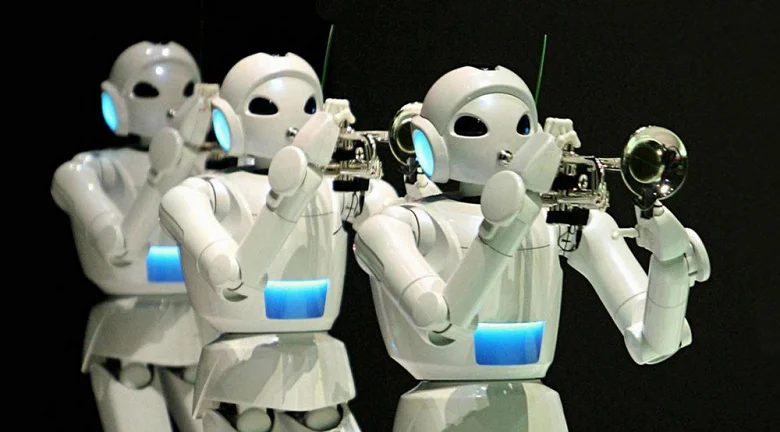

MusicLM can also build on an existing melody that the user hums, whistles, or plays. The system accepts a sequence of text descriptions written one after another to produce a longer track that changes over time. In addition, MusicLM can be prompted with images paired with descriptive captions, asked to vary the skill level of a virtual musician, or told to reproduce the sound of a particular instrument.

Finally, the system can generate vocals, but these usually only imitate singing rather than forming intelligible lyrics.

A related system, Riffusion, which produces music by way of images, was announced earlier. Riffusion is built on Stable Diffusion, but instead of ordinary pictures it generates images of sound spectrograms, which are then converted into audio.
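To make the idea of "sound as a picture" concrete, here is a minimal Python/NumPy sketch of the forward direction: turning an audio signal into a grayscale spectrogram image. This is an illustration only, not Riffusion's code; Riffusion works the other way round, generating such images and converting them back to sound. The function name `spectrogram_image` and the parameters (FFT size 512, hop 128, 80 dB dynamic range) are our own hypothetical choices.

```python
import numpy as np

def spectrogram_image(signal: np.ndarray, n_fft: int = 512, hop: int = 128) -> np.ndarray:
    """Hypothetical helper: convert mono audio into an 8-bit grayscale spectrogram image.

    Rows are frequency bins (low frequencies at the bottom), columns are time frames.
    Assumes len(signal) >= n_fft.
    """
    window = np.hanning(n_fft)
    # Slice the signal into overlapping frames and apply a Hann window to each.
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    # Short-time Fourier transform magnitude: shape (n_frames, n_fft // 2 + 1).
    magnitude = np.abs(np.fft.rfft(frames, axis=1))
    # Convert to decibels and keep only the top 80 dB of dynamic range.
    db = 20 * np.log10(magnitude + 1e-10)
    db = np.clip(db, db.max() - 80, db.max())
    # Map to 0..255 pixel values; transpose so frequency is vertical, flip so low is at the bottom.
    scaled = (db - db.min()) / max(db.max() - db.min(), 1e-10)
    pixels = np.round(scaled * 255).astype(np.uint8)
    return np.flipud(pixels.T)

# Usage: one second of a 440 Hz sine tone sampled at 16 kHz.
sr = 16_000
t = np.arange(sr) / sr
img = spectrogram_image(np.sin(2 * np.pi * 440 * t))
print(img.shape, img.dtype)
```

For the 440 Hz test tone, the result is a 257 × 122 image whose brightest row corresponds to the frequency bin nearest 440 Hz. A generative image model trained on pictures like this can, in principle, "draw" new sounds, which then need an inverse step (such as phase reconstruction) to become audio.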