Bing doesn’t always respond well, and that’s normal

- February 16, 2023

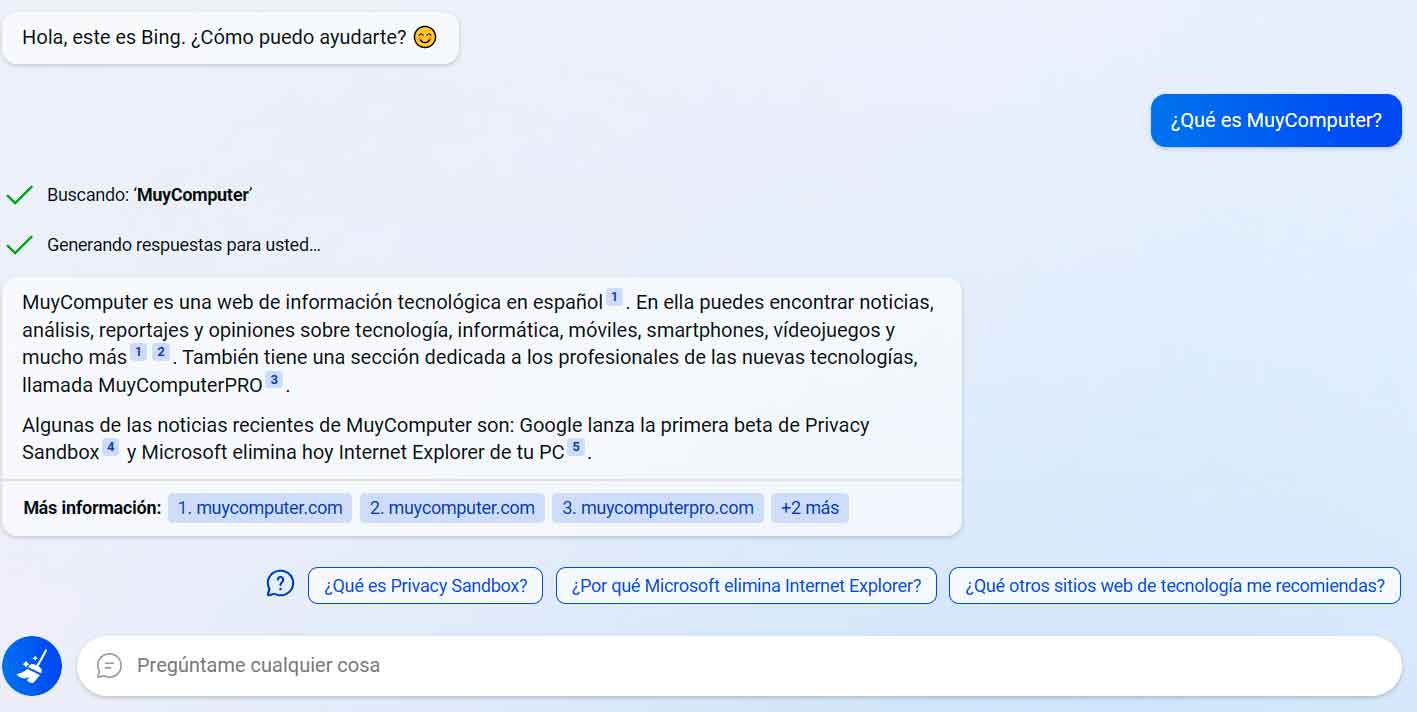

February is turning out to be a momentous month for Bing, the Microsoft search engine that until a few weeks ago was all but unknown to most people (in fact, it was known but very little used). Thanks to the joint effort of Microsoft and OpenAI to build an AI-based chatbot into it, Bing has climbed to the top of the most repeated searches and captured the interest of a huge number of users.

Ever since Microsoft officially announced the new Bing with Prometheus, as well as the new Microsoft Edge with its AI-based “co-pilot” mode, legions of users have wanted to try the beta version, for which the folks at Redmond set up a waiting list. A few days ago we were able to test it and, as I mentioned then, the first contact was quite positive, a feeling that has only grown the more I use it.

Of course, that doesn’t mean it’s perfect. I already commented on this after running the test, and we have seen it on social networks over the past few days. Sometimes the new Bing gives wrong answers, although here we find a major difference with ChatGPT: Microsoft’s search engine always shows the sources it used to build its answers, so by pulling on the thread a little, we can confirm or refute them.

Something else, and more surprising, is that, as some users have shown on social networks, Bing can also give “out of tune” answers, that is, answers outside the tone Microsoft wanted to set for its chatbot. We’ve seen cases where it rambled, others where it told a human that the user was a bad user while it (Bing) was a good chatbot… there are even those who claim that the search engine was emotionally blackmailing them.

Microsoft has published an entry on the search engine’s official blog in which it acknowledges the bugs and shares some lessons learned from the first week of the new Bing’s deployment. In it, the company readily admits the chatbot’s bugs, says it is working on fixing them (which is a good sign, as it indicates that they have already been identified and reproduced) and, logically, also celebrates that the initial reception and cooperation from users have been very positive.

As for the bugs in the chatbot’s behavior, Microsoft says that, to its surprise (on this point I think they were somewhat naive), it didn’t expect Bing to be used for “general exploration of the world” or for social entertainment, two types of usage where conversations can go on for quite a long time. Does that matter? The truth is, yes, because according to the post, the company found that in extended sessions of 15 or more questions, Bing can repeat itself or be provoked into giving answers that are not necessarily useful or “in accordance with the specified tone”.

The tech company therefore believes that long chat sessions can confuse the model about which questions it is answering and, as a result, that it may need to add a tool that makes it easier for users to refresh the context or start from scratch. We can also read that “the model sometimes tries to respond or reflect in the tone in which it is asked to give answers, which can lead to a style we didn’t intend. This is a non-trivial scenario that requires a lot of prompting, so most users will not encounter it, but we are looking for a way to provide more accurate control.”

Also, and I have to admit this reminded me a bit of Interstellar, the Christopher Nolan movie, the next measure they’re considering is adding a switch that would give users more control over how creative they want Bing to be when answering their questions. In theory, this control could prevent Bing from making weird comments, but in practice I’m sure many will set it to maximum creativity if they can, because let’s not forget that this can be fun for many users.

Elsewhere in the post we can read that the reception has generally been very good: 71% of responses from the new Bing chatbot received a “thumbs up”, i.e. a positive evaluation, and the participants in this first beta phase are providing a lot of valuable information for refining how it works. In addition, the company says that the new Bing receives daily updates, so it is evolving quite quickly.

And why did I say at the beginning that it is normal for it to fail? Because we are talking about a terribly complex technology, subject to the particular judgment of each of the many users who have already gained access, and which, let’s not forget, has only been in beta for a week. When we saw ChatGPT’s failures a few months ago, I didn’t hesitate to affirm that the chatbot was great despite them, and Bing takes the best of it and adds speed, an internet connection, transparency about its sources… in short, many improvements to something that was already good.

We’ll continue to see strange behavior in the new Bing, and Microsoft and OpenAI engineers will have to deal with some particularly difficult problems, but it’s becoming clearer and less open to debate that we’re at the dawn of a new paradigm for interacting with search engines on the Internet and, in fact, for accessing information itself. However, we must not forget the underlying complexity, and we should understand that there is still a long road of evolution ahead of us.

At this early stage it is almost impossible for it not to fail, but what really matters is how Microsoft and OpenAI respond to these failures, and at the moment both seem to be doing the best they can.

Source: Muy Computer

Donald Salinas is an experienced automobile journalist and writer for Div Bracket. He brings his readers the latest news and developments from the world of automobiles, offering a unique and knowledgeable perspective on the latest trends and innovations in the automotive industry.