Machine learning models cannot be trusted with absolute certainty – research

- April 19, 2023

“The basic idea behind the research is that if you put critical systems in the hands of AI and algorithms, you must also learn to prepare for their failure,” Myllyaho says.

It is not necessarily dangerous for a streaming service to recommend content that users find uninteresting, but such behavior undermines confidence that the system works. Errors in more critical systems based on machine learning, however, can do much more harm.

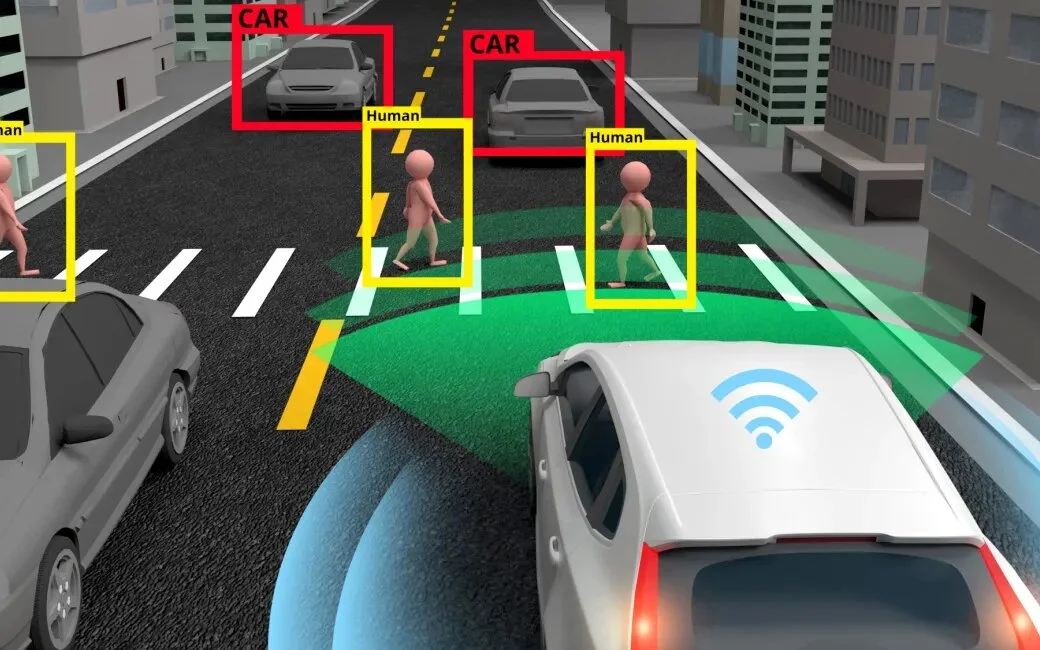

“For example, I wanted to explore how to prepare for computer vision systems that misidentify things. In computed tomography, artificial intelligence can identify objects in cross-sectional images. This raises the question of how far computers should be trusted with such tasks, given that errors do occur, and when a person should be asked to check the result,” Myllyaho says.
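
Deciding when to trust a model's output and when to ask someone to look at it is often handled with a confidence threshold. The following Python sketch is a hypothetical illustration rather than the researchers' approach: `route_prediction`, the `Decision` type and the 0.9 threshold are assumptions, and the input is assumed to be a mapping from class labels to model probabilities.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    confidence: float
    needs_human_review: bool


def route_prediction(probabilities: dict[str, float], threshold: float = 0.9) -> Decision:
    """Accept the model's top label only if its probability clears the threshold.

    Hypothetical helper: `probabilities` maps labels to model scores.
    Anything below the threshold is flagged for a human to review.
    """
    if not probabilities:
        raise ValueError("no predictions to route")
    # Pick the label with the highest score.
    label, confidence = max(probabilities.items(), key=lambda item: item[1])
    return Decision(label, confidence, needs_human_review=confidence < threshold)


if __name__ == "__main__":
    # Example output from a hypothetical CT-image classifier.
    print(route_prediction({"lesion": 0.62, "healthy": 0.38}))
```

In practice the threshold would be tuned to the cost of an undetected error in the specific application, and raw model scores are not guaranteed to be well calibrated probabilities.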

The more critical the system, the more important its ability to minimize the associated risks.

Alongside Myllyaho, the research was conducted by Mikko Raatikainen, Tomi Männistö, Jukka K. Nurminen and Tommi Mikkonen. The study is based on expert interviews.

“Software architects were interviewed about faults and inaccuracies in and around machine learning models. We also wanted to find out which design choices could help prevent errors,” Myllyaho says.

If a machine learning model is affected by corrupted data, the problem can spread to the systems that use the model. It is also necessary to determine which mechanisms are suitable for correcting errors.
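
One common way to keep bad data from propagating is to validate inputs before they reach the model and reject anything implausible. A minimal Python sketch, assuming each input is a fixed-length numeric feature vector with known plausible ranges; `FEATURE_BOUNDS` and `validate_input` are hypothetical names, and the bounds are made-up values for illustration.

```python
import math

# Hypothetical plausible range (low, high) for each input feature.
FEATURE_BOUNDS = [(0.0, 1.0), (-50.0, 50.0), (0.0, 300.0)]


def validate_input(features: list[float]) -> list[str]:
    """Return the problems found in one input; an empty list means it looks sane."""
    if len(features) != len(FEATURE_BOUNDS):
        return [f"expected {len(FEATURE_BOUNDS)} features, got {len(features)}"]
    problems = []
    for i, (value, (low, high)) in enumerate(zip(features, FEATURE_BOUNDS)):
        if math.isnan(value) or math.isinf(value):
            problems.append(f"feature {i} is not a finite number")
        elif not low <= value <= high:
            problems.append(f"feature {i}={value} outside [{low}, {high}]")
    return problems


if __name__ == "__main__":
    print(validate_input([0.5, 10.0, 120.0]))
    print(validate_input([0.5, 10.0, 400.0]))
```

Inputs that fail the check can be rejected or set aside for inspection instead of being passed on, so a data problem is caught at the model boundary rather than in downstream systems.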

“Systems should be designed to prevent severe errors from escalating. Ultimately, how severe a problem can become depends on the system.”

For example, it is easy to understand that autonomous vehicles need various safety and security mechanisms. The same applies to other AI solutions, which need safe modes in order to operate properly.

“We need to explore how to make AI behave appropriately under different conditions, that is, in line with human reasoning. The optimal solution isn’t always obvious, and developers have to make choices about what to do when they’re unsure.”

Myllyaho has not yet progressed to an actual algorithm but has extended the research by developing a suitable mechanism for fault detection.

“For now, it is only an idea concerning neural networks. A functioning machine learning model would be able to replace the current model instantly if the current one stops working. In other words, the mechanism should be able to predict errors or recognize signs of them.”
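
The idea of a functioning model instantly taking over from one that has stopped working resembles a standard failover pattern. The Python sketch below is a hypothetical illustration, not the mechanism developed in the research: the class `FailoverPredictor`, the `is_plausible` check and the `max_failures` limit are all assumptions made for the example. An output the check rejects, or an exception raised by the primary model, is treated as a sign of error.

```python
import math
from typing import Callable, Sequence

# A "model" here is any callable mapping an input vector to a numeric output.
Model = Callable[[Sequence[float]], float]


class FailoverPredictor:
    """Serve predictions from a primary model; switch to a fallback after repeated errors.

    Hypothetical sketch: error detection is reduced to a plausibility check
    on each output plus catching exceptions raised by the primary model.
    """

    def __init__(self, primary: Model, fallback: Model,
                 is_plausible: Callable[[float], bool], max_failures: int = 3):
        self.primary = primary
        self.fallback = fallback
        self.is_plausible = is_plausible
        self.max_failures = max_failures  # consecutive failures tolerated before switching
        self.failures = 0
        self.using_fallback = False

    def predict(self, x: Sequence[float]) -> float:
        if not self.using_fallback:
            try:
                y = self.primary(x)
                if self.is_plausible(y):
                    self.failures = 0  # a good output resets the failure streak
                    return y
            except Exception:
                pass  # an exception counts as a sign of error, like an implausible output
            self.failures += 1
            if self.failures >= self.max_failures:
                self.using_fallback = True  # stop routing requests to the primary model
        # Either the primary just failed or it has been switched off: use the fallback.
        return self.fallback(x)


if __name__ == "__main__":
    def broken_primary(x: Sequence[float]) -> float:
        return float("nan")  # simulates a model producing garbage output

    def mean_fallback(x: Sequence[float]) -> float:
        return sum(x) / len(x)  # a deliberately simple backup model

    predictor = FailoverPredictor(broken_primary, mean_fallback,
                                  is_plausible=math.isfinite, max_failures=2)
    for _ in range(3):
        print(predictor.predict([1.0, 2.0, 3.0]), predictor.using_fallback)
```

This sketch never switches back to the primary model; a real system would likely also need a way to re-test the primary and restore it once it behaves correctly again.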

Myllyaho has recently been focusing on completing a PhD thesis and therefore cannot yet say anything about future involvement in the project. Led by Jukka K. Nurminen, the IVVES project will continue to test the security of machine learning systems.

Source: Port Altele

As an experienced journalist and author, Mary has been reporting on the latest news and trends for over 5 years. With a passion for uncovering the stories behind the headlines, Mary has earned a reputation as a trusted voice in the world of journalism. Her writing style is insightful, engaging and thought-provoking, as she takes a deep dive into the most pressing issues of our time.