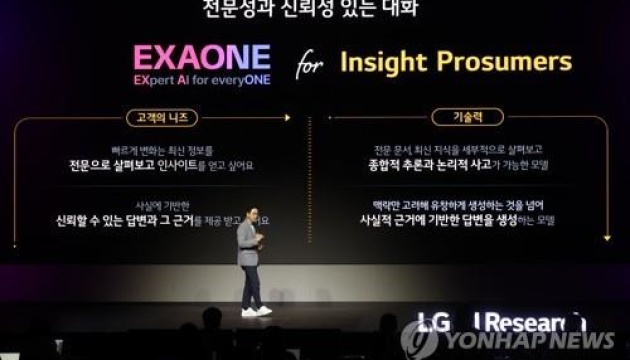

South Korean artificial intelligence lab LG AI Research has introduced the second generation of its large-scale multimodal artificial intelligence (AI) model.

Yonhap reported this, according to Ukrinform.

The lab said the EXAONE 2.0 model runs faster and more efficiently and uses less energy than the first version, introduced in December 2021.

EXAONE, short for “expert artificial intelligence for all”, is a giant multimodal language model that can understand both speech and images at the same time, allowing it to convert images to text and vice versa.

Multimodal means having more than one way to convey a message.

The new version of the model was reportedly trained on 45 million patent documents and 350 million images.

Because most of the data on which the model was trained is in English, EXAONE works in English as well as Korean.

As Ukrinform reported earlier, Alphabet Inc., the parent company of Google, has made the Bard chatbot available to users in Ukraine, the European Union and Brazil. The AI tool will be able to generate responses in more than 40 languages, including Chinese, Spanish and Hindi.

Photo: “Yonhap”