Yesterday was a big day: NVIDIA unveiled the GeForce RTX 40 series and focused the event on two highly anticipated models, the GeForce RTX 4090, which will become the most powerful graphics card on the market, and the GeForce RTX 4080, which will be available in two configurations, one equipped with 12GB of graphics memory and the other with 16GB. The 16GB model will also be the more powerful of the two, as it will have more shaders and a wider memory bus.

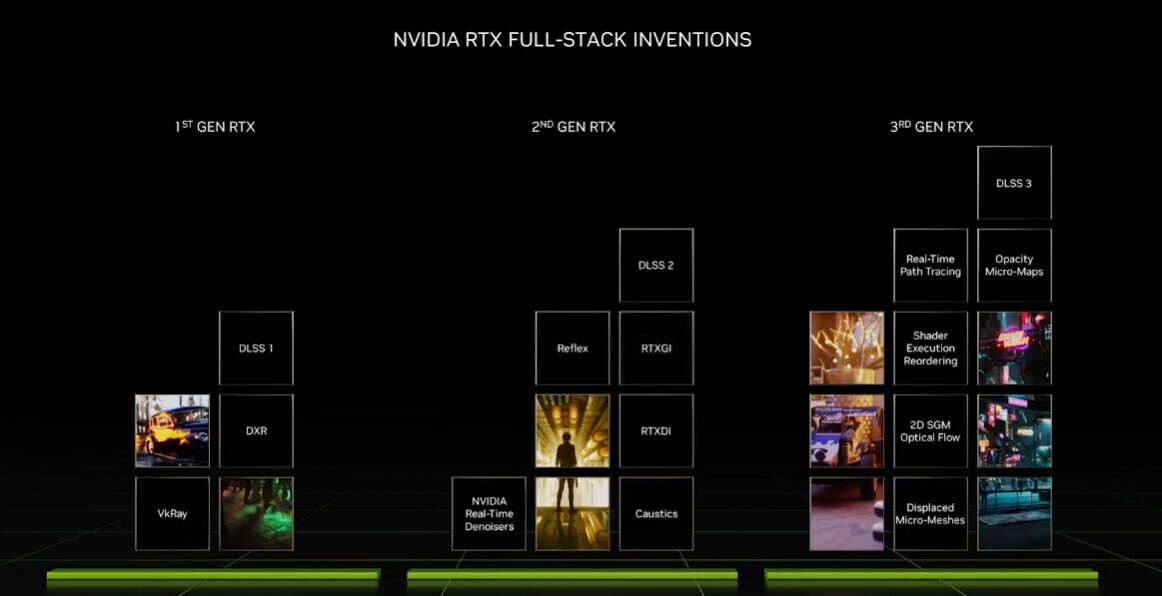

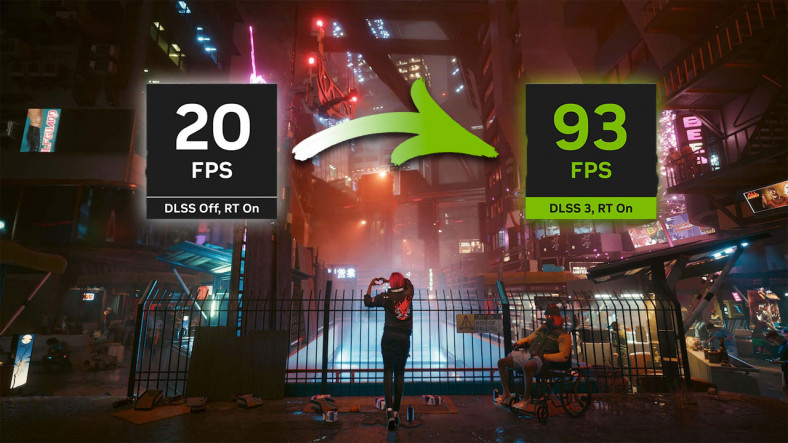

During yesterday’s event, NVIDIA focused on showing the most important technological advances of this new generation of graphics cards, among which we can highlight a huge increase in ray tracing performance and the arrival of DLSS 3, a technology that currently has no rival and that shows that NVIDIA’s bet on specialized hardware and artificial intelligence applied to gaming has finally paid off.

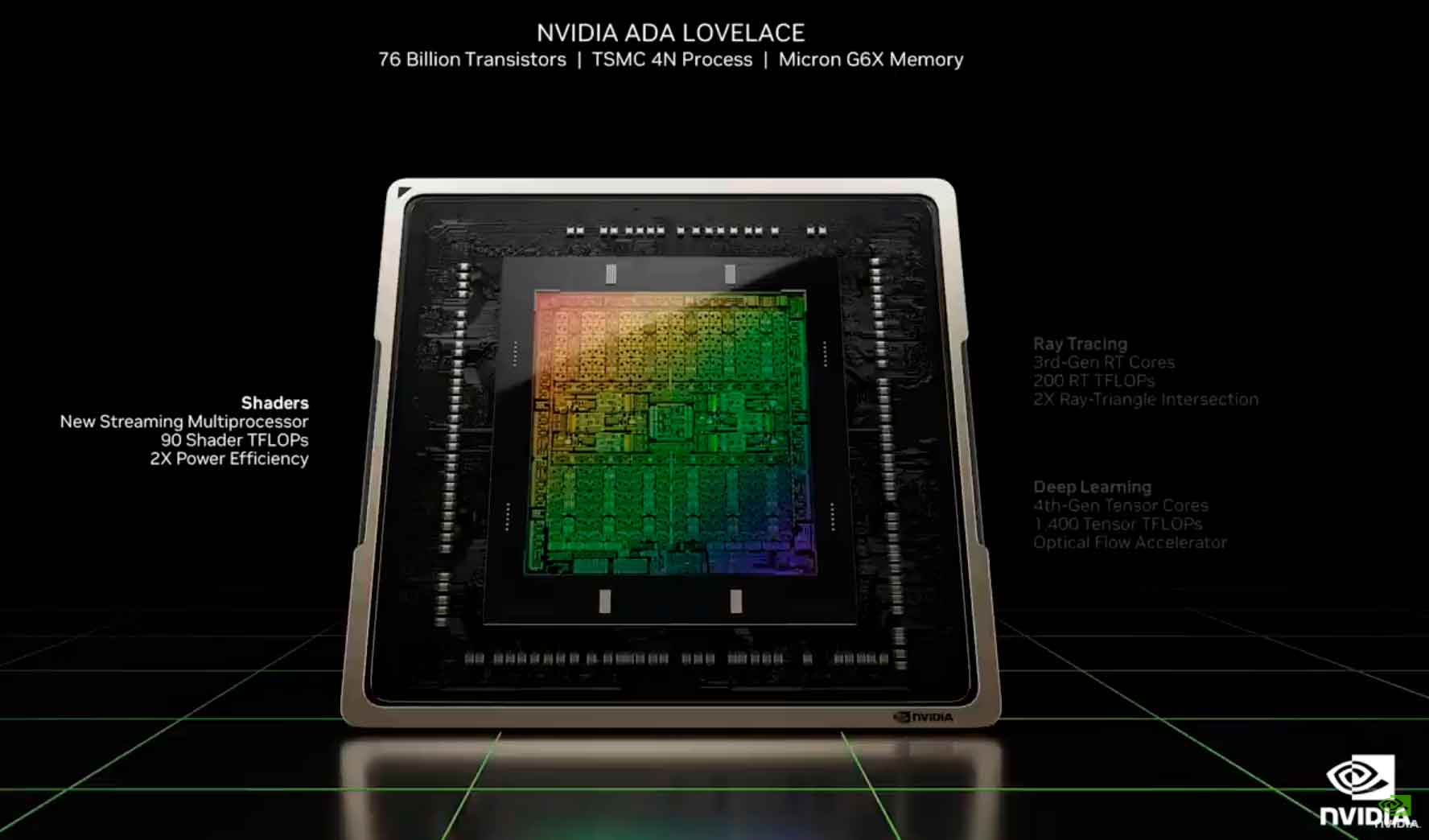

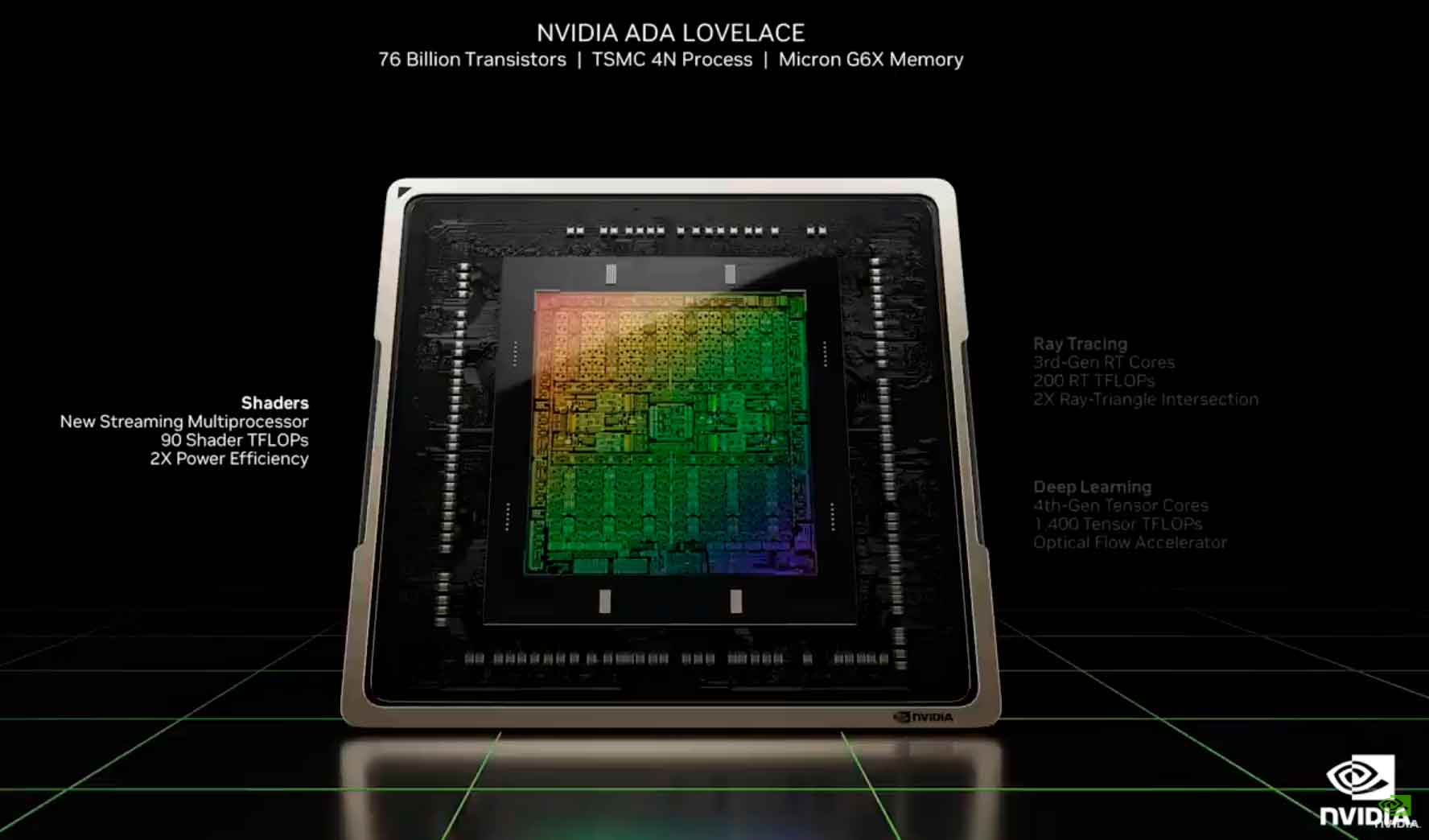

The presentation was very interesting and we already told you about it in great detail yesterday, but today I want to share with you an article dedicated to diving into the AD102 graphics core, the chip that powers the GeForce RTX 4090 and is manufactured on TSMC’s 4nm node. Compared to the GA102, it represents an important technological leap, and thanks to its architectural improvements and latest-generation technologies, it can as much as quadruple the performance of its predecessor.

NVIDIA AD102 vs. NVIDIA GA102

The NVIDIA AD102 chip is the most powerful and advanced graphics core on the mainstream consumer market. Its transistor count is impressive: it is almost triple that of the GA102 graphics core, and its FP32 performance is more than double. There is also a big gap when it comes to manufacturing node and number of shaders, as we can see in the comparison just below these lines:

NVIDIA AD102

- Ada Lovelace architecture.

- Made on TSMC’s 4nm node.

- 76.3 billion transistors.

- 18,176 shaders.

- 568 texturing units.

- 568 fourth-generation tensor cores.

- 192 raster units.

- 142 third-generation RT cores.

- 384-bit memory bus.

- 90 TFLOPs of performance in FP32.

- Configurable with up to 48GB of GDDR6 memory with ECC.

- PCIe Gen4 x16 interface.

NVIDIA GA102

- Ampere architecture.

- Made on Samsung’s 8nm node.

- 28.3 billion transistors.

- 10,752 shaders.

- 336 texturing units.

- 336 third-generation tensor cores.

- 112 raster units.

- 84 second-generation RT cores.

- 384-bit memory bus.

- 40 TFLOPs of performance in FP32 (GeForce RTX 3090 Ti).

- Configurable with up to 48GB of GDDR6 memory with ECC.

- PCIe Gen4 x16 interface.

Broadly speaking, the differences between the NVIDIA AD102 and NVIDIA GA102 GPUs are huge, and they have made possible one of the biggest generational leaps we have seen in recent years. With Ada Lovelace, the green giant moved from 8nm to 4nm, increased the number of shaders by roughly 70%, and introduced third-generation RT cores and fourth-generation tensor cores.
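To put the two spec lists side by side, here is a minimal Python sketch that simply divides the AD102 figures by the GA102 ones. The dictionary keys and the `spec_ratios` name are my own labels for illustration; the numbers are copied from the lists above and nothing else is assumed.

```python
# Figures copied from the AD102 and GA102 spec lists in this article.
ad102 = {
    "transistors_billion": 76.3,
    "shaders": 18176,
    "texture_units": 568,
    "rt_cores": 142,
    "fp32_tflops": 90.0,
}
ga102 = {
    "transistors_billion": 28.3,
    "shaders": 10752,
    "texture_units": 336,
    "rt_cores": 84,
    "fp32_tflops": 40.0,
}

# Ratio of each AD102 figure to the matching GA102 figure.
spec_ratios = {name: ad102[name] / ga102[name] for name in ad102}

for name, ratio in spec_ratios.items():
    print(f"{name}: {ratio:.2f}x")
```

With these inputs the transistor count comes out at about 2.7x and FP32 throughput at 2.25x, while shaders, texturing units and RT cores all grow by about 1.69x.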

Third-generation RT cores offer up to 200 TFLOPs of ray tracing performance and calculate ray-triangle intersections twice as fast as second-generation RT cores. This is already a significant improvement, but we also have to add the value contributed by SER, which stands for Shader Execution Reordering, a technology that reorders shading work on the fly in order to improve parallelization at the level of both shaders and RT cores. Overall, thanks to both innovations, the NVIDIA AD102 chip should be able to triple the ray tracing performance of the GA102.
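The idea behind reordering can be illustrated with a toy model. The sketch below is not NVIDIA's implementation, which lives in hardware and the driver; it only assumes that threads run in fixed-size groups and that a group is more efficient when all of its threads run the same shader. The group size of 4, the material names and the `divergence` helper are all hypothetical.

```python
GROUP_SIZE = 4  # hypothetical group size, kept small for readability


def divergence(shader_ids, group_size=GROUP_SIZE):
    """Average number of distinct shaders each group of threads has to run."""
    groups = [
        shader_ids[i:i + group_size]
        for i in range(0, len(shader_ids), group_size)
    ]
    return sum(len(set(group)) for group in groups) / len(groups)


# Each entry is the shader a ray needs after hitting a surface (made-up data).
hits = ["metal", "glass", "skin"] * 4

# Toy "reordering": bring threads that need the same shader together.
reordered = sorted(hits)

print("before:", divergence(hits))
print("after: ", divergence(reordered))
```

In this made-up scene every group starts out juggling three different shaders and ends up running just one after sorting, which is the kind of coherence gain the reordering is after.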

With the fourth generation of tensor cores, we have another great generational evolution. They reach up to 1,400 TFLOPs in artificial intelligence and deep learning operations and work together with the Optical Flow Accelerator, which, as we told you at the time, is the central pillar on which NVIDIA DLSS 3 is built, the intelligent image reconstruction and scaling technique that brought NVIDIA back to the top and makes it clear that the green team has no competition here.

At the level of operating frequencies, NVIDIA also achieved a significant increase with the AD102 chip. The version used by the GeForce RTX 4090 reaches 2,610 MHz in turbo mode. For comparison, just remember that the GeForce RTX 3090 has a turbo mode of 1,695 MHz, which leaves us with an increase of almost 1 GHz.
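As a back-of-the-envelope check on these clocks, the sketch below computes the gap between the two boost figures and also works backwards from the FP32 numbers in the spec lists. It assumes the usual rule of thumb that each shader performs 2 FP32 operations per clock (one fused multiply-add); that factor and the function name `implied_clock_mhz` are my assumptions, not something stated in the presentation.

```python
def implied_clock_mhz(fp32_tflops, shaders, flops_per_shader_per_clock=2):
    """Clock (MHz) at which `shaders` units would reach `fp32_tflops`.

    Assumes 2 FP32 operations per shader per clock (one FMA).
    """
    return fp32_tflops * 1e12 / (shaders * flops_per_shader_per_clock) / 1e6


# Boost clocks quoted in the text, in MHz.
rtx_4090_boost = 2610
rtx_3090_boost = 1695

boost_delta_mhz = rtx_4090_boost - rtx_3090_boost
boost_gain = rtx_4090_boost / rtx_3090_boost - 1

# Clocks implied by the FP32 figures and shader counts in the spec lists.
ad102_clock = implied_clock_mhz(90, 18176)
ga102_clock = implied_clock_mhz(40, 10752)

print(f"Boost delta: {boost_delta_mhz} MHz ({boost_gain:.0%})")
print(f"AD102 implied clock: {ad102_clock:.0f} MHz")
print(f"GA102 implied clock: {ga102_clock:.0f} MHz")
```

The difference between the quoted boost clocks is 915 MHz. Under the 2-operations-per-clock assumption, the 90 TFLOPs figure corresponds to a clock somewhat below the quoted 2,610 MHz, so the spec list and the boost figure probably do not describe exactly the same configuration.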

We might think that all these technological and performance improvements had a huge impact on power consumption, but in the end the increase was not that big. The GeForce RTX 4090, which is the first card to use the NVIDIA AD102 graphics core, will have a default TGP of 450 watts, just like the GeForce RTX 3090 Ti.

NVIDIA continues to set the pace to follow

I won’t lie to you: since the first leaks started appearing, my interest in the GeForce RTX 40 skyrocketed, and that is only natural, since those of you who read us daily already know that hardware is a big passion of mine. I had very high expectations, and I must say that with yesterday’s presentation NVIDIA managed to completely exceed them, especially with the improvements focused on specialization, i.e. ray tracing and intelligent image reconstruction and scaling.

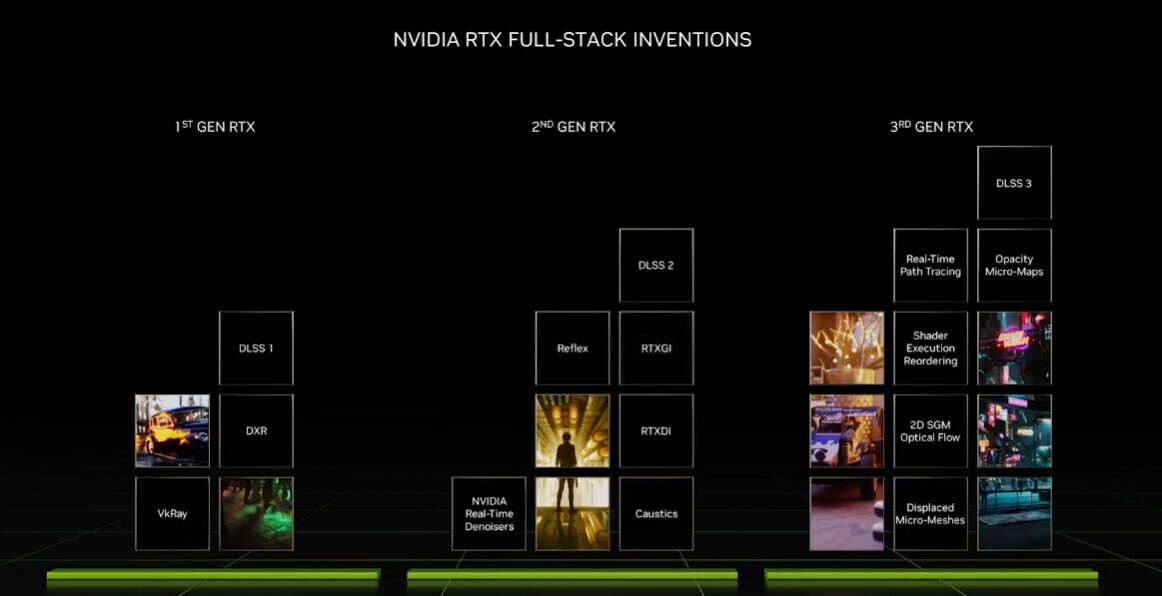

NVIDIA made a risky bet in 2018 with Turing, the architecture that marked the arrival of the first generation of RT cores and brought tensor cores into their second generation. Ray tracing entered the world of gaming, and artificial intelligence applied to video games began to demonstrate its capabilities with DLSS 1.0, although it was not until the debut of DLSS 2.0 that it became truly groundbreaking.

Many questioned this strategy on NVIDIA’s part, but eventually time proved it right. Ray tracing is no longer a bet on the future; it has become the present of video games. With Ampere, the green giant managed to tame ray tracing, and we can say that with Ada Lovelace it has reached a level that would have seemed impossible just a few years ago.

Improving raw performance is important, but specialization is what has made previously unimaginable generational leaps possible, and in the end it has become more than clear that NVIDIA was not only right about its commitment to RT cores and tensor cores, but has also become the company that sets the pace in the industry, the great rival to beat. Not surprisingly, even Intel uses GeForce RTX graphics cards as a benchmark for its performance comparisons.