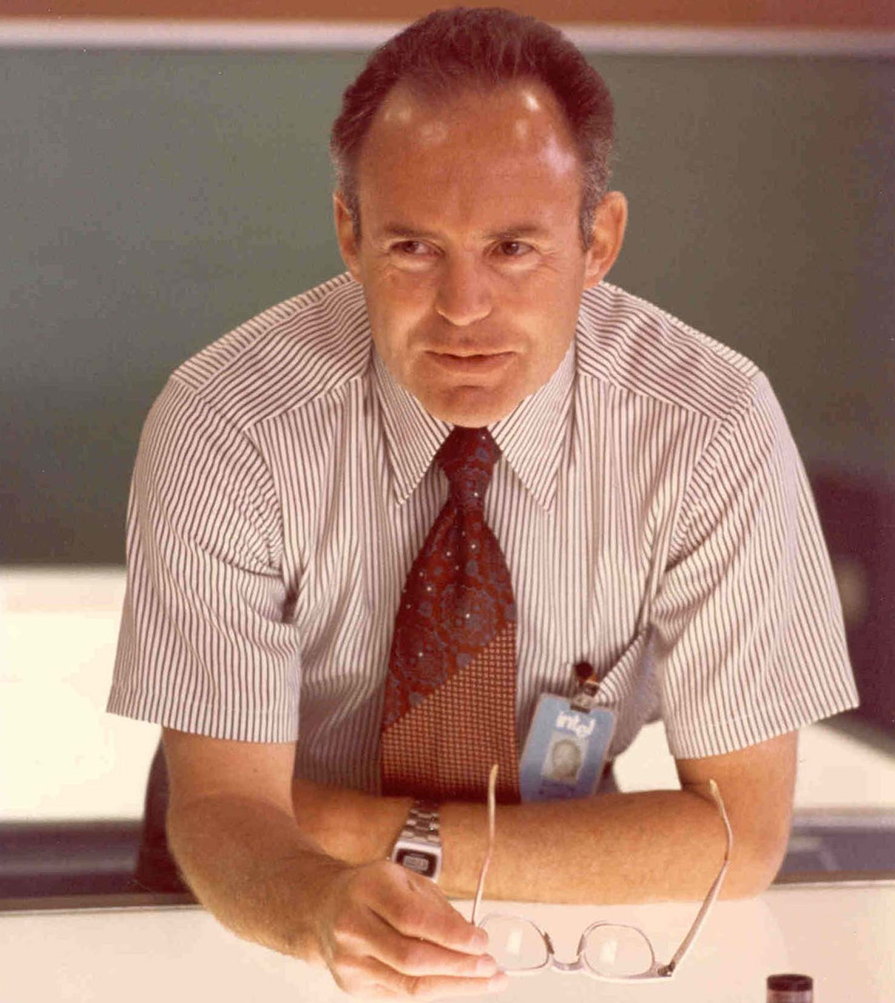

Moore’s Law is the great legacy of Gordon E. Moore, an engineer, Ph.D. in physics and chemistry, and co-founder of Intel, who died last weekend. We pay him a well-deserved tribute here as another of the “fathers” of modern computing. But what exactly is this law, and how has it so decisively shaped modern computing?

What is Moore’s Law?

It is a simple statement, but a very ambitious one. In this empirical law, Moore predicted that the number of transistors per unit area in integrated circuits would double every year with minimal cost increases, and that the trend would continue for at least another decade. The statement, formulated in April 1965, was revised by the engineer in 1975, when he saw that this pace could not be sustained. He restated his famous law to say that the number of transistors doubles approximately every two years.
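The gap between the two formulations is easy to underestimate. As a rough sketch: if a chip starts with `initial` elements and the count doubles once every `doubling_period` years, then after `years` years it holds `initial * 2 ** (years / doubling_period)` elements. The function name and the starting count of 1,000 below are illustrative assumptions, not figures from Moore's papers.

```python
def projected_count(initial: float, years: float, doubling_period: float) -> float:
    """Element count after `years`, doubling once every `doubling_period` years."""
    return initial * 2 ** (years / doubling_period)


# Illustrative starting point (an assumption for this sketch): 1,000 elements.
start = 1_000
for period in (1, 2):
    total = projected_count(start, years=10, doubling_period=period)
    print(f"doubling every {period} year(s): {total:,.0f} elements after 10 years")
```

Over a single decade, the yearly pace gives 1,024,000 elements against 32,000 for the two-year pace, a 32-fold difference.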

Beyond the longer doubling period, the law became a milestone for chip development at Intel and across the industry. It defined business strategy in the semiconductor sector and made possible the microprocessor and, later, the personal computer. It should be noted that Moore’s Law applies not only to computers but to any type of integrated circuit. It has been vital for the entire industry and has helped turn technology into one of the world’s leading sectors.

The effect of the “law” went far beyond simply increasing the number of transistors, the device invented at Bell Laboratories in the United States in 1947 by John Bardeen and his colleagues, because it allowed the technology to become more efficient with each generation. Just as important as performance was cost reduction, which moved in the opposite direction: as performance rose, cost per transistor fell. As a result, the industry was able to develop new products and services.

Compared with the first single-chip CPU, the Intel 4004, a modern chip multiplies computing power and energy efficiency a thousand times over, while reducing the cost to 1/60th of the 4004. Size tells the same story. The first semiconductor transistors were the size of a fingernail, and the supercomputers of the 1970s took up an entire room. To see a single transistor today with the naked eye, we would have to enlarge a chip to the size of a house. As for performance, an ordinary smartphone has more computing power than those supercomputers.

The origin of Moore’s Law

If you are wondering where this law came from, there is nothing better than the explanation given by the engineer himself, which traces the path to its creation back to his time at Fairchild Semiconductor, the company he founded along with seven other pioneers.

“In the early 1960s, we were still developing semiconductor technology and making it more and more practical. It was a difficult technology to implement with the tools we initially had available. I became director of research and development at Fairchild Semiconductor, running the lab and seeing what we could do as we improved the technology.

Then Electronics magazine asked me to submit an article for its 35th anniversary issue predicting what would happen in the semiconductor industry over the next 10 years. So I took the opportunity to analyze what had happened until then. That was, I think, in 1964. I looked at the few chips we had made and realized we had gone from one transistor on a chip to a chip with about eight elements, transistors and resistors.

The new chips that were arriving had about twice the number of elements, about 16. In the lab we were making chips with about 30 elements, and we were looking at the possibility of making devices with twice that number, about 60 elements per chip. I took a piece of semi-logarithmic paper, plotted the data and, starting with the planar transistor in 1959, realized that we were basically doubling every year.

I extrapolated the observation and said that we would continue to double every year and go from about 60 elements then to 60,000 in 10 years. At the end of 10 years, if we didn’t have 10 doublings of the number of elements on a chip, we had at least nine. So one of my colleagues (I think it was Carver Mead, a professor at Caltech) christened it “Moore’s Law,” a name that stuck well beyond my calculations.”
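Moore’s arithmetic can be checked in a few lines. The sketch below uses illustrative variable names and only the figures quoted above. It computes what ten annual doublings of 60 elements give, and how many doublings separate 60 from 60,000.

```python
import math

start_elements = 60        # elements per chip Moore cites as within reach
target_elements = 60_000   # his extrapolated figure for ten years later

# Ten annual doublings multiply the count by 2**10 = 1024.
after_ten_doublings = start_elements * 2 ** 10

# Number of doublings needed to grow from 60 to 60,000 (a factor of 1,000).
doublings_needed = math.log2(target_elements / start_elements)

print(after_ten_doublings)          # 61440
print(round(doublings_needed, 2))   # 9.97
```

The figure of 60,000 is therefore a rounded one: a factor of 1,000 corresponds to just under ten doublings, which fits Moore’s remark that the industry managed at least nine.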

Does Moore’s Law have a future?

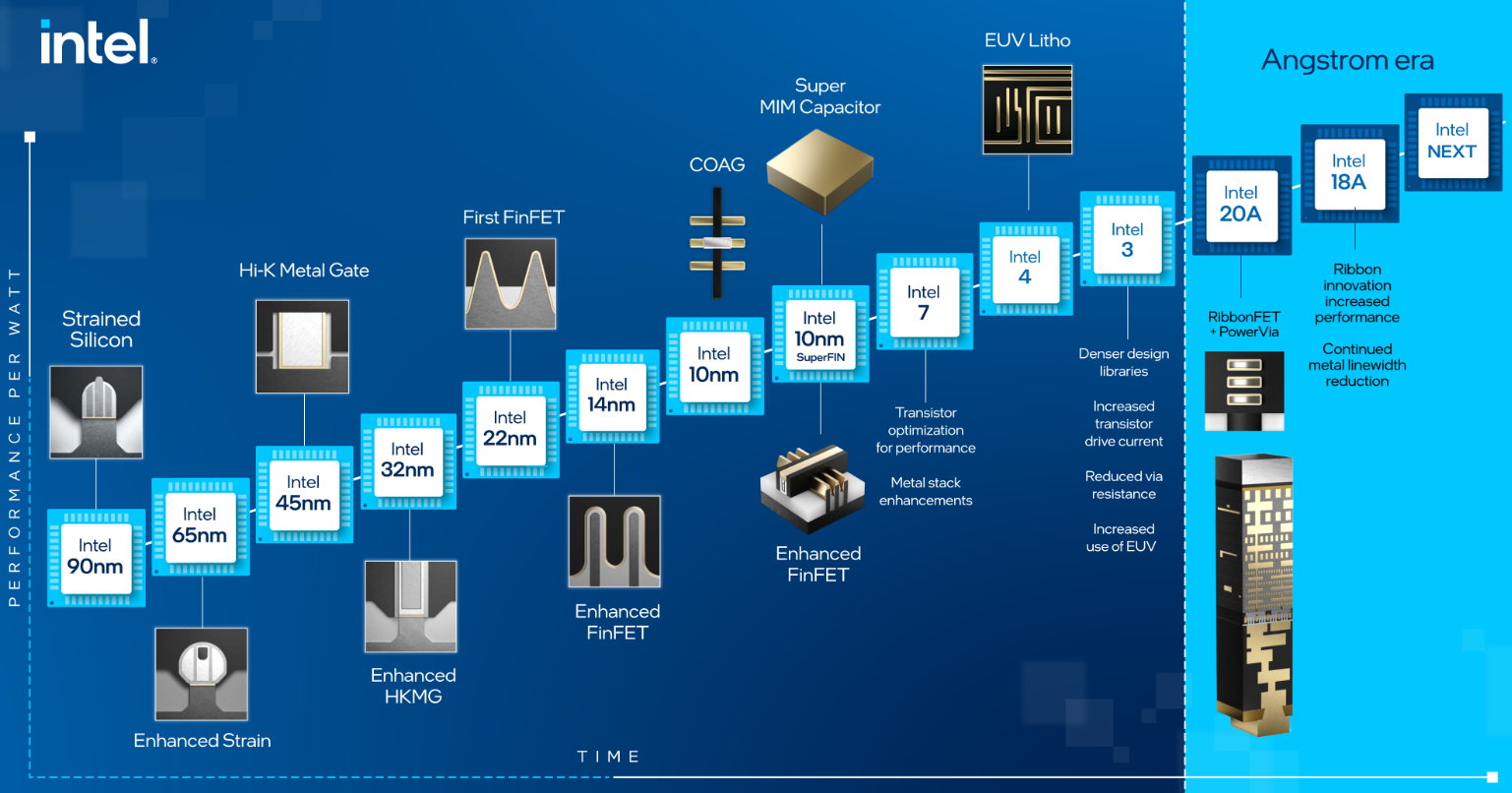

Various authoritative sources, including major Intel rivals such as NVIDIA, declared years ago that this law was dead. Moore himself never claimed it would last forever, given the limits of silicon as the main material of the semiconductor industry. It bears repeating that this is not a mathematical or physical law, but an observation and prediction about the capacity of an industry, one that has had an expiration date since its inception.

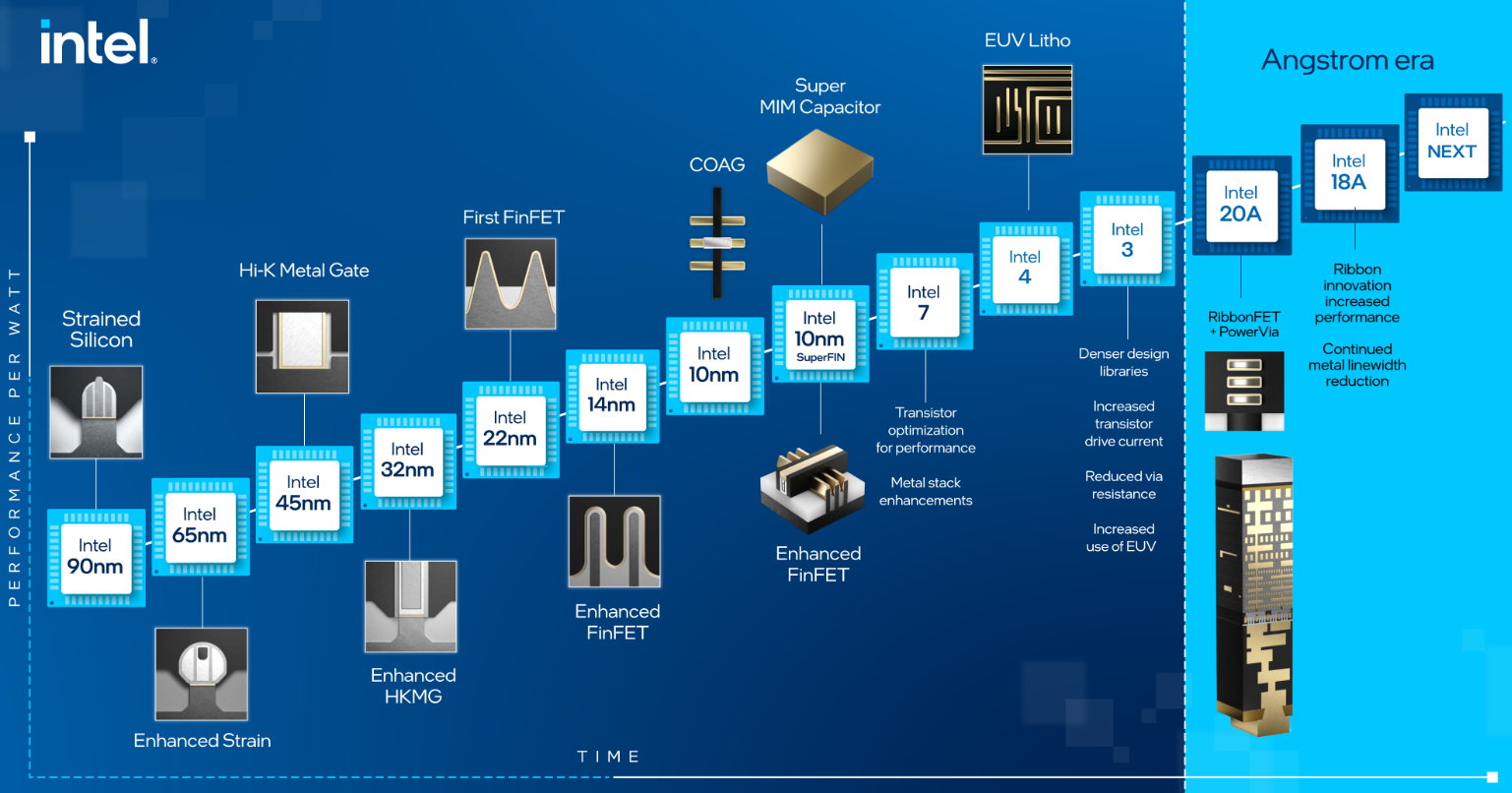

While Intel acknowledges that the law has run into natural obstacles in the form of physical limits, it says it is working on ways to sustain it through improvements in lithographic manufacturing processes, chip packaging and architecture. With these techniques, Intel promises to reach 1 trillion transistors on an integrated circuit by 2030.

Intel insists on this not only for technical reasons but also for commercial ones tied to the x86 architecture, with which it ruled the world’s computing industry with an iron fist in past decades. That dominance no longer exists, as shown by companies such as Apple, which uses ARM-based architectures in its personal computers, and by NVIDIA’s accelerator solutions in data centers.

The use of silicon is also reaching its limits (if it hasn’t already). The exponential increase in transistor density and the accompanying reduction in cost are long over, and with them Moore’s Law. The cause may be less the technology than the economics: very few manufacturers can afford the exorbitant costs of researching and designing new generations of chips, let alone building and maintaining the factories to produce them. Intel itself had serious problems with the transition to 10 nm process technologies.

For the future, completely new technologies will be needed, and they may well come from quantum computing. Intel is not alone in this field, nor is it at the forefront of its development, and it is far from the dominance it enjoyed when its microprocessors ushered in the modern era of personal computers. Applications for end customers are still decades away, but quantum computing is the next frontier, a technology with huge potential that will change everything we know about computing. Rest in peace, pioneer Gordon E. Moore, and with him his famous Law.